CODECO stands for Cognitive Decentralized Edge to Cloud Orchestration. This open source software framework, which plugs into Kubernetes, aims to make edge-cloud infrastructure more energy efficient and robust by improving how applications are deployed and run.

by Dean Kelly, Alka Nixon, and Colm Dunphy

The digital landscape is evolving rapidly, driven by the increasing demands of intelligent services across domains like smart mobility, Industry 4.0, and eHealth. With nearly 40% of IoT data now processed at the network’s edge, traditional cloud paradigms are demonstrating significant limitations, particularly in the context of next-generation mobile IoT and the emerging Internet of Nanothings.

These evolving environments require scalable, mobile, and dynamic infrastructures. Edge computing and decentralized Edge-Cloud architectures have become essential to meet these needs. In the era of 5G and 6G networks, applications must be lightweight, portable, and context-aware, while being capable of real-time decision-making on data computation, placement, and security.

Enter CODECO: a cognitive, decentralized Edge-Cloud management framework purpose-built to overcome the unique challenges of this new era. CODECO reimagines the Edge-Cloud continuum with a focus on dynamic interoperability, secure data flows, and intelligent orchestration, paving the way for next-generation services.

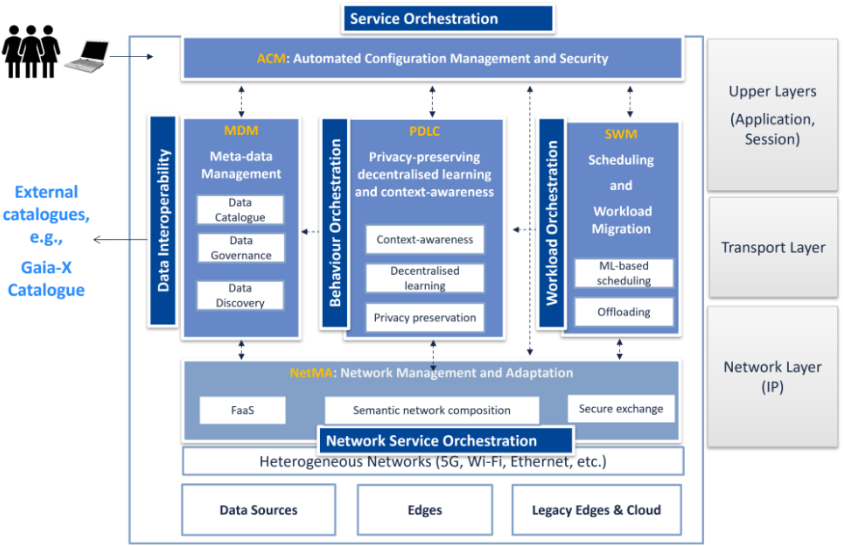

CODECO architecture

Developed by a consortium of 16 organizations, CODECO is built on five interconnected components that together enable intelligent, secure, and adaptive Edge-Cloud operations. Red Hat leads development of the Automated Configuration Management (ACM) component, which installs and deploys the entire framework in a single-cluster environment. (CODECO’s ACM is not to be confused with the Red Hat product Red Hat Advanced Cluster Management, which is also abbreviated ACM.) Multicluster environments will be supported in the near future.

Here’s an overview of CODECO’s five core components:

1. Automated Configuration Management (ACM)

Led by Red Hat, ACM orchestrates the setup of all components and ensures seamless interconnection between Edge and Cloud. It automates installation and configuration, provides a graphical user interface, manages the application lifecycle, and unifies monitoring and system initialization based on business and network requirements.

ACM also handles the CODECO Application Model (CAM), which extends beyond standard Kubernetes deployments. Standard deployments typically focus on resource allocation and basic networking; CAM instead lets developers declaratively specify non-functional properties in the application spec.

2. Metadata Management (MDM)

MDM supports real-time Edge and Cloud-Edge operations through a semantic data catalog, enabling compliance, data discovery, and orchestration. It continuously updates itself with dataset metadata to facilitate efficient storage and data movement across distributed systems.

3. Privacy-preserving Decentralized Learning and Context awareness (PDLC)

PDLC integrates context awareness, privacy preservation, and decentralized learning. A dedicated agent monitors system conditions and provides inputs for optimization, enabling dynamic adjustment of computation and networking resources through a distributed learning submodule. PDLC employs AI/ML techniques, including Graph Neural Networks (GNNs) for spatial-temporal modeling and Reinforcement Learning (RL) algorithms that continuously learn from system behavior to make intelligent orchestration decisions. Acting as the cognitive “brain” of the CODECO platform, the RL model processes multi-dimensional data from nodes, pods, and context-aware metrics to generate optimized CRDs (Custom Resource Definitions) that guide resource allocation and workload placement across the Edge-Cloud continuum.
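To make the idea of “learning from system behavior” concrete, here is a deliberately simplified sketch. It is not CODECO’s GNN/RL model: it swaps in a minimal epsilon-greedy multi-armed bandit, one of the simplest reinforcement-learning settings, that learns which node gives the lowest latency. The node names, latencies, and noise level are hypothetical.

```python
import random


def epsilon_greedy_placement(latency_ms, rounds=500, epsilon=0.1, noise_ms=2.0, seed=7):
    """Toy epsilon-greedy bandit that learns which node gives the lowest latency.

    latency_ms maps a hypothetical node name to its true mean latency.
    Each placement observes a noisy latency; the reward is its negative,
    so lower latency means higher reward.
    """
    rng = random.Random(seed)
    nodes = list(latency_ms)
    counts = {n: 0 for n in nodes}
    mean_reward = {n: 0.0 for n in nodes}

    for step in range(rounds):
        if step < len(nodes):
            node = nodes[step]                      # try every node once first
        elif rng.random() < epsilon:
            node = rng.choice(nodes)                # explore a random node
        else:
            node = max(nodes, key=mean_reward.get)  # exploit the best estimate so far
        observed = latency_ms[node] + rng.gauss(0.0, noise_ms)
        reward = -observed
        counts[node] += 1
        # Incremental update of the running mean reward for this node.
        mean_reward[node] += (reward - mean_reward[node]) / counts[node]
    return counts, mean_reward


counts, estimates = epsilon_greedy_placement({"edge-a": 12.0, "edge-b": 30.0, "cloud": 80.0})
print(counts)
```

The exploration rate `epsilon` trades off trying other nodes (in case conditions change) against exploiting the current best estimate; a production model would also use richer state, such as the node, pod, and context metrics described above.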

4. Scheduling and Workload Migration (SWM)

SWM handles the deployment, monitoring, and migration of containerized workloads within the cluster. Using real-time context indicators (e.g., latency, energy efficiency, user quality of experience), SWM aims for optimal placement and resource usage when deploying an application onto a cluster.
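One common way to combine several context indicators into a placement decision is a weighted score over normalized metrics. The sketch below assumes that approach; the node names, metric values, and weights are hypothetical, and SWM’s actual scheduling logic may differ.

```python
from dataclasses import dataclass


@dataclass
class NodeMetrics:
    name: str
    latency_ms: float  # lower is better
    energy_w: float    # lower is better
    qoe: float         # 0..1, higher is better


def rank_nodes(nodes, w_latency=0.5, w_energy=0.3, w_qoe=0.2):
    """Rank nodes by a weighted score built from min-max normalized indicators."""
    def normalize(values):
        lo, hi = min(values), max(values)
        return [0.0 if hi == lo else (v - lo) / (hi - lo) for v in values]

    lat = normalize([n.latency_ms for n in nodes])
    eng = normalize([n.energy_w for n in nodes])
    qoe = normalize([n.qoe for n in nodes])
    scored = []
    for i, node in enumerate(nodes):
        # Latency and energy are costs, so invert them; QoE is a benefit.
        score = w_latency * (1 - lat[i]) + w_energy * (1 - eng[i]) + w_qoe * qoe[i]
        scored.append((score, node.name))
    return [name for _score, name in sorted(scored, reverse=True)]


nodes = [
    NodeMetrics("edge-1", latency_ms=10, energy_w=40, qoe=0.9),
    NodeMetrics("edge-2", latency_ms=25, energy_w=30, qoe=0.8),
    NodeMetrics("cloud-1", latency_ms=60, energy_w=20, qoe=0.7),
]
print(rank_nodes(nodes))  # ['edge-1', 'edge-2', 'cloud-1']
```

Shifting weight toward `w_energy` favors the lower-power cloud node, which shows how the same indicators can express different operator priorities.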

5. Network Management and Adaptation (NetMA)

NetMA automates interconnectivity between Edge and Cloud systems. It utilizes ALTO (Application Layer Traffic Optimization), leverages AI/ML for predictive behavior, and supports secure data exchange, semantic interoperability, and software-defined network functions.
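ALTO (RFC 7285) exposes network information to applications through maps such as a cost map, which gives a cost between pairs of provider-defined network locations (PIDs). The sketch below reads a hypothetical cost map in that shape and picks the cheapest destination; the PID names and costs are made up, and NetMA’s decentralized ALTO extensions are not modeled.

```python
import json

# Hypothetical ALTO cost map response, shaped like the cost map format in RFC 7285.
COST_MAP_JSON = """
{
  "meta": {
    "cost-type": {"cost-mode": "numerical", "cost-metric": "routingcost"}
  },
  "cost-map": {
    "pid-users": {"pid-far-edge": 2, "pid-edge": 5, "pid-cloud": 20},
    "pid-edge":  {"pid-far-edge": 3, "pid-edge": 1, "pid-cloud": 15}
  }
}
"""


def cheapest_destination(cost_map_doc, src_pid, candidates):
    """Return the candidate PID with the lowest ALTO cost from src_pid, or None."""
    row = cost_map_doc["cost-map"].get(src_pid, {})
    reachable = [pid for pid in candidates if pid in row]
    if not reachable:
        return None
    return min(reachable, key=lambda pid: row[pid])


doc = json.loads(COST_MAP_JSON)
print(cheapest_destination(doc, "pid-users", ["pid-edge", "pid-cloud", "pid-far-edge"]))  # pid-far-edge
```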

Together, these components create a resilient, intelligent Edge-Cloud management ecosystem, with the single-cluster setup serving as a foundational step toward future multicluster deployments.

Real-world applications of CODECO

To show the impact and versatility of CODECO, here are three use cases that demonstrate its capabilities:

Smart monitoring of public infrastructure

In partnership with the City of Göttingen, CODECO integrates with the city’s IoT infrastructure and mobile Edge nodes (e.g., sensors on buses, AI-enabled cameras at traffic lights, and internal positioning sensors) to monitor infrastructure such as roads, traffic, and public buildings in real time.

Key elements include:

- A crowdsensing Smart App (built on privacy-by-design principles: data stays on users’ devices and is anonymized)

- On-bus analytics and data fusion (powered by PDLC)

- Adaptive networking and content offloading (handled by ACM, NetMA, and SWM)

The outcome: improved infrastructure planning and real-time citizen feedback through decentralized Edge computing, leading to an overall enhanced Quality of Experience (QoE).

Vehicular digital twin for safe urban mobility

This use case focuses on increasing safety for Vulnerable Road Users (VRUs) via a “Vehicular Digital Twin” deployed over a 5G-enabled Edge infrastructure.

Key technologies include:

- Real-time data (from roadside units [RSUs] and urban sensors)

- Predictive analytics and warning systems (using distributed AI/ML)

- ACM (which automatically deploys CODECO components onto the edge)

The result is a context-aware, real-time safety system that can anticipate and mitigate road hazards before they occur.

Decentralized media delivery streaming

CODECO is also applied to optimize media content distribution (video, gaming, AR/XR) across decentralized Edge-Cloud clusters.

Key highlights include:

- Use of the NetMA component (with a decentralized ALTO protocol extended to support the far edge and the cloud)

- Orchestration of virtual delivery points (for optimal network and compute resource utilization)

- Dynamic instantiation of services (based on real-time demand and network status)

The key benefit is a joint optimization of computational and networking resources, leading to better performance and lower infrastructure costs for media streaming.

Red Hat’s role in CODECO

Red Hat leads the CODECO Automated Configuration Management (ACM) component, which is central to both the deployment of the CODECO framework and the custom applications built on it. Serving as the primary entry point to the CODECO platform, ACM is responsible for framework configuration and deployment, user interfacing, application lifecycle management, and monitoring coordination.

Framework deployment

CODECO is packaged as a cloud-native Kubernetes Operator built with the Operator SDK, and it supports two deployment modes for different infrastructure scenarios: single-cluster and multicluster. In single-cluster mode, a single deploy command triggers an automated workflow of pre-deployment repository cloning, dependency resolution, and post-deployment component integration. Pre-deploy scripts clone and prepare five major component repositories (SWM, MDM, PDLC, NetMA, and the monitoring infrastructure). Post-deploy scripts then install the supporting distributed systems in sequence, including Kafka message brokers, Neo4j graph databases, Prometheus monitoring stacks, CNI networking layers, and AI/ML inference engines, while the operator handles dependency management and configuration throughout.
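Sequential installation with dependency resolution amounts to installing components in a topological order of their dependency graph. The sketch below shows that idea with Python’s standard `graphlib`; the dependency edges are hypothetical and are not taken from the CODECO operator.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# Hypothetical dependency graph: each key lists the components it needs installed first.
DEPENDENCIES = {
    "cni": set(),
    "prometheus": {"cni"},
    "kafka": {"cni"},
    "neo4j": {"cni"},
    "monitoring": {"prometheus"},
    "netma": {"cni"},
    "mdm": {"kafka", "neo4j"},
    "pdlc": {"kafka", "prometheus"},
    "swm": {"pdlc", "netma"},
}


def install_order(dependencies):
    """Return an order in which every component appears after its dependencies.

    TopologicalSorter raises graphlib.CycleError if the graph has a cycle.
    """
    return list(TopologicalSorter(dependencies).static_order())


for component in install_order(DEPENDENCIES):
    print(f"installing {component}")  # placeholder for the real install step
```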

For multicluster operation, we use Open Cluster Management (OCM) to dynamically apply components across clusters as they are needed. To do this, we combine the OCM Policy Framework with the OCM Policy Generator.
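The Policy Generator turns plain Kubernetes manifests into OCM policies from a declarative config. The sketch below builds such a config as a Python dict; the component directories, namespace, and cluster label are hypothetical, and the field names reflect our understanding of the `PolicyGenerator` format, so check them against the generator reference for your OCM version.

```python
import json

# Hypothetical CODECO component directories, each holding plain Kubernetes manifests.
COMPONENTS = ["swm", "mdm", "pdlc", "netma"]


def policy_generator_config(components, namespace="codeco-policies", cluster_labels=None):
    """Build a PolicyGenerator config that wraps each component's manifests in a policy."""
    placement = {"labelSelector": {"matchLabels": cluster_labels or {}}}
    return {
        "apiVersion": "policy.open-cluster-management.io/v1",
        "kind": "PolicyGenerator",
        "metadata": {"name": "codeco-components"},
        "policyDefaults": {
            "namespace": namespace,          # hub namespace that holds the policies
            "remediationAction": "enforce",  # create/patch resources, not just report
            "placement": placement,          # which managed clusters receive them
        },
        "policies": [
            {"name": f"codeco-{c}", "manifests": [{"path": f"components/{c}/"}]}
            for c in components
        ],
    }


config = policy_generator_config(COMPONENTS, cluster_labels={"codeco": "enabled"})
# JSON is also valid YAML 1.2, so this output can be saved as the generator config file.
print(json.dumps(config, indent=2))
```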

Open Cluster Management (OCM)

Open Cluster Management (OCM) is an open source, community-driven project designed to simplify the management of multiple Kubernetes clusters across various environments, including on-premises data centers and public or private clouds. It employs a hub-and-spoke architecture, where a central “hub” cluster controls and oversees multiple “managed” or “spoke” clusters.

A critical requirement for CODECO is consistent, dynamic deployment of components across all or selected managed clusters. To meet it, we adopted the OCM Policy Framework, which provides centralized, policy-driven orchestration with automated enforcement and self-healing. Through PolicySets and placement rules, OCM enables “deploy once from hub, distribute everywhere” functionality: CODECO components are automatically deployed to the targeted managed clusters via declarative policies.
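The “deploy once, distribute everywhere” behavior rests on label-based cluster selection. The simplified sketch below applies Kubernetes-style `matchLabels` semantics (every listed key must match; an empty selector matches everything) to a hypothetical set of managed clusters, and shows that a newly joined cluster with the right label is picked up without changing the selector.

```python
def select_clusters(managed_clusters, match_labels):
    """Return clusters whose labels contain every key/value pair in match_labels."""
    return sorted(
        name
        for name, labels in managed_clusters.items()
        if all(labels.get(key) == value for key, value in match_labels.items())
    )


# Hypothetical managed clusters and their labels.
clusters = {
    "cluster-1": {"codeco": "enabled", "tier": "edge"},
    "cluster-2": {"codeco": "enabled", "tier": "cloud"},
    "cluster-3": {"tier": "edge"},
}
print(select_clusters(clusters, {"codeco": "enabled"}))  # ['cluster-1', 'cluster-2']

# A newly joined cluster carrying the label is selected automatically.
clusters["cluster-4"] = {"codeco": "enabled", "tier": "edge"}
print(select_clusters(clusters, {"codeco": "enabled"}))  # ['cluster-1', 'cluster-2', 'cluster-4']
```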

OCM Policy Framework

The framework maintains the desired state through continuous compliance monitoring and automated remediation, while placement bindings target specific cluster groups based on labels and requirements. This approach keeps configuration consistent across the entire Edge-Cloud continuum: policies are applied automatically to newly joined clusters, and components that drift from their desired state are restored (self-healing). This makes it well suited to CODECO’s cognitive orchestration requirements across heterogeneous multicluster environments.
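Compliance monitoring and self-healing boil down to comparing desired and actual state, then either reporting drift or repairing it. The toy sketch below models one such pass for a single cluster with an “inform” (report only) and an “enforce” (repair) mode. The resource names and specs are hypothetical, and the whole-spec equality check is a simplification: real configuration policies compare only the fields they specify.

```python
def reconcile(desired, actual, remediation="enforce"):
    """One pass of a toy desired-state check for a single managed cluster.

    desired and actual map a hypothetical resource name to its spec (a dict).
    Resources that exist only in actual are left alone.
    Returns (compliant, drifted_names, resulting_actual_state).
    """
    drifted = sorted(name for name, spec in desired.items() if actual.get(name) != spec)
    if not drifted:
        return True, [], dict(actual)
    if remediation == "inform":
        return False, drifted, dict(actual)   # report the drift, change nothing
    repaired = dict(actual)
    for name in drifted:
        repaired[name] = dict(desired[name])  # restore the desired spec
    return True, drifted, repaired


desired = {"swm-controller": {"image": "swm:1.0", "replicas": 1}}
actual = {"swm-controller": {"image": "swm:1.0", "replicas": 0}, "extra": {"replicas": 2}}

print(reconcile(desired, actual, "inform"))
print(reconcile(desired, actual, "enforce"))
```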

Installing the OCM Policy Framework (its hub components and the policy add-ons on each managed cluster) lets us author policies on the hub. The hub then propagates those policies to the targeted managed clusters, where they are evaluated and acted on. In our case, policies are configured to target all managed clusters rather than a single one, ensuring consistency across the environment.

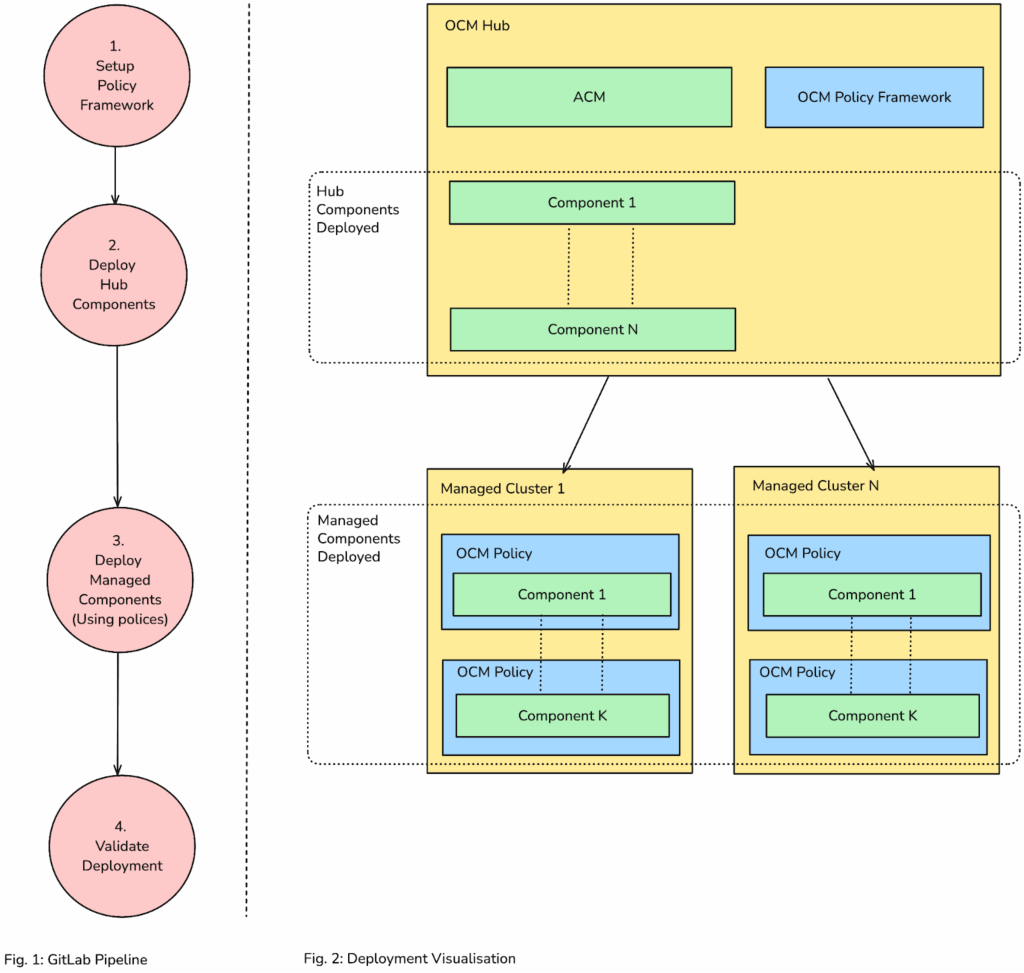

Finally, to automate this multicluster deployment, we implement a GitOps-driven CI/CD approach, as follows:

Figure 1 shows the GitLab pipeline that deploys the CODECO framework. It runs in four stages:

- Stage 1 applies the configuration the OCM hub needs to support the framework deployment, in particular the integration of the OCM Policy Framework with our multicluster OCM instance.

- Stage 2 installs the required CODECO framework components (component1… componentN) on the OCM hub cluster using ACM, by referencing a hub-deployment script in our ACM operator. In future work, we plan to integrate Red Hat’s Operator Lifecycle Manager (OLM) to give our operator a more production-ready deployment architecture.

- Stage 3, once the hub is configured, sets up the necessary framework components across the managed clusters (Managed Cluster 1 … Managed Cluster N). Here we use the Policy Generator to create OCM policies that deploy the components (component1… componentK) to every managed cluster registered to the hub.

- Stage 4 runs a validation check that tells the CODECO administrator whether the deployment succeeded.
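Stage 4 of the pipeline needs to decide whether every policy reached every managed cluster. A minimal sketch, assuming the check reads root policies from the hub (for example via `kubectl get policies -n <namespace> -o json`) and inspects the per-cluster `compliant` entries in each policy’s status, might look like this; the sample document, policy names, and cluster names are hypothetical.

```python
import json

# Hypothetical hub output, trimmed to the fields this check reads.
SAMPLE = json.dumps({
    "items": [
        {"metadata": {"name": "codeco-swm"},
         "status": {"compliant": "Compliant", "status": [
             {"clustername": "cluster-1", "compliant": "Compliant"},
             {"clustername": "cluster-2", "compliant": "Compliant"}]}},
        {"metadata": {"name": "codeco-netma"},
         "status": {"compliant": "NonCompliant", "status": [
             {"clustername": "cluster-1", "compliant": "Compliant"},
             {"clustername": "cluster-2", "compliant": "NonCompliant"}]}},
    ]
})


def failed_placements(policy_list_json):
    """Return (policy, cluster) pairs that are not reported as Compliant."""
    failures = []
    for policy in json.loads(policy_list_json).get("items", []):
        name = policy["metadata"]["name"]
        per_cluster = policy.get("status", {}).get("status", [])
        if not per_cluster:
            failures.append((name, "<no cluster status yet>"))
        for entry in per_cluster:
            if entry.get("compliant") != "Compliant":
                failures.append((name, entry.get("clustername", "<unknown>")))
    return failures


failures = failed_placements(SAMPLE)
print("deployment OK" if not failures else f"deployment incomplete: {failures}")
```

In a CI job, the script would typically exit with a non-zero status when `failures` is non-empty so that the pipeline stage is marked as failed.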

Figure 2 shows the result of running that pipeline. It visualizes an OCM hub with a user-specified number (N) of managed clusters (Managed Cluster 1 … Managed Cluster N) connected to it. All hub components (component1… componentN) are applied and configured through ACM, and all managed-cluster components (component1… componentK) are applied through OCM policies.

Application deployment

ACM serves as the primary interaction point for users through the CODECO Application Model (CAM). CAM acts as an enhanced application descriptor, allowing users to define both functional requirements and non-functional attributes such as Quality of Service (QoS) classes (Gold, Silver, BestEffort), security levels (High, Good, Medium, Low, None), compliance levels, energy consumption limits, and failure tolerance preferences. ACM also provides a graphical user interface that makes it simple for users to deploy and manage CODECO applications.
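The non-functional attributes above can be pictured as a typed application spec. The sketch below models part of a CAM-like spec in Python, using the QoS classes and security levels listed above; the class names, field names, and the channel check are hypothetical illustrations, not the actual CAM schema.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional, Tuple


class QoSClass(Enum):
    GOLD = "Gold"
    SILVER = "Silver"
    BEST_EFFORT = "BestEffort"


class SecurityLevel(Enum):
    HIGH = "High"
    GOOD = "Good"
    MEDIUM = "Medium"
    LOW = "Low"
    NONE = "None"  # "None" is a Python keyword, so the member is spelled NONE


@dataclass
class Microservice:
    name: str
    image: str
    qos_class: QoSClass = QoSClass.BEST_EFFORT
    security_level: SecurityLevel = SecurityLevel.NONE
    max_energy_w: Optional[float] = None  # hypothetical energy-consumption limit
    failure_tolerance: str = "default"    # hypothetical failure-tolerance preference


@dataclass
class CodecoAppSpec:
    name: str
    microservices: List[Microservice]
    # Service channels as (source, destination) microservice-name pairs.
    channels: List[Tuple[str, str]] = field(default_factory=list)

    def validate(self) -> List[str]:
        """Return human-readable problems; an empty list means the spec looks valid."""
        known = {m.name for m in self.microservices}
        problems = []
        for src, dst in self.channels:
            for endpoint in (src, dst):
                if endpoint not in known:
                    problems.append(f"channel {src}->{dst}: unknown microservice '{endpoint}'")
        return problems


spec = CodecoAppSpec(
    name="city-monitoring",
    microservices=[
        Microservice("ingest", "example.org/ingest:1.0", QoSClass("Gold"), SecurityLevel("High"), max_energy_w=50.0),
        Microservice("analytics", "example.org/analytics:1.0", QoSClass("Silver")),
    ],
    channels=[("ingest", "analytics"), ("analytics", "dashboard")],
)
print(spec.validate())
```

Parsing user-supplied strings through the enums (`QoSClass("Gold")`) rejects unknown values early with a `ValueError`, which is one reason to model these attributes as closed sets rather than free text.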

Once an application deployment request has been created by the user, ACM parses the application specification via its Kubernetes operator and coordinates with other components to determine the most suitable clusters for deployment. The SWM component then executes the deployment and, if necessary, manages workload migration across single- or multi-cluster environments.

Beyond deployment, ACM takes charge of the entire application lifecycle. It orchestrates application updates, manages removal based on user input, and leverages federated monitoring tools like Prometheus and Thanos to continuously track application health and performance. All metrics and feedback are then presented back to the user through CAM, ensuring visibility and control at every stage of the process.
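Prometheus, and Thanos Query, which serves the same HTTP API, return instant-query results as a JSON “vector” of labeled samples. The sketch below builds an instant-query URL and turns such a response into a per-pod map that could feed a status section. The server address, namespace, query, and sample response are hypothetical; no network call is made.

```python
import json
from urllib.parse import urlencode


def build_query_url(base_url, promql):
    """Build a Prometheus HTTP API instant-query URL (GET /api/v1/query)."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def vector_to_status(response_json, label="pod"):
    """Turn an instant-vector response into {label_value: float}."""
    body = json.loads(response_json)
    if body.get("status") != "success":
        raise RuntimeError(f"query failed: {body.get('error')}")
    result = {}
    for sample in body["data"]["result"]:
        key = sample["metric"].get(label, "<unknown>")
        _timestamp, value = sample["value"]  # values are returned as strings
        result[key] = float(value)
    return result


url = build_query_url(
    "http://prometheus.example:9090",
    'sum by (pod) (rate(container_cpu_usage_seconds_total{namespace="codeco-apps"}[5m]))',
)

# Hypothetical response in the shape returned by /api/v1/query.
SAMPLE_RESPONSE = json.dumps({
    "status": "success",
    "data": {"resultType": "vector", "result": [
        {"metric": {"pod": "ingest-0"}, "value": [1717000000.0, "0.25"]},
        {"metric": {"pod": "analytics-0"}, "value": [1717000000.0, "0.5"]},
    ]},
})
print(url)
print(vector_to_status(SAMPLE_RESPONSE))  # {'ingest-0': 0.25, 'analytics-0': 0.5}
```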

The example below illustrates a CODECO Application Model. Users define their application and microservice requirements in the Spec section, and ACM records runtime information collected through the CODECO monitoring framework in the Status section. A CODECO application is composed of multiple microservices linked via service channels. The Status section provides detailed insights into both application and infrastructure performance. Metrics are collected across multiple layers, including the pod, node, and service levels, and are pushed to CAM to reflect the operational state of deployed CODECO applications.

Strategic value to Red Hat

Participating in CODECO offers significant benefits to Red Hat:

Open source contributions

Because CODECO is entirely open source, Red Hat can actively contribute improvements upstream to its foundational technologies, including Red Hat Advanced Cluster Management for Kubernetes (RHACM). This direct engagement fosters innovation, strengthens the open source ecosystem, and ensures that Red Hat’s products remain at the forefront of Edge-Cloud advancements, benefiting the wider community and, ultimately, Red Hat’s own offerings.

Product exposure

Leading the Automated Configuration Management (ACM) component (built on Red Hat Advanced Cluster Management technology) positions Red Hat at the strategic core of CODECO. This leadership role enables Red Hat to significantly influence key technology decisions within the consortium, driving greater adoption and integration of its upstream solutions across the 16 participating organizations. This not only expands Red Hat’s market reach but also validates its technological leadership in the burgeoning Edge-Cloud space.

EU-funded innovation

This project is funded by the European Union Horizon Program. The program’s consortium-based model offers Red Hat a unique opportunity to engage in high-impact innovation with collaborators across Europe. This has enabled Red Hat to participate in cutting-edge research and development within the CODECO project team, exploring novel solutions and refining existing technologies. This commitment to collaborative innovation further solidifies Red Hat’s competitive edge.

Looking ahead: multicluster CODECO

As CODECO progresses into its next phase, multicluster deployment, we are tackling new challenges in orchestrating applications across distributed edge environments. We’re exploring novel uses of open source technologies and working to integrate those learnings back into the community.

We recently presented our vision for advancing ACM at DevConf.CZ; check out the talk to see how we’re pushing the boundaries of Edge-Cloud orchestration.

Stay tuned as CODECO continues to evolve, shaping the future of decentralized, intelligent Edge-Cloud computing. For more information on the project, check out the CODECO website and CODECO code base.

Funded by the European Union under Grant Agreement No. 101093069. Views and opinions expressed are however those of the author(s) only and do not necessarily reflect those of the European Union or European Research Executive Agency. Neither the European Union nor the granting authority can be held responsible for them.

This blog was previously published on Red Hat’s internal Product and Global Engineering here. It is republished here with minimal changes.