The EU Horizon project CODECO aims to provide smoother and more flexible support of services for distributed workloads across the edge-cloud continuum. Here’s what researchers discovered about multicluster networking solutions.

The shift towards microservices has redefined how modern applications are built and run. With this architectural style, developers can break down monolithic systems into smaller, independently deployable services. Containers have further accelerated this transformation by offering lightweight, portable environments to host these services efficiently.

Yet containers alone are not enough. They need orchestration: automated tooling that handles scheduling, scaling, networking, and fault recovery. That’s the role of Kubernetes, which over 84% of organizations use in production or are evaluating, according to a survey by the Cloud Native Computing Foundation (CNCF). Kubernetes is flexible by design and supports both single-cluster and multicluster configurations, and a growing number of organizations are adopting multicluster architectures to meet increasing demands. Multicluster environments, however, introduce new layers of complexity, especially around communication. Microservices deployed across clusters, possibly spanning public and private clouds or on-premises infrastructure, must be able to communicate with each other reliably and securely.

Today, the ecosystem offers several open source tools to tackle this challenge. Yet, as highlighted in CNCF’s Tech Radar, no single solution has emerged as the industry standard. This is where our work comes in: we conducted a hands-on evaluation of three prominent tools—Skupper, Submariner, and Istio—to better understand their networking performance in multicluster Kubernetes deployments.

In this article, we’ll explore the trade-offs and performance characteristics of each tool to help developers, DevOps engineers, and platform teams choose the right fit for their infrastructure. The article is based on work accepted for publication in the industry track of the 2025 International Conference on Performance Engineering (ICPE), held in Toronto, Canada. ICPE, jointly organized by ACM and SPEC, is the premier venue for advancing the state of the art in performance engineering, with a strong focus on bridging academic research and industrial practice.

Our work was conducted as part of the CODECO project, which is funded by the European Union and carried out by a consortium of 16 partners across Europe and associated states, including Israel and Switzerland. The overall aim of CODECO is to support services across the edge-cloud continuum more smoothly and flexibly by creating a novel, cognitive edge-cloud management framework. Multicluster networking is central to that aim: it enables seamless communication between distributed workloads regardless of their physical or administrative boundaries, supporting low-latency data exchange, service discovery, and dynamic workload placement across the continuum.

From simplicity to scale: the challenge of cross-cluster communication

Kubernetes environments can be deployed in two common configurations: single cluster and multicluster. For many teams just getting started, a single cluster is often enough. All workloads run in the same environment, managed by a single control plane. This setup makes deployment, scaling, and monitoring straightforward, and it keeps interservice communication fast and efficient. It’s a clean, centralized way to get things done.

But as applications and teams grow, single clusters start to show limits. Larger workloads can lead to resource contention, and a single point of failure might bring down critical services. What’s more, complex use cases—such as data sovereignty, geographic distribution, or isolated dev/staging/prod environments—often require stronger guarantees around separation, resilience, and compliance.

In a multicluster setup, organizations deploy multiple Kubernetes clusters across different regions, clouds, or datacenters. This architecture supports greater scalability and fault isolation. For instance, if one cluster fails, others can continue running unaffected. Clusters can be geographically distributed to reduce latency for end users or tailored to comply with specific regulatory requirements, such as hosting sensitive data in private clusters while deploying front-end services publicly. According to the CNCF’s annual survey, nearly 43% of organizations now operate in hybrid cloud environments that mix private and public infrastructure. This underscores a trend: multicluster deployments are becoming not just an option but a necessity for many enterprises.

In single-cluster deployments, services communicate with each other directly, with Kubernetes handling DNS, load balancing, and service discovery behind the scenes. In multicluster environments, things get trickier. Services need to communicate across boundaries—sometimes between public cloud regions, sometimes across private networks. Traditional workarounds such as VPNs or firewall exceptions are brittle, hard to scale, and don’t always play well with Kubernetes-native tooling.

Multicluster networking solutions aim to simplify and secure cross-cluster communication without extensive manual configuration. In the following sections, we’ll explore how these tools work, what trade-offs they introduce, and how they perform in benchmarks on a bare-metal testbed.

Exploring the options: multicluster networking solutions

To bridge the networking gap between Kubernetes clusters, several tools have emerged, each with its own architecture, strengths, and trade-offs. The open source solutions we chose to evaluate—Skupper, Submariner, and Istio—all support multicluster communication but take very different paths to get there.

Skupper: lightweight and developer-friendly

Skupper takes a unique approach by creating a Virtual Application Network (VAN) at the application layer. It uses Layer 7 application routers to connect services across namespaces in different clusters—no VPNs, no changes to application code, and no need for cluster administrator privileges. This makes Skupper particularly appealing for developers who want to connect services quickly and securely.

What stands out:

- Simple CLI-based setup with minimal permissions

- Supports HTTP/1.1, HTTP/2, gRPC, and TCP

- Uses mTLS for secure inter-cluster communication

- Built-in redundant routing for better fault tolerance

Skupper operates at the namespace level, making it a good fit for loosely coupled services or specific cross-cluster workloads, though it may not offer the same depth of traffic control as service meshes.

Submariner: cluster-level connectivity via secure tunnels

Submariner works at a lower level in the network stack (Layer 3), creating direct encrypted tunnels between Kubernetes clusters. It’s designed for cluster administrators who need flat network topologies, letting services in different clusters communicate as if they were on the same network. Submariner uses IPSec with Libreswan by default, but it also supports alternatives like WireGuard and VXLAN for different performance or security requirements.

Why you might choose Submariner:

- Cluster-level communication without modifying application logic

- Flexible backend tunneling protocols

- Works across cloud providers (AWS, Azure, GCP)

- Simple to set up and manage with the right permissions

However, Submariner’s design assumes administrative control and access to cluster internals, and its security policy features are more limited than those of a service mesh.

Istio: full-service mesh with deep observability

Istio is a service mesh primarily used for intracluster communication, but it can also span multiple clusters in a single mesh. Operating at Layers 4 and 7, Istio provides advanced traffic routing, observability, and policy enforcement. It’s designed for teams that want deep control over microservice behavior, both inside a cluster and across clusters.

Setting up Istio in a multicluster configuration requires more work (especially in on-prem environments, which need additional components such as MetalLB to provide LoadBalancer services for the east-west gateways), but the payoff is strong: consistent security policies, zero-trust architecture, and integrated metrics and telemetry.

Key capabilities:

- Traffic shaping and fault injection

- Centralized observability and telemetry

- mTLS and fine-grained access policies

- Support for multiple deployment modes (sidecar and ambient)

Istio is powerful but comes with a higher configuration overhead, making it best suited for environments with dedicated platform teams or DevOps maturity.

Testbed and workload

Our experiments were conducted on two bare-metal Kubernetes clusters, each consisting of five Dell PowerEdge R640 servers (three control-plane and two worker nodes) running Red Hat OpenShift 4.16, which is based on Kubernetes 1.29. Each server was equipped with 40 CPUs (Intel Xeon Gold 6230), 376 GiB of RAM, SSD storage, and 25 Gbps RDMA-capable network interfaces. Networking was managed by the OVN-Kubernetes CNI plugin.

To ensure performance stability and accurate measurements, components of the multicluster networking solutions were pinned to a dedicated gateway node, while all performance tests ran on a separate data-plane node. CPU cores were isolated through a Performance Profile and carefully allocated to minimize interference.
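On OpenShift, this kind of isolation is declared in a PerformanceProfile resource, which splits a node’s CPUs into a reserved (housekeeping) set and an isolated set. The sketch below builds such a manifest in Python and prints it as JSON, which `oc apply -f` accepts just like YAML. The profile name, core ranges, and node selector label are hypothetical; the article does not report the actual allocation. The helper refuses overlapping CPU sets, a common configuration mistake.

```python
import json


def parse_cpuset(spec: str) -> set[int]:
    """Expand a Linux cpuset string such as "0-3,8,10-11" into a set of CPU IDs."""
    cpus: set[int] = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            lo, hi = (int(x) for x in part.split("-", 1))
            if lo > hi:
                raise ValueError(f"invalid range: {part!r}")
            cpus.update(range(lo, hi + 1))
        else:
            cpus.add(int(part))
    return cpus


def performance_profile(name: str, reserved: str, isolated: str, node_label: str) -> dict:
    """Build a PerformanceProfile manifest, rejecting overlapping CPU sets."""
    overlap = parse_cpuset(reserved) & parse_cpuset(isolated)
    if overlap:
        raise ValueError(f"reserved and isolated CPUs overlap: {sorted(overlap)}")
    return {
        "apiVersion": "performance.openshift.io/v2",
        "kind": "PerformanceProfile",
        "metadata": {"name": name},
        "spec": {
            # Reserved CPUs run housekeeping (kubelet, system daemons);
            # isolated CPUs are kept free of it for application workloads.
            "cpu": {"reserved": reserved, "isolated": isolated},
            # Only nodes carrying this (hypothetical) label get the profile.
            "nodeSelector": {node_label: ""},
        },
    }


# Hypothetical allocation: 2 housekeeping CPUs and 38 isolated CPUs on a 40-CPU node.
profile = performance_profile(
    name="benchmark-isolation",
    reserved="0-1",
    isolated="2-39",
    node_label="node-role.kubernetes.io/worker-cnf",
)
print(json.dumps(profile, indent=2))
```

Keeping the reserved set small leaves most cores for the benchmark pods, but it must still be large enough for the node’s own daemons.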

Submariner was configured with one cluster as the hub and the other as a spoke. All solutions were deployed with their default settings to represent typical usage scenarios. Power consumption was monitored using Kepler, which collects CPU and system-level metrics to estimate energy usage.
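Turning power readings into energy for a test run is an integration over time. The sketch below assumes evenly spaced power samples in watts (for example, exported by a monitoring tool such as Kepler; the interval and values here are hypothetical) and applies the trapezoidal rule.

```python
def energy_joules(power_watts: list[float], interval_s: float) -> float:
    """Integrate evenly spaced power samples (W) into energy (J) with the trapezoidal rule."""
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    if len(power_watts) < 2:
        return 0.0  # at least two samples are needed to span an interval
    inner = sum(power_watts[1:-1])
    return interval_s * (power_watts[0] / 2 + inner + power_watts[-1] / 2)


def joules_to_wh(joules: float) -> float:
    """Convert joules to watt-hours (1 Wh = 3600 J)."""
    return joules / 3600.0


# Hypothetical readings: one sample every 5 s across a 60 s test run (13 samples).
samples = [180.0, 210.0, 215.0, 212.0, 214.0, 216.0, 213.0,
           211.0, 215.0, 214.0, 212.0, 200.0, 185.0]
e = energy_joules(samples, interval_s=5.0)
print(f"{e:.0f} J ({joules_to_wh(e):.2f} Wh)")
```

Comparing solutions by energy per run, rather than by peak power, accounts for how long each one draws that power.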

Architecturally, Skupper operates at Layer 7 by creating a virtual application network that transparently routes traffic through a dedicated router pod. Submariner uses Layer 3 routing with daemon agents and gateway nodes to forward encrypted traffic between clusters. Istio was deployed in multi-primary mode with sidecar proxies, routing traffic through east-west gateways.

For performance evaluation, we employed two primary methodologies:

Layer 4 Performance: Evaluated using uperf with varying message sizes and thread counts. Two test profiles were used: a continuous data stream to measure throughput and a request-response pattern to measure transactions per second (TPS). Each test lasted 60 seconds and was repeated five times to ensure consistency.

Layer 7 Performance: Measured using wrk2, which issued HTTP GET requests at a constant rate to Nginx servers deployed across clusters. This setup simulated web traffic with fixed request sizes, and each test used the same duration (60 seconds) and number of repetitions (five) as the Layer 4 tests.

Performance and resource overhead evaluation of multicluster networking solutions

We first established a baseline for network performance without any multicluster solution, running the tests over the Kubernetes pod network (clusternet) and the host network (hostnet). Both showed similar peak throughput of approximately 22 Gbps for intra- and inter-cluster communication alike. TPS peaked at about 240k for intra-cluster hostnet and decreased as message sizes increased.

Next, we evaluated the three multicluster networking solutions on throughput, latency/TPS, and resource consumption.

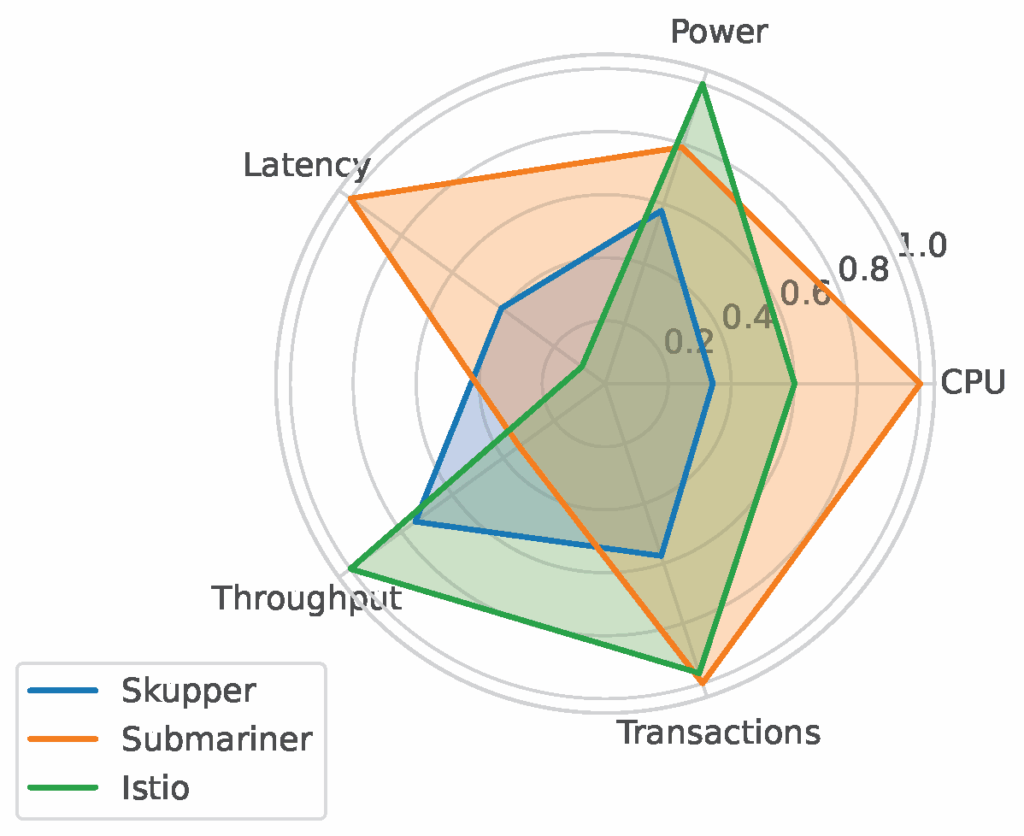

Throughput: Skupper was limited by an internal bottleneck, reaching a peak throughput of around 8 Gbps. Submariner’s throughput was capped at approximately 2.6 Gbps because its IPSec encryption ran on a single CPU core. Istio achieved the highest throughput among the solutions, at nearly 15 Gbps. All three solutions fell short of the baseline.
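Expressing each peak as a fraction of the roughly 22 Gbps baseline makes the gap easier to compare. The sketch below uses the approximate peak values quoted above, so the resulting percentages are approximate too.

```python
BASELINE_GBPS = 22.0  # approximate baseline peak throughput reported above

# Approximate peak throughput per solution, as reported above.
peak_gbps = {"Istio": 15.0, "Skupper": 8.0, "Submariner": 2.6}


def fraction_of(baseline: float, value: float) -> float:
    """Return value as a fraction of baseline."""
    return value / baseline


# Print solutions from highest to lowest throughput.
for name, gbps in sorted(peak_gbps.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<11} {gbps:5.1f} Gbps  {fraction_of(BASELINE_GBPS, gbps):4.0%} of baseline")
```

This works out to roughly 68% of the baseline for Istio, 36% for Skupper, and 12% for Submariner.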

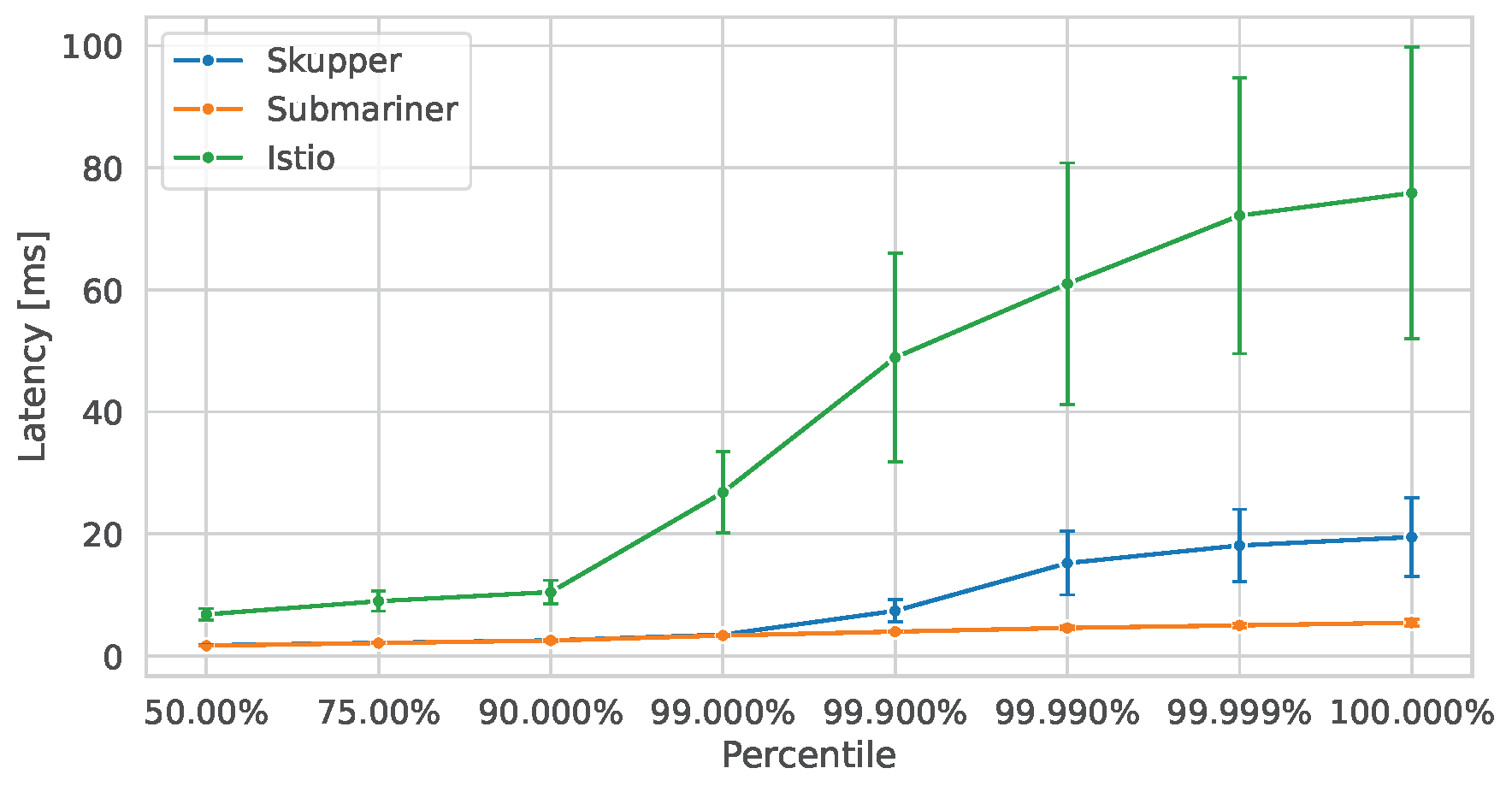

Latency and TPS: Request-response tests revealed a significant drop in TPS when these solutions were introduced. The baseline achieved around 88k TPS, while Skupper, Submariner, and Istio achieved roughly 14k, 15.5k, and 23.5k, respectively. Submariner consistently delivered the lowest latency. Istio, despite its higher throughput, exhibited the highest and most variable latency, especially at the tail (99th percentile and above). Skupper’s latency was close to Submariner’s up to the 99th percentile but rose sharply beyond it.
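Tail percentiles depend on how latency is recorded. wrk2 sends requests on a fixed schedule and measures each latency from the time the request was supposed to be sent, so one slow response also penalizes the requests queued behind it (its correction for coordinated omission). The sketch below simulates that accounting for a single connection and computes nearest-rank percentiles. The rate and service times are hypothetical, and this illustrates the idea rather than reproducing wrk2’s implementation.

```python
import math


def scheduled_latencies(rate_per_s: float, service_times_s: list[float]) -> list[float]:
    """Latency of each request measured from its scheduled send time.

    Models one connection that sends request i at i / rate_per_s, or as soon
    as the previous response arrives if it is running behind schedule.
    """
    interval = 1.0 / rate_per_s
    latencies = []
    previous_done = 0.0
    for i, service in enumerate(service_times_s):
        scheduled = i * interval
        start = max(scheduled, previous_done)  # wait until the connection is free
        previous_done = start + service
        latencies.append(previous_done - scheduled)  # includes time spent behind schedule
    return latencies


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile, for 0 < p <= 100."""
    if not values or not 0 < p <= 100:
        raise ValueError("need values and 0 < p <= 100")
    ordered = sorted(values)
    rank = math.ceil(p / 100 * len(ordered))  # 1-based rank
    return ordered[rank - 1]


# Hypothetical run: 10 requests/s, one 250 ms stall among 50 ms responses.
lat = scheduled_latencies(10.0, [0.05, 0.25, 0.05, 0.05, 0.05])
for p in (50, 99):
    print(f"p{p}: {percentile(lat, p) * 1000:.0f} ms")
```

Here p50 comes out at 150 ms and p99 at 250 ms. Timing only each request’s own service time would report a p50 of 50 ms, hiding the queueing delay that the single stall caused.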

Resource consumption (CPU and power): CPU usage was highest for Skupper, peaking at 11 cores. Submariner used the least CPU, around 4 cores. Istio’s CPU consumption was more variable but generally moderate. Power consumption largely tracked CPU usage, with Skupper consuming the most power, although Istio, rather than Submariner, consumed the least. When throughput was normalized by CPU usage (throughput per core), Istio still led, and Submariner outperformed Skupper despite its lower absolute throughput.
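Throughput per core is a simple ratio, but it is meaningful only when throughput and CPU usage come from the same measurement interval. The sketch below assumes paired samples and averages the per-sample ratios; the values are hypothetical, and the article does not specify its exact normalization procedure. Dividing total throughput by total CPU is an alternative that weights intervals differently.

```python
from statistics import mean


def gbps_per_core(samples: list[tuple[float, float]]) -> float:
    """Mean throughput per CPU core from paired (gbps, cpu_cores) samples."""
    ratios = []
    for gbps, cores in samples:
        if cores <= 0:
            raise ValueError("CPU usage must be positive")
        ratios.append(gbps / cores)
    if not ratios:
        raise ValueError("no samples")
    return mean(ratios)


# Hypothetical paired samples (throughput in Gbps, CPU usage in cores) from one test.
run = [(6.0, 5.0), (7.0, 6.0), (7.5, 6.5)]
print(f"{gbps_per_core(run):.2f} Gbps per core")
```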

Key findings

Submariner offered the lowest latency and the least CPU overhead, making it well suited to latency-sensitive applications that need consistent response times. However, its throughput was the lowest, limited by IPSec encryption. Istio achieved the highest throughput with moderate resource usage, but its latency varied widely, especially under multi-threaded loads. Skupper provided balanced performance and a simple setup but had the highest CPU and power consumption.

Each tool demonstrated clear trade-offs:

- Submariner: Low latency, low resource use, but limited throughput.

- Istio: High throughput, strong feature set, but higher latency and complexity.

- Skupper: Easy to deploy, moderate performance, but resource intensive.

Directions for research

While this study focused on evaluating the multicluster networking data plane using micro-benchmarks to assess key performance indicators such as throughput and latency, several important avenues remain for future exploration. To better reflect real-world usage patterns, we plan to extend our evaluation to include application-level benchmarks involving messaging systems, distributed databases, and microservices-based applications. This will provide deeper insights into how each solution performs under realistic workloads and operational scenarios.

Additionally, our current work did not assess the control plane, which plays a critical role in multicluster environments. Future research will examine essential control plane capabilities, such as service discovery time—the latency for a service created in one cluster to become accessible from another—and service failover time. These metrics are crucial for understanding the responsiveness and reliability of each solution in dynamic, production-like environments.
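One way to measure service discovery time is to note when a service is created in one cluster and then poll from the other cluster until the service answers. The sketch below keeps the check abstract: `probe` is a hypothetical callable (for example, a DNS lookup or HTTP request issued from a pod in the remote cluster) that returns True once the service is reachable. The polling interval bounds the precision, so it should be well below the times being measured.

```python
import time
from typing import Callable, Optional


def time_until_reachable(
    probe: Callable[[], bool],
    timeout_s: float = 60.0,
    interval_s: float = 0.1,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[float]:
    """Seconds from the call until probe() first returns True, or None on timeout.

    Call this right after creating the service in the source cluster. The probe
    should return False (not raise) while the service is still unreachable.
    """
    start = clock()
    while True:
        if probe():
            return clock() - start
        if clock() - start >= timeout_s:
            return None
        sleep(interval_s)


# Stand-in probe that starts succeeding after about 0.3 s, in place of a real check.
ready_at = time.monotonic() + 0.3
elapsed = time_until_reachable(lambda: time.monotonic() >= ready_at,
                               timeout_s=5.0, interval_s=0.05)
print(f"reachable after {elapsed:.2f} s")
```

The same loop could time failover by starting the clock when the serving cluster’s endpoint is taken down and probing until traffic is answered elsewhere.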

Moreover, this study evaluated scenarios involving only two interconnected clusters. We plan to expand our scope to include larger topologies with multiple interconnected clusters. This will allow us to analyze scalability and the behavior of each networking solution under increasing complexity, helping to identify potential performance bottlenecks or limitations that may emerge at scale. By addressing these dimensions, we aim to provide a more holistic understanding of multicluster networking solutions and offer actionable insights to guide system architects in deploying efficient and reliable Kubernetes-based infrastructures.

Funded by the European Union under Grant Agreement No. 101093069. Views and opinions expressed are however those of the author(s) only and do not necessarily reflect those of the European Union or European Research Executive Agency. Neither the European Union nor the granting authority can be held responsible for them.