How do you charge for a cloud? Researchers at the New England Research Cloud have developed a stack to make understanding and charging for usage much simpler.

Universities and research institutions are increasingly embracing the cloud as a means to bring down costs and fully utilize the technical resources they have on hand. But creating and maintaining a cloud is not free, which leads to a question that is mundane and unglamorous but still critical: who is paying for all of this? This question is especially important to the New England Research Cloud (NERC), which provides cloud services such as OpenStack and Red Hat OpenShift to multiple member institutions, including Harvard University, the Massachusetts Institute of Technology, and Boston University.

Let’s start with the obvious and seemingly simple answer: the people using the service should be billed for it. But how do we define “use”? After all, no report in OpenShift says, “User A used six pieces of cloud this past week.” Instead, we need to look at lower-level utilization metrics, such as CPU, memory, or storage. Sum those over a month, and you’ll have a good picture of the resources a user has consumed.
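
As a rough illustration of the “sum it over a month” step, here is a minimal sketch. It assumes usage arrives as timestamped samples (for example, CPU cores in use) and that each sample’s value holds until the next sample arrives. The function name and the sample data are hypothetical.

```python
from datetime import datetime, timedelta


def resource_hours(samples):
    """Integrate (timestamp, value) samples into resource-hours.

    Each sample's value is assumed to hold until the next sample
    (a step function), so the final sample contributes nothing.
    """
    ordered = sorted(samples)
    total = 0.0
    for (t0, v0), (t1, _) in zip(ordered, ordered[1:]):
        total += v0 * (t1 - t0).total_seconds() / 3600
    return total


start = datetime(2024, 1, 1)
cpu_samples = [
    (start, 2.0),                        # 2 cores for 10 hours
    (start + timedelta(hours=10), 4.0),  # 4 cores for 5 hours
    (start + timedelta(hours=15), 0.0),  # workload stopped
]
print(resource_hours(cpu_samples))  # 2*10 + 4*5 = 40.0
```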

It’s not quite that easy, however, because resources are not uniform. For example, a server with a GPU is more expensive (and thus more valuable) than one without. In a cloud environment, it’s also important to account for shared usage, with the aforementioned GPU potentially being shared among multiple projects.
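
Both complications can be sketched in a few lines, under stated assumptions. The hourly rates below are hypothetical, and splitting a shared node’s full monthly cost in proportion to each project’s GPU-hours is only one possible policy. Another policy would charge each project solely for the hours it actually used.

```python
# Hypothetical hourly rates: GPU time is priced far above CPU time.
RATES = {"cpu_core_hours": 0.02, "gpu_hours": 1.50}


def weighted_cost(usage):
    """Price heterogeneous usage, e.g. {"cpu_core_hours": 100, "gpu_hours": 10}."""
    return sum(RATES[kind] * amount for kind, amount in usage.items())


def apportion_shared(total_cost, usage_by_project):
    """Split a shared resource's cost in proportion to each project's usage."""
    total_usage = sum(usage_by_project.values())
    if total_usage == 0:
        return {project: 0.0 for project in usage_by_project}
    return {
        project: total_cost * used / total_usage
        for project, used in usage_by_project.items()
    }


# One GPU node, available for a 720-hour month, shared by two projects.
node_cost = 720 * RATES["gpu_hours"]
shares = apportion_shared(node_cost, {"project-a": 300, "project-b": 100})
print(shares)  # {'project-a': 810.0, 'project-b': 270.0}
```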

Solutions

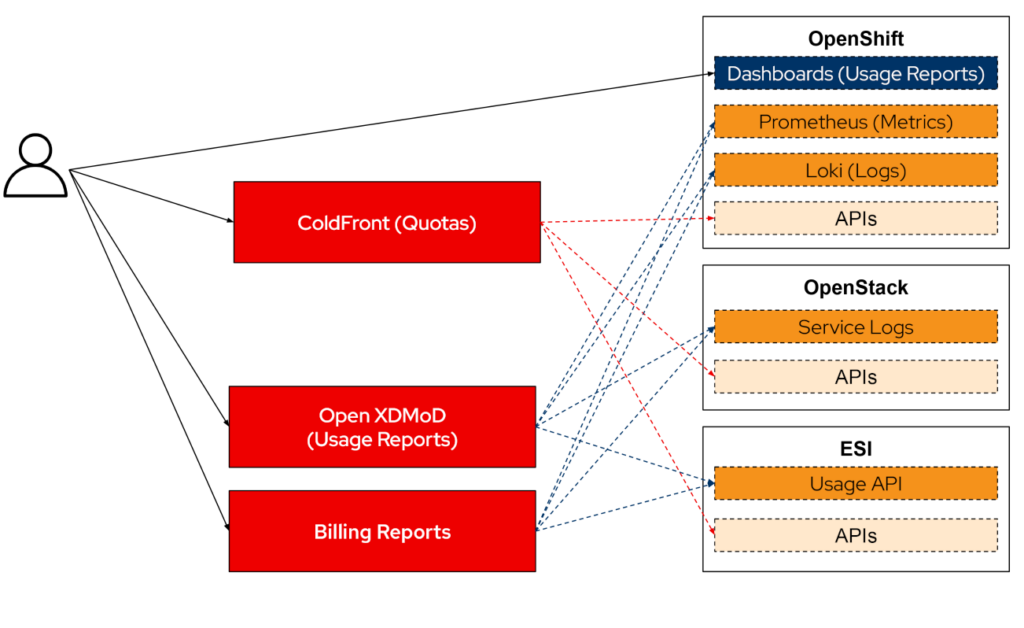

Those are some of the difficulties present when considering billing requirements. Fortunately, there are also solutions. OpenShift includes a monitoring stack that stores various utilization metrics in time series form. This stack includes Prometheus, an open source monitoring solution that allows for easy custom queries of the exact metrics we need. NERC uses Python scripts to run those custom queries, generating monthly usage reports to bill consumers.
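
The following is a minimal sketch of what such a script might look like. It uses only the standard library and the Prometheus HTTP API’s `/api/v1/query_range` endpoint. The endpoint URL, the token handling, and the choice of the kube-state-metrics series `kube_pod_container_resource_requests` as the billing basis are all assumptions, not NERC’s actual scripts. The network call is kept separate so that the parsing logic can be exercised offline.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical endpoint: on OpenShift, Prometheus data is usually reached
# through a route in front of the cluster's Thanos querier, with a bearer token.
PROMETHEUS_URL = "https://prometheus.example.org"

# Assumed billing basis: kube-state-metrics per-container CPU requests,
# summed by namespace, gives requested cores per project at each step.
CPU_REQUEST_QUERY = (
    'sum by (namespace) (kube_pod_container_resource_requests{resource="cpu"})'
)


def query_range(query, start, end, step="1h", token=None):
    """Call /api/v1/query_range; start/end are RFC 3339 strings or Unix times."""
    params = urllib.parse.urlencode(
        {"query": query, "start": start, "end": end, "step": step}
    )
    request = urllib.request.Request(f"{PROMETHEUS_URL}/api/v1/query_range?{params}")
    if token:
        request.add_header("Authorization", f"Bearer {token}")
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def core_hours_by_namespace(result, step_hours=1.0):
    """Approximate core-hours per namespace from a query_range matrix result.

    Each sample is treated as holding for one step, so summing the sample
    values and multiplying by the step length approximates the integral.
    step_hours must match the step used in the query.
    """
    if result.get("status") != "success":
        raise RuntimeError(f"Prometheus query failed: {result.get('error')}")
    totals = {}
    for series in result["data"]["result"]:
        namespace = series["metric"].get("namespace", "<none>")
        # Each value is a [unix_timestamp, "string_value"] pair.
        used = sum(float(value) for _, value in series["values"])
        totals[namespace] = totals.get(namespace, 0.0) + used * step_hours
    return totals


# Offline demonstration with a canned response in the matrix result format.
canned = {
    "status": "success",
    "data": {
        "resultType": "matrix",
        "result": [
            {"metric": {"namespace": "proj-a"},
             "values": [[1700000000, "2"], [1700003600, "3"]]},
        ],
    },
}
print(core_hours_by_namespace(canned))  # {'proj-a': 5.0}
```

A monthly report would call `query_range(CPU_REQUEST_QUERY, start, end)` with the month’s boundaries and pass the JSON it returns to `core_hours_by_namespace`.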

NERC takes a different approach with OpenStack, querying for VM creation and deletion events to build a precise view of each project’s resource consumption. Similarly, Loki provides log aggregation for OpenShift, letting operators sift through events to build a fine-grained picture of resource usage and availability. This lets them detect outages, during which users should not incur charges.
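
The next example is a minimal sketch of turning VM lifecycle events into billable hours with outage time removed. It assumes the events have already been normalized into `(timestamp, instance_id, kind)` tuples, and that outage windows (for example, ones identified from Loki logs) are known and do not overlap one another. Real OpenStack notifications carry many more fields, and all function names here are hypothetical.

```python
from datetime import datetime, timedelta


def runtime_intervals(events, period_end):
    """Pair create/delete events into (start, end) intervals.

    events: iterable of (timestamp, instance_id, kind), kind in {"create", "delete"}.
    Instances still running at period_end are closed at period_end.
    """
    running = {}
    intervals = []
    for timestamp, instance, kind in sorted(events):
        if kind == "create":
            running[instance] = timestamp
        elif kind == "delete" and instance in running:
            intervals.append((running.pop(instance), timestamp))
    intervals.extend((start, period_end) for start in running.values())
    return intervals


def overlap(a_start, a_end, b_start, b_end):
    """Length of the overlap between two intervals (zero if they are disjoint)."""
    return max(timedelta(0), min(a_end, b_end) - max(a_start, b_start))


def billable_hours(intervals, outages):
    """Total runtime minus time spent inside (non-overlapping) outage windows."""
    total = timedelta(0)
    for start, end in intervals:
        downtime = sum(
            (overlap(start, end, o_start, o_end) for o_start, o_end in outages),
            timedelta(0),
        )
        total += (end - start) - downtime
    return total.total_seconds() / 3600


t0 = datetime(2024, 1, 1)
events = [
    (t0, "vm-1", "create"),
    (t0 + timedelta(hours=10), "vm-1", "delete"),
    (t0 + timedelta(hours=5), "vm-2", "create"),
]
intervals = runtime_intervals(events, period_end=t0 + timedelta(hours=24))
outages = [(t0 + timedelta(hours=8), t0 + timedelta(hours=12))]
print(billable_hours(intervals, outages))  # (10 - 2) + (19 - 4) = 23.0
```

A production version would also need to handle instances created before the billing period began.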

Custom platforms under development can also be built with billing requirements in mind. For example, NERC is in the process of deploying a bare metal cloud using ESI, which allows projects to lease bare metal nodes. These leases are modeled so that creating usage reports is as simple as running a single command.
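
As a generic illustration of why leases make reporting straightforward, here is a sketch that clips each lease to a billing period and sums node-hours per project. The lease tuple layout and the function name are assumptions chosen for illustration. They are not ESI’s actual data model or the command it provides.

```python
from datetime import datetime


def lease_hours_in_period(leases, period_start, period_end):
    """Sum leased node-hours per project, counting only time inside the period.

    leases: iterable of (project, node, lease_start, lease_end).
    """
    totals = {}
    for project, _node, start, end in leases:
        inside = min(end, period_end) - max(start, period_start)
        hours = max(inside.total_seconds(), 0.0) / 3600
        totals[project] = totals.get(project, 0.0) + hours
    return totals


january = (datetime(2024, 1, 1), datetime(2024, 2, 1))
leases = [
    # Started in December: only the January portion is billed.
    ("proj-a", "node-01", datetime(2023, 12, 31, 12), datetime(2024, 1, 2)),
    # Runs into February: billed only up to the end of January.
    ("proj-b", "node-02", datetime(2024, 1, 31, 6), datetime(2024, 2, 3)),
]
print(lease_hours_in_period(leases, *january))  # {'proj-a': 24.0, 'proj-b': 18.0}
```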

Visibility for users

Generating usage reports is only half of the picture. The other half is breaking this information down for users so they can make informed choices about their usage. In NERC, this all starts with the ColdFront interface, an open source resource allocation management system that uses a plugin architecture for easy integration with the APIs provided by OpenShift and OpenStack. Through ColdFront, users can view their quotas and request additional resources.

To make it easier for users to understand what they are requesting, NERC uses the concept of services: a collection of resources such as CPUs, storage, and external addresses that are bundled and billed together as a unit. Examples include OpenStack CPU, OpenStack GPUA100, and OpenStack GPUV100, each of which entitles a user to a different set of OpenStack resources.
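
Bundling raises a practical question: how many units of a service does a given request consume? One plausible rule, sketched below, is to take the smallest number of bundles that covers every requested resource, so that a memory-heavy VM costs more than its vCPU count alone would suggest. The bundle sizes and rates here are hypothetical placeholders, not NERC’s published figures.

```python
import math

# Hypothetical service definitions: the resources one unit of each service grants.
SERVICES = {
    "OpenStack CPU": {"vcpus": 1, "ram_gb": 4},
    "OpenStack GPUA100": {"gpus": 1, "vcpus": 24, "ram_gb": 96},
}
HOURLY_RATE = {"OpenStack CPU": 0.01, "OpenStack GPUA100": 2.00}  # hypothetical


def units_needed(service, request):
    """Smallest number of service units that covers every requested resource.

    Requested resources that the service does not bundle are ignored.
    """
    bundle = SERVICES[service]
    return max(math.ceil(request.get(name, 0) / size) for name, size in bundle.items())


def charge(service, request, hours):
    """Cost of running a request under a service for the given number of hours."""
    return units_needed(service, request) * HOURLY_RATE[service] * hours


# A memory-heavy VM: 2 vCPUs but 16 GB of RAM needs 4 CPU units, not 2.
print(units_needed("OpenStack CPU", {"vcpus": 2, "ram_gb": 16}))  # 4
```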

It’s also important to display resource consumption data to the user. NERC’s tool of choice here is Open XDMoD, an open source tool that NERC has used in the past and that is therefore familiar to users. XDMoD supports OpenStack natively, but integrating it with OpenShift requires a bit more finesse: a custom script queries Prometheus and generates an XDMoD-compatible log file.

In the long term, NERC may shift to native OpenShift solutions. OpenShift already provides dashboards that let users query Prometheus and easily explore the vast number of available metrics. For storage reasons, these metrics are retained only for a short time, but a new OpenShift operator called Curator solves this problem with the help of the Koku Metrics operator, which condenses the raw data to a resolution more suitable for long-term reports.
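
Curator and Koku Metrics have their own processing pipelines. The example below is only a generic illustration of condensing raw samples to a coarser resolution, and it assumes that hourly averages are sufficient for long-term reports. The function name is hypothetical.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def downsample_hourly(samples):
    """Condense (timestamp, value) samples into one average value per hour."""
    buckets = defaultdict(list)
    for timestamp, value in samples:
        # Truncate each timestamp to the start of its hour.
        hour = timestamp.replace(minute=0, second=0, microsecond=0)
        buckets[hour].append(value)
    return {hour: sum(values) / len(values) for hour, values in sorted(buckets.items())}


# Eight raw samples taken every 15 minutes collapse into two hourly rows.
t0 = datetime(2024, 1, 1)
samples = [(t0 + timedelta(minutes=15 * i), float(i)) for i in range(8)]
print(downsample_hourly(samples))
```

When samples are evenly spaced across each hour, the hourly average multiplied by one hour gives the same resource-hours as the raw data, which is what billing needs. A capacity-planning report might keep the per-hour maximum instead.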

The final piece of the puzzle is billing: sending NERC users a periodic invoice so they know how much to pay. Currently, NERC uses a custom script to create spreadsheets, which are then edited into invoices and emailed to each user. This isn’t sustainable in the long term, so NERC will be moving to a new tool that automates much of this workflow. That tool has a broad set of requirements. The most obvious is invoicing, but nearly as important is a web dashboard that gathers usage data across all NERC offerings and presents it to the user. The tool should also support credits for new users or contributors.
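
The sketch below shows one way credits might work, assuming each account holds a single credit balance that is drawn down before anything is billed. The data layout, function name, and figures are hypothetical. `Decimal` is used so that currency amounts avoid binary floating-point rounding.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass
class InvoiceLine:
    service: str
    amount: Decimal


def build_invoice(lines, credit_balance):
    """Apply an account's credit balance to its charges for one period."""
    subtotal = sum((line.amount for line in lines), Decimal("0"))
    credit_applied = min(subtotal, credit_balance)
    return {
        "subtotal": subtotal,
        "credit_applied": credit_applied,
        "amount_due": subtotal - credit_applied,
        "credit_remaining": credit_balance - credit_applied,
    }


lines = [
    InvoiceLine("OpenStack CPU", Decimal("120.00")),
    InvoiceLine("OpenStack GPUA100", Decimal("480.50")),
]
invoice = build_invoice(lines, credit_balance=Decimal("500.00"))
print(invoice["amount_due"])  # 100.50
```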

Continuing development

This view into NERC’s infrastructure highlights the challenges, and the solutions, involved in meeting a seemingly innocuous billing requirement. More challenges will arise as NERC continues to grow its capabilities. The aforementioned ESI bare metal cloud is being readied; further down the road, NERC may make new types of resources available in the cloud, such as FPGAs or infrastructure suitable for the wireless edge.

There’s also the question of infrastructure where metrics are not collected, for reasons ranging from policy (a requirement not to collect data identifying students) to capability (infrastructure that is disconnected and cannot report metrics). All of these will require NERC to evaluate and update its billing practices so that usage charges match maintenance costs, while also working with engineers to ensure that the data exists to charge users accurately.

A vast number of moving pieces go into this billing stack, but with that complexity comes the opportunity to work on any or all of the technology involved and potentially break new ground in metering and billing for the entire cloud industry. I’ve included links throughout this article so you can investigate further if you’re curious and potentially get involved if you feel the call!