The research uses for data could be endless, but without meeting stringent privacy requirements, some of the most promising analyses may never begin.

“Data is the new oil” is a shorthand generally credited to UK mathematician Clive Humby. The saying got considerable play when “Big Data” was the latest catchphrase around a decade ago. As some were quick to point out, Humby’s complete quotation notes that, like oil, data needs to be refined before we can actually use it.

Today, we see data refinement in the form of large language models (LLMs) and other innovations. At the same time, there’s a growing awareness of the liabilities that source data can bring to organizations, including legal action, reputational risk, and regulatory scrutiny.

Over the past few years, Red Hat Research and academic partners have been involved with projects exploring a variety of security and privacy questions related to data, including secure multiparty computation, differential privacy, confidential computing techniques (including both trusted execution environments and fully homomorphic encryption), and digital sovereignty. This article reviews these technologies and provides some updates.

Secure multiparty computation

Secure multiparty computation (MPC) is a cryptographic protocol that allows multiple parties to jointly compute a function over their inputs without revealing their private data to each other. It is a technique for secure distributed computing that enables parties to collaboratively analyze data without compromising its confidentiality or privacy.

MPC is based on the idea of secret sharing, which involves dividing a secret into multiple shares and distributing them among the parties. Each party has a share of the secret, but no single party has enough information to reconstruct the secret on its own. This ensures that the parties cannot learn each other’s private data, even if some of them are colluding with each other. Essentially, MPC replaces a trusted third party with a cryptographic protocol.

A concrete past example of using this technique comes from research led by Boston University’s Azer Bestavros. It used payroll data from Boston-area companies that was securely collected and redistributed for wage-gap analysis without any company having access to the dataset as a whole. (See also “Conclave: secure multiparty computation on big data” and “Role-based ecosystem for the design, development, and deployment of secure multiparty data analytics applications.”) Work has also begun in the Red Hat Collaboratory at Boston University on developing a unikernel implementation of Secrecy, a relational MPC framework for privacy-preserving collaborative analytics as a service.

Differential privacy

Data from healthcare records can be a considerable boon for scientific research. However, patient data has enormous privacy implications. Even if an organization using the data for research is generally considered trustworthy, data leaks happen all the time.

The obvious solution is to anonymize the data. But this turns out to be surprisingly hard.

The obvious solution is to anonymize the data. But this turns out to be surprisingly hard. It’s not always clear what can be used to identify someone and what can’t—especially once you start correlating with other data sources, including public ones. A widely published story from the 1990s tells how Latanya Sweeney, an MIT graduate student, was able to identify a supposedly anonymized healthcare record as belonging to then Massachusetts governor William Weld after he collapsed at a local event merely by correlating it with voter records.

Organizations like the US Census have long had to deal with the challenges of publishing large numbers of tables that cut up data in many different ways. Over time, there’s been a great deal of research into the topic, which has led to the creation of various guidelines for working with data in this manner. One of the problems with traditional anonymization methods is that it’s often not well understood how successful they are at actually protecting privacy. Techniques that collectively fall under the umbrella of statistical disclosure control are often based on intuition and empirical observation.

However, in the 2006 paper “Calibrating noise to sensitivity in private data analysis,” Cynthia Dwork, Frank McSherry, Kobbi Nissim, and Adam D. Smith provided a mathematical definition for the privacy loss associated with any data release drawn from a statistical database. This approach brought more rigor to the process of preserving privacy in statistical databases. It’s called differential privacy (specifically ε-differential privacy). A differential privacy algorithm injects random data into a data set in a mathematically rigorous way to protect individual privacy.

Because the data is “fuzzed,” to the extent that any given response could instead have plausibly been any other valid response, the results may not be quite as accurate as the raw data, depending upon the technique used. But other research has shown that it’s possible to produce very accurate statistics from a database while still ensuring high levels of privacy.

Differential privacy remains an area of active research. However, the technique is already in use: the Census Bureau used differential privacy to protect the results of the 2020 census.

Trusted execution environments

Other techniques focus more on cloud computing, where data is inherently out of an organization’s direct control. Cryptographic techniques that protect stored and in-transit data are well established. But how about data that is currently being used?

One technique is Trusted Execution Environments (TEE). This form of confidential computing uses secure enclaves within a processor that provide isolation and protection for sensitive data and computations. TEEs are typically implemented using hardware-based security features to create a secure environment isolated from the rest of the system. This isolation ensures that sensitive data and computations cannot be accessed or tampered with, even if the rest of the system is compromised.

A great deal of work in this area is taking place in the Confidential Computing Consortium (CCC), a project community of the Linux Foundation. There are currently seven projects under the CCC umbrella.

Fully homomorphic encryption

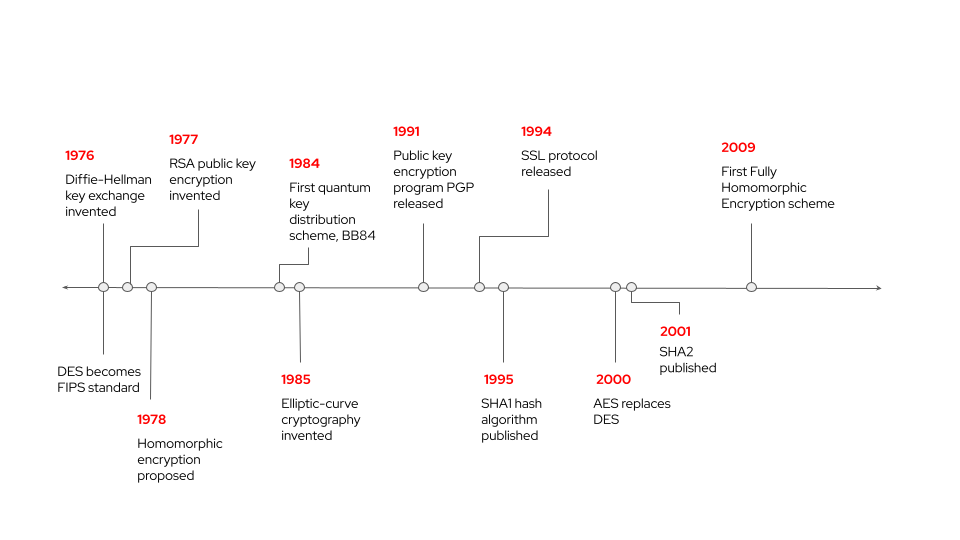

A second confidential computing technique is fully homomorphic encryption (FHE). It lets a third party perform complicated data processing without being able to see the data set itself. Homomorphic encryption is essentially a technique to extend public-key cryptography and was in fact first mentioned shortly after the RSA cryptosystem was initially invented.

A big challenge for FHE is that the technique is very expensive computationally and is mostly not practical yet. However, if realized, it would provide an additional level of protection against data leaks when using a public cloud or other service providers to analyze data sets.

One approach to this challenge has been investigated in a project at the Red Hat Collaboratory: using FPGAs to accelerate operations (see Rashmi Agrawal and Lily Sturmann, “Preserving privacy in the open cloud: speeding up homomorphic encryption with custom hardware,” in RHRQ 4:3). CPUs aren’t good at exploiting the parallelism of FHE algorithms. GPUs are better but their floating-point units end up sitting idle. Neither can effectively meet the high memory bandwidth requirements of FHE workloads. FPGAs, on the other hand, map well to the requirements while being cheaper and more future-proof than custom ASICs.

The project’s long-term vision is to design a practical, efficient hardware accelerator supporting all four of the homomorphic encryption schemes under consideration by the International Organization for Standardization, and then deploy it in the open cloud to enable privacy-preserving computing systems in the Red Hat Collaboratory.

Digital sovereignty

The final topic isn’t a specific technology—though technology will play into some solutions—so much as it’s about geopolitical trends and concerns.

Even allies may adopt security and data regulations that are unfriendly to certain business models.

Shifting global regulations are creating a requirement for workload placement with greater control over security and data as well as avoiding dependencies on organizations in countries that are hostile to varying degrees or may become so. Even allies may adopt regulations that are unfriendly to certain business models. The Capgemini Research Institute notes that the issues “are not new and have been gaining impetus over the past few years. However, it is a subject that is now under increasing scrutiny because of rising geopolitical tensions; changing data and privacy laws in different countries; the dominant role of cloud players concentrated in a few regions; and the lessons learned through the pandemic.”

The pursuit of digital sovereignty is a complex and evolving issue, and there is no one-size-fits-all approach. Countries will need to find their own unique path to achieving digital sovereignty, taking into account their own specific circumstances and priorities. There will certainly be domino effects. As it gains momentum, the effects on hyperscalers, local partnering requirements, and converged observability across clouds will all be open questions, as will the trajectory of regulatory regimes.

Further reading

You can explore all Red Hat Research projects related to privacy in the database on our website (research.redhat.com). RHRQ explored this issue in past articles including “How do we reconcile privacy with machine learning?” (RHRQ 1:2, 2019) and “Voyage into the open dataverse: an interview with James Honaker and Mercè Crosas” (RHRQ 2:2 2020).