Fully homomorphic encryption could be a great solution for secure data sharing, if only it weren’t so slow. Could an FPGA accelerator be the answer?

Protecting sensitive data from being seen or tampered with, either while it is stored or while it is in transit, has been standard for some time. This practice is especially relevant in cloud computing, which allows access to far more computing power and scalable resources, but involves infrastructure the user does not control, may not be able to access, and therefore may not fully trust.

In recent years, more emphasis has also been placed on protecting data while in use. When this protection includes protection from being seen, it is often referred to as confidential computing. Today, in practice, most confidential computing is done in Trusted Execution Environments (TEEs), in which the CPU enforces the isolation of a region of memory for the sensitive computation. In this memory region only, the data is decrypted for use. Fully Homomorphic Encryption (FHE), by contrast, is a method of confidential computing that goes beyond TEEs and allows for computation on ciphertext while all data remains fully encrypted. FHE has the potential to open a range of new possibilities for confidential computing in medical, financial, marketing, research, and other fields. By enabling computation on encrypted data, FHE encourages people to share their private data without fear of misuse and allows computation outsourcing with fewer security risks.

However, despite its promise, it is not widely used today. There remains a large performance gap between operating on encrypted data and operating on its plaintext form. A calculation that would take one second to perform using plaintext would take more than 11 days to perform using current homomorphic encryption libraries like HElib or PALISADE. This slowdown of about one million times is an unacceptable tradeoff for businesses that would otherwise be interested in FHE and remains a stubborn blocker for practical applications of this discovery.

A small research team at Boston University (BU) consisting of graduate student Rashmi Agrawal and Professor Ajay Joshi (along with collaborators from MIT) is working to overcome this limitation and enable privacy-preserving cloud computing using homomorphic encryption. By designing custom hardware accelerators for FHE, they have shown that it is possible to sidestep the biggest obstacle in this field: the performance gap.

An exciting and challenging history

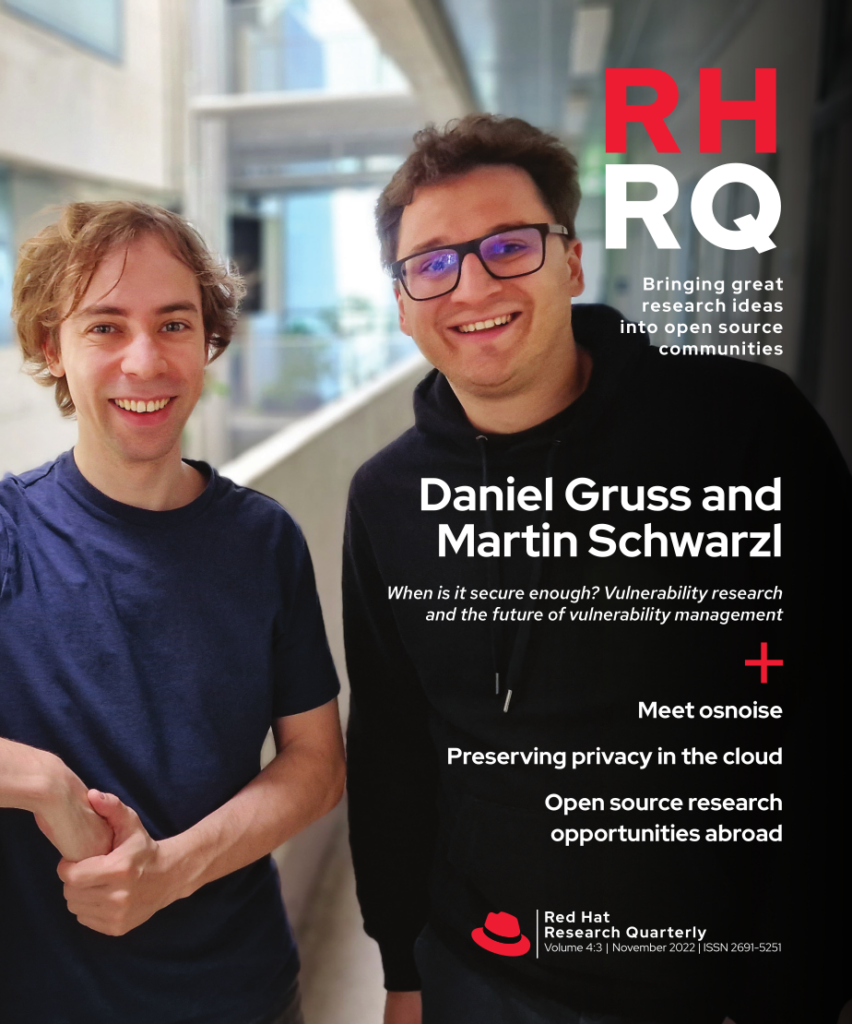

Homomorphic encryption (HE) is a broad term that describes cryptographic advancements to allow computation on encrypted data. The history of homomorphic encryption, of which FHE is the most powerful subset, spans almost a half-century. The idea of HE was proposed in 1978 by Ronald L. Rivest, Len Adleman, and Michael L. Dertouzos of MIT, two of whom are also known as co-inventors of the RSA algorithm. Over the following decades, as new encryption schemes were developed, they were tested for their ability to support HE operations, but they supported them only partially at best. For example, the Paillier cryptosystem supports only additive homomorphism, while Elgamal and RSA encryption schemes support only multiplicative homomorphism. In other words, only addition operations or only multiplication operations can be performed fully encrypted, which limits their real-world applications.

A breakthrough came in 2009 when a graduate student named Craig Gentry described the first feasible construction of an HE scheme that enables both addition and multiplication operations on encrypted data, using lattice-based cryptography. Gentry initially described a scheme limited to evaluating one multiplication and a few addition operations, which would be classified as a Somewhat Homomorphic Encryption (SHE) scheme. This limitation is imposed by the noise in ciphertext generated using a lattice-based encryption scheme. The noise within the ciphertext grows as each addition and multiplication operation is performed on it. When this noise grows beyond a critical threshold level, the result of the homomorphic computation is destroyed and cannot be recovered after decryption.

In this same work, Gentry also showed how a technique called bootstrapping could overcome the noise accumulation problem. Bootstrapping helps reset the noise to a lower level so that further computations can be performed. Using this technique with his initial SHE scheme, Gentry described the first Fully Homomorphic Encryption scheme. However, bootstrapping has high latency, and this high latency remains one of the key bottlenecks in the adoption of HE.

HE vs. other techniques

As the importance of data privacy has moved to the forefront in recent years, various privacy-preserving techniques have gained prominence: multiparty computation, zero-knowledge proofs, differential privacy, and trusted execution environments. The use cases for each of these differ substantially and contrast with HE.

Mercè Crosas and James Honaker discuss their approach to multiparty computing and differential privacy in the article “Voyage into the open Dataverse,” RHRQ 2:2, August 2020.

Unlike multiparty computation and zero-knowledge proofs (both cryptographic solutions), HE requires no ongoing interactions between the parties providing data or computation. In HE, a client encrypts the data, sends it to the cloud for processing, and, after that point, can remain offline while the cloud server carries out computations. No key exchange between the client and the cloud is necessary. Once the computations are finished, the cloud can send the encrypted data back to the client. In contrast, both multiparty computation and zero-knowledge proof techniques require the client to actively participate in the process of computation and remain online for the entire duration of the computation.

With trusted execution environments like Intel SGX/TDX and AMD SEV/SNP, the data needs to be decrypted within the secure enclave before it is used for computation, requiring the data owner to share the decryption key with the server in most cases. If the cloud is not completely secure, maintaining the security of the decryption key itself could be challenging. Additionally, any attacker that can exploit the specific trusted execution environment to read the isolated memory area would also be able to read the data. However, with HE, the data owner does not need to share the secret keys with the cloud server, and any read of the memory still reveals only encrypted data.

In contrast, differential privacy is a non-cryptographic solution to data privacy. It adds random noise to the private dataset so as to conceal the actual values. This can preserve the privacy of individual data points in a mathematically rigorous way when working with aggregated data, such as US Census data. While FHE enables computation outsourcing to a third-party cloud, differential privacy is typically adopted for federated machine learning operations.

How does HE work?

In 2009, when FHE became feasible, the option to compute encrypted data with FHE was available only for Boolean operations, which describe logic gates, the building blocks of circuits. All computations were first expressed as Booleans before they could be evaluated homomorphically. Translating mathematical operations into Booleans was known as mapping to a homomorphic circuit. However, with the latest FHE schemes, conversion to a homomorphic circuit involves translating the plaintext compute model to a combination of primitive FHE operations such as addition and multiplication. In addition, depending on the specific FHE scheme, the data involved must first be encoded as polynomials.

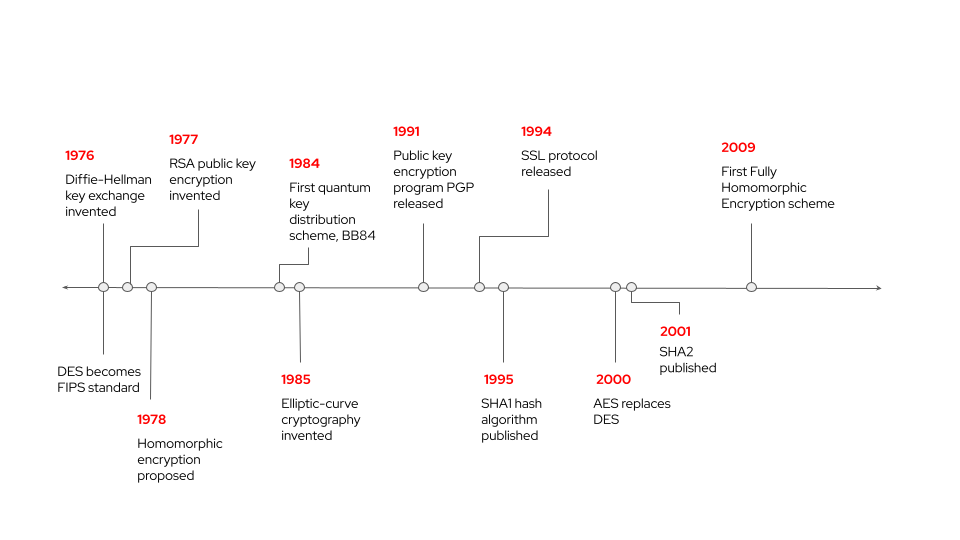

There is a hierarchy that describes HE schemes and their capabilities. The least capable scheme is Partially Homomorphic Encryption, which supports the evaluation of either addition or multiplication, but not both. (While division and subtraction are possible indirectly in HE, they are more complex, and most descriptions of HE schemes speak in terms of addition and multiplication operations.) SHE and Leveled FHE schemes are more capable intermediate categories and can compute many additions and a small, predetermined number of multiplications on ciphertexts.

FHE schemes, considered the “holy grail” of HE, allow the evaluation of multiple types of operations with no maximum on multiplication operations. In other words, they enable an infinite number of calculations on ciphertext while still producing a valid result that can be decrypted. For most existing HE schemes, the cap on multiplication operations is the main practical limitation in performing computations over encrypted data. Recall that the cap can be lifted by bootstrapping, but this introduces a very high level of latency that is unacceptable in most real-world scenarios.

Hardware accelerators

Only by narrowing the performance gap will wide-scale adoption of FHE be feasible. Existing research in the field of FHE tries to reduce the execution time of operating on encrypted data by either optimizing algorithms or the implementations themselves. To this end, several FHE acceleration efforts are based on CPUs, GPUs, and ASICs. The CPUs and GPUs have shown they cannot bridge the performance gap, and ASIC solutions are expensive, with large on-chip memories.

Unlike multiparty computation and zero-knowledge proofs, HE requires no ongoing interactions between the parties providing data or computation.

This is why Prof. Joshi’s team at BU proposed acceleration based on field programmable gate arrays (FPGAs) as one of the viable solutions that could provide practical performance for FHE while being affordable. Surprisingly, there was limited work on the acceleration of FHE schemes on FPGAs prior to their efforts, despite the obvious gap in the context of the problem space. FHE workloads are inherently parallel, but existing commodity platforms like CPUs are not good at exploiting this parallelism. GPUs are up to the challenge of exploiting the existing parallelism in FHE workloads, but they have massive amounts of floating-point compute units that remain idle since FHE operations are integer-only computations. Moreover, neither CPUs nor GPUs provide native support for modular arithmetic operations, which is the main compute bottleneck in FHE. Lastly, both of these platforms fail to meet the high memory bandwidth requirement of the FHE workloads.

On the other hand, while ASIC solutions will always have higher performance than FPGA solutions, ASIC solutions are a lot more expensive. In addition, ASIC solutions are not futureproof and will require a non-trivial amount of redesign as the FHE algorithms evolve in the future.

The performance, cost, and flexibility of FPGAs suggested to Prof. Joshi’s team that FPGAs would provide a sweet spot between CPUs/GPUs on one end and ASICs on the other for their FHE acceleration work. They determined that an FPGA solution could significantly outperform both CPU and GPU implementations of FHE. Moreover, FPGAs are highly accessible even today to the general public and can be deployed immediately for under a dollar per hour. By enabling competitive levels of performance with the same availability as a high-end GPU, FPGAs are the most viable option for near-term hardware FHE acceleration in the cloud.

Xilinx’s Alveo boards and Intel’s stratix FPGAs are already available in some cloud environments today.

Impressive speedup

In their research, the team’s first step was to choose an appropriate FHE scheme based on their underlying application of logistic regression (LR) model training, specifically an image-classification-based healthcare application. They chose the CKKS scheme (an acronym of the creators’ names) because it supports operations on floating-point data (this data is translated into polynomials requiring integer-only computations once encrypted). Using this scheme, they designed and implemented various primitive homomorphic operations for their specific application, including addition, multiplication, rotation, conjugation, key switching, and bootstrapping. All these operations were customized for their U280 FPGA implementation so that they incur low hardware-resource utilization and low latency.

FPGAs are the most viable option for near-term hardware FHE acceleration in the cloud.

The next task was mapping the actual LR application using these building blocks. For FHE-based computing, there are two critical steps to follow. First, they had to figure out a way to efficiently transform the dataset into ciphertexts according to the rules of the CKKS scheme, and second, they had to map the actual equation into what is known as the homomorphic circuit. They had to carefully consider how much on-chip data their FPGA could handle and concluded that they could pack their entire training dataset within 84 ciphertexts.

Once they were able to map the LR equations to the homomorphic circuit, they saw that it took on average 0.1 seconds to perform an iteration of training with the LR model, which is about 458 times faster than existing CPU implementations and about nine times faster than the existing GPU implementations of this same set of homomorphic operations. The FHE accelerator takes 3.09 seconds to train a logistic regression model with 30 iterations. The same training takes about 0.12 seconds on plaintext data using a CPU. Effectively, the slowdown is 26x—much less than other current HE libraries not using an accelerator, where the slowdown can be around 1,000,000x.

Future possibilities in the cloud

For the use of an FHE FPGA accelerator in the cloud, the host CPU on the cloud could offload the FHE computation, in the form of a binary file, and the encrypted data to the accelerator. The accelerator could then perform homomorphic computations by running the binary and, once the computations are complete, send the results back to the host CPU, which would return them to the client. This would be possible on any cloud hosting the appropriate FPGA shell.

From the data owner’s point of view, taking advantage of FHE in the cloud with an FPGA accelerator would be a two-step process. First, an expert would need to create the mapping from the plaintext application to the correct homomorphic circuits. Each application should use its own customized circuits to retain the performance benefits of the hardware accelerator. Second, once the circuits are ready, the data owner would encode the data into polynomials, encrypt it using existing libraries such as SEAL or PALISADE that support the CKKS FHE scheme, and upload it to the cloud. The length of time the computation would need in the cloud would depend on the application and dataset, and would need to account for the slowdown of the homomorphic computation. Once the data owner receives the encrypted results from the cloud, they would be able to perform decryption and decoding to recover the results in plaintext format.

Use cases and ongoing research

FHE is a closer fit for some types of problems than others due to HE’s dependence on mapping to polynomials and circuits in a specific manner. For example, FHE is a good fit for logistic regression machine learning models, whereas it is less of a good fit for machine learning models used for natural language processing.

Using Prof. Joshi’s team’s work, the ability of FHE to perform processing on encrypted data with a less costly slowdown has the potential to solve major business challenges faced by companies across all industries. Some of the examples include:

- Private data analytics for marketing, targeted advertising, rare disease diagnosis, genome analysis, drug research, and many more

- Regulatory compliance, such as GDPR (EU General Data Protection Regulation) and HIPAA (US Health Insurance Portability and Accountability Act)

- Private information retrieval through secure database queries

- Secure supply chain management

- Secure bioinformation authentication

The team’s work is part of the project “Privacy-preserving cloud computing using homomorphic encryption,” which was a recipient of the 2021 Red Hat Collaboratory Research Incubation Award. The team successfully deployed the hardware accelerator on an FPGA node in the Open Cloud Testbed in June 2022, and they plan to demonstrate its use to perform neural-network-based classification of encrypted image data by the end of this year.