Why open source hardware will play a key role in emerging technologies research

Microarchitectures based on the RISC-V instruction set architecture (ISA) are an important part of all field-programmable gate array (FPGA)-based research projects in the Red Hat Collaboratory at Boston University. Having CPU cores in FPGA designs matters: partitioning workloads between special-purpose FPGA circuits and these general-purpose cores yields better functionality and flexibility, lower power consumption, and faster development turnaround than building entire designs from special-purpose FPGA circuits alone. RISC-V is our choice of ISA for these CPU cores because the ISA is open source, the toolchain is stable, and there is support for custom extensions.

Foundations of architecture

Before we get into detail, however, let’s review four fundamental aspects of the computer architecture ecosystem:

- Microarchitectures

- Toolchains

- Runtime environments

- ISAs

A microarchitecture is the organization of the actual hardware of a processor. It specifies the hardware blocks used, their parameters and sizes, and how these blocks connect to form the processor. Microarchitectures are typically developed as code using hardware description languages (HDLs). The resulting design can either be instantiated directly on an FPGA using chip resources, as a so-called softcore, or be fabricated as a physical CPU chip.

A toolchain is the software needed to compile code so that it can be executed on a processor. It reduces development complexity by allowing code to be written in high-level languages while still giving direct control over hardware details. The code being compiled can be user code, the system runtime, or both.

A runtime environment defines the execution model for a given piece of code by constraining how software and hardware interact. This environment can be as simple as code compiled against an embedded C library such as Newlib so that it can interface with hardware directly, effectively implementing a microcontroller unit (MCU). It can also be as complicated as an operating system (OS) with process scheduling. In that case, hardware is accessed indirectly through the OS, so the code must be linked against OS libraries when it is built.
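As a rough illustration of the bare-metal end of this spectrum, the sketch below shows C code driving a device through memory-mapped registers. The register layout (`REG_CTRL`, `REG_STATUS`, `REG_DATA`), the bit positions, and the function names are hypothetical and not taken from any particular softcore or peripheral. The base pointer is passed in as a parameter so the same code can also be exercised on a host against an ordinary array.

```c
#include <stdint.h>

/* Hypothetical register layout of a simple peripheral (word offsets). */
enum { REG_CTRL = 0, REG_STATUS = 1, REG_DATA = 2 };

/* Hypothetical bit assignments within those registers. */
#define CTRL_ENABLE  (1u << 0)
#define STATUS_READY (1u << 0)

/* Set the enable bit without disturbing the other control bits. */
void dev_enable(volatile uint32_t *base)
{
    base[REG_CTRL] |= CTRL_ENABLE;
}

/* Write one word if the device reports ready.
   Returns 0 on success, -1 if the device is busy. */
int dev_try_write(volatile uint32_t *base, uint32_t word)
{
    if ((base[REG_STATUS] & STATUS_READY) == 0) {
        return -1;
    }
    base[REG_DATA] = word;
    return 0;
}
```

On a real target, `base` would be the peripheral's address from the system memory map, cast to `volatile uint32_t *`. With an OS in between, the same device would instead be reached through a driver and system calls.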

Finally, an ISA is the glue that holds the microarchitecture and toolchain together. It is an abstraction that sits at the boundary of hardware and software and provides guarantees to both—provided, of course, that both the toolchain and microarchitecture are compliant with the ISA. To microarchitectures, the ISA guarantees that any binary built by any compliant toolchain can be correctly executed. In the other direction, the ISA guarantees that any compliant microarchitecture will have the necessary hardware required to run any binary that the toolchain generates.

Why RISC-V?

So how did we end up choosing the RISC-V ISA and its ecosystem? The first requirement was that the ISA needed to be open source. An open source ISA is essential for customizing the softcore microarchitecture based on target workloads and constraints. This ruled out proprietary ISAs such as ARM and x86.

The second requirement was that the ISA needed a full toolchain and a modern runtime environment, both in active development. This excludes architectures whose tooling and runtime are outdated, such as SuperH SH-2 and SH-3.

Finally, the third requirement was that the ISA should make it easy for software running on the core to use microarchitecture extensions, for example, through custom instructions. Given these requirements, RISC-V was the only modern ISA that met all three, which made it our default choice.

To learn more about the history of RISC-V, read “Fostering open innovation in hardware” in RHRQ issue 2:2 (August 2020).

The RISC-V toolchain is backed by a large, active community and is complete as a result. That toolchain, available as part of the community Linux distribution Fedora or in the Fedora-derived Extra Packages for Enterprise Linux (EPEL), is feature rich and easy to modify based on requirements. On the microarchitecture side, several open source RISC-V softcores are available, with a wide range of supported functionality and resource usage. This allows RISC-V compliant softcores to be instantiated on almost any FPGA, from large datacenter-class devices with millions of look-up tables (LUTs) and thousands of digital signal processing (DSP) blocks to small edge-class FPGAs with a few thousand LUTs.

The RISC-V ISA has support for easily and securely extending the ISA, which effectively allows us to interface with additional IP blocks. These custom extensions enable us to streamline the division of tasks between softcores and custom hardware blocks, and they facilitate the complex and time-sensitive interactions that need to happen between them. Since the microarchitecture and custom hardware blocks are both reconfigurable, the exact division of tasks can be varied substantially and tuned for specific designs or chip/board specifications.
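To make the idea of a custom extension concrete, here is a minimal sketch of how C code might invoke one. Everything specific in it is an assumption for illustration: the operation itself (a rotate of the XOR of two operands), the function names `mix` and `mix_model`, the `HAVE_MIX_EXTENSION` build macro, and the choice of `funct3` and `funct7` values. The only parts taken from the RISC-V ecosystem are the custom-0 opcode space (`0x0b`), which the ISA reserves for custom instructions, and the GNU assembler's `.insn` directive, which emits an instruction from its raw fields. The sketch also assumes a 32-bit core.

```c
#include <stdint.h>

/* Software model of the hypothetical operation:
   rotate (a XOR b) left by 5 bits. */
uint32_t mix_model(uint32_t a, uint32_t b)
{
    uint32_t x = a ^ b;
    return (x << 5) | (x >> 27);
}

/* Wrapper: a single custom instruction on a softcore that implements the
   (hypothetical) extension, and the software model everywhere else. */
uint32_t mix(uint32_t a, uint32_t b)
{
#if defined(__riscv) && defined(HAVE_MIX_EXTENSION)
    uint32_t result;
    /* R-type instruction in the custom-0 opcode space (0x0b).
       funct3 = 0 and funct7 = 0 are assumed values for this sketch. */
    __asm__ volatile (".insn r 0x0b, 0, 0, %0, %1, %2"
                      : "=r"(result)
                      : "r"(a), "r"(b));
    return result;
#else
    return mix_model(a, b);
#endif
}
```

Keeping a software model next to the wrapper means the same source builds for a stock core or a host machine, which is convenient when the split between softcore and custom hardware is still being tuned.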

Why softcores?

As noted previously, softcores are CPU cores that are coded using HDLs and instantiated on FPGAs using chip resources such as LUTs, memory blocks, DSPs, and interconnects. Because softcores vary in features and size, multiple softcores can be instantiated on a single FPGA fabric, limited only by the total available FPGA resources.

FPGA-based softcores cannot achieve performance comparable with application-specific integrated circuits (ASICs) because of limitations imposed by the FPGA fabric, such as a lower operating frequency, reduced complexity of the microarchitecture due to limited chip resources, and older process technologies than the current state of the art (used in fabricated ASICs). However, these limitations are significant only for performance-critical tasks, such as application data plane operations. Our goal is to use softcores for operations that are not performance-critical, such as those on the application control plane.

Using softcores in this manner provides two key benefits. The first benefit is that developing, compiling, and maintaining high-level language code for softcores is typically easier than writing code for custom compute hardware using an HDL. If any functionality changes, we can easily modify the source code, generate the new binary, and load it onto the FPGA. That turnaround takes on the order of seconds to minutes, as opposed to hours to days for hardware generation.

The second benefit is that softcores take, on average, less of an FPGA’s (limited) resources to do tasks. This is due to hardware reuse: instead of building custom hardware for each task, softcores leverage generic compute pipelines to execute multiple tasks sequentially. All softcore tasks reuse the same pipelines; thus, adding tasks incurs no additional resource costs beyond the memory needed to store new instructions. As a result, we free up resources that would otherwise have been used to implement custom hardware for non-performance-critical parts of the workload. These resources can then be used to scale up existing performance-critical hardware blocks or add more functionality.
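The reuse argument can be sketched in a few lines of C. In this hypothetical example, each control-plane task is a plain function and the softcore runs them one after another; the task names and the shared `state` array are invented for illustration. Adding a task means adding a function and a table entry, which costs instruction memory but no extra FPGA logic.

```c
#include <stddef.h>
#include <stdint.h>

/* A control-plane task is just a function operating on shared state. */
typedef void (*task_fn)(uint32_t *state);

/* Hypothetical tasks; in a pure-hardware design each one would need
   its own dedicated state machine. */
void task_refresh_counters(uint32_t *state) { state[0] += 1; }
void task_age_entries(uint32_t *state)      { state[1] += 2; }
void task_poll_link(uint32_t *state)        { state[2] += 3; }

/* Run every task once, in order, on the same core and pipeline. */
void run_round(const task_fn *tasks, size_t count, uint32_t *state)
{
    for (size_t i = 0; i < count; i++) {
        tasks[i](state);
    }
}
```

The trade-off is the one described above: the tasks share one pipeline, so they run sequentially rather than in parallel.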

Here’s an example of how softcores can benefit FPGA design. Consider a host machine offloading match-action tables to an FPGA-based Smart Network Interface Card (SmartNIC). In this use case, the FPGA would have to implement both data plane components—such as pipelines, buffers, and crossbars—and the control plane for setting up the different tables.

However, not everything put on the FPGA here requires highly specialized hardware to meet performance constraints. Specifically, we don’t need to build custom state machines to maintain table entries. By replacing these state machines with a CPU core, we retain the same functionality at some additional cost in time, and we will likely reduce resource usage. Any resources freed up this way can be used to scale up the data plane, potentially resulting in more performance for the parts of the offload that actually need it. Moreover, we could make minor changes to existing software-defined networking (SDN) code and then compile it for the softcore, saving substantial development effort.
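As a minimal sketch of what such table maintenance could look like in software, the C code below manages a small exact-match table. The table size, the entry layout, and the function names are assumptions made for this example; they are not taken from any specific SmartNIC design or SDN code base.

```c
#include <stdbool.h>
#include <stdint.h>

#define TABLE_SIZE 8  /* hypothetical capacity */

typedef struct {
    bool     valid;
    uint32_t key;     /* match field, for example a flow identifier */
    uint32_t action;  /* opaque action code applied on a hit */
} table_entry;

typedef struct {
    table_entry entries[TABLE_SIZE];
} match_table;

/* Return the slot holding key, or -1 if the key is absent. */
int table_find(const match_table *t, uint32_t key)
{
    for (int i = 0; i < TABLE_SIZE; i++) {
        if (t->entries[i].valid && t->entries[i].key == key) {
            return i;
        }
    }
    return -1;
}

/* Insert a new entry or update an existing one.
   Returns 0 on success, -1 if the table is full. */
int table_insert(match_table *t, uint32_t key, uint32_t action)
{
    int slot = table_find(t, key);
    for (int i = 0; i < TABLE_SIZE && slot < 0; i++) {
        if (!t->entries[i].valid) {
            slot = i;  /* first free slot */
        }
    }
    if (slot < 0) {
        return -1;
    }
    t->entries[slot].valid = true;
    t->entries[slot].key = key;
    t->entries[slot].action = action;
    return 0;
}

/* Remove an entry. Returns 0 if removed, -1 if the key was absent. */
int table_remove(match_table *t, uint32_t key)
{
    int slot = table_find(t, key);
    if (slot < 0) {
        return -1;
    }
    t->entries[slot].valid = false;
    return 0;
}
```

In a real design, the softcore would also push each change to the data plane tables, for instance through memory-mapped registers or a custom instruction. That step is omitted here because it depends on the hardware interface.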

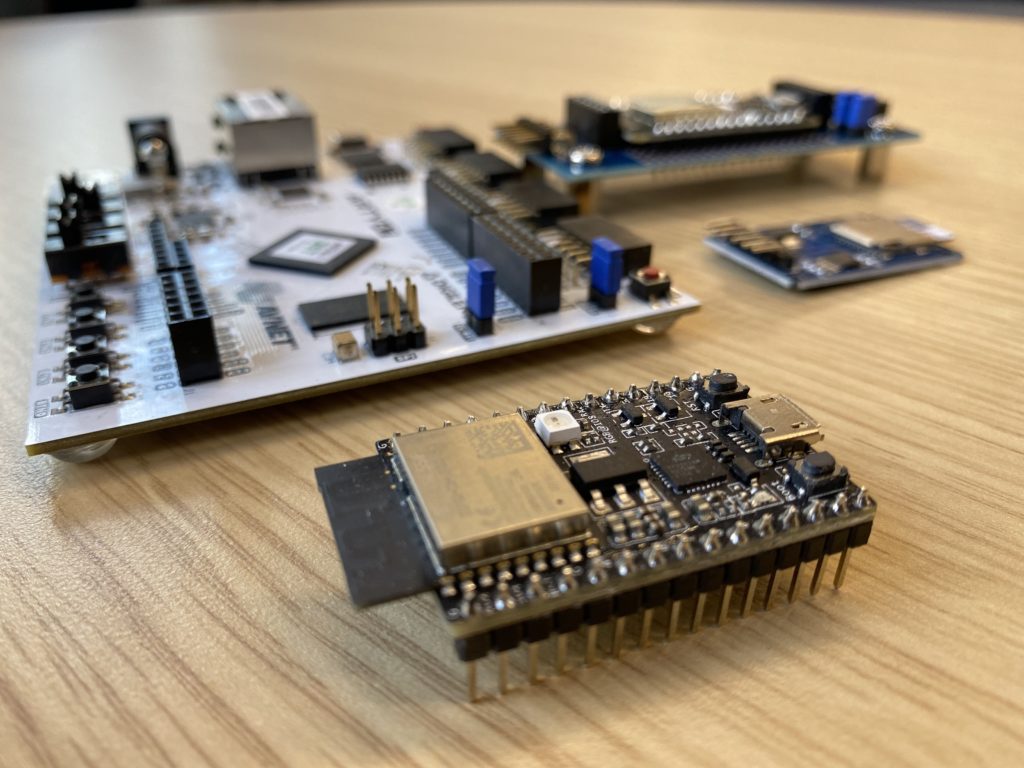

It is important to note, however, that while softcores are versatile, some functionality (such as connectivity and device control) cannot easily be handled by the FPGA itself. An example of this is a full reconfiguration of the FPGA chip. Since the entire chip configuration is erased in this process, the reconfiguration has to be done by an external device. For such tasks, there are simple, cheap MCUs available in many forms and sizes that can be easily connected to an FPGA.

Consequently, since our system would need both softcore and MCU-based CPU cores, we envision using an MCU with the same ISA as the softcore, so that the same toolchain can be used and programming the system becomes easier. An example of such an MCU is the ESP32-C3 from Espressif, which is based on the RISC-V ISA.

Overall, the combination of the RISC-V ISA and FPGAs presents exciting opportunities for research and innovation. A developer can not only improve the quality of custom hardware they generate, but also take advantage of abstractions and design methodologies that make hardware development faster and more accessible for software developers.