The first Tel Aviv Red Hat Research Day event took place on March 2nd. During this session, Dr. Ilya Kolchinsky outlined the most critical challenges faced by currently available data processing technologies, presented a new paradigm for large-scale data processing called stream processing, and discussed how this paradigm can be employed both inside and outside of Red Hat to address these challenges and bring additional value to customers.

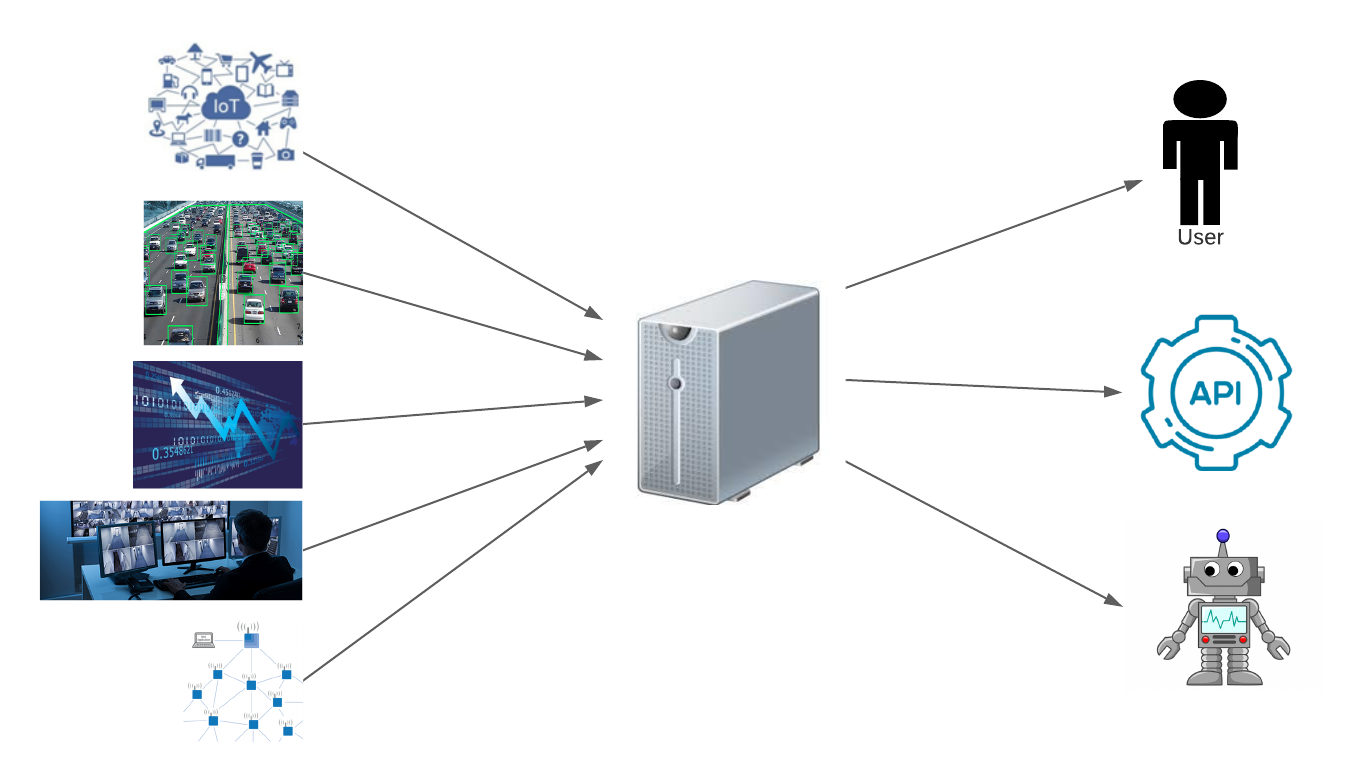

As we enter the era of Big Data, a large number of data-driven systems and applications have become an integral part of our daily lives, and this trend is accelerating dramatically. It is estimated that 1.7 MB of data is created every second for every person on Earth, for a total of over 2.5 quintillion bytes of new data every day, with the total expected to reach 163 zettabytes by 2025. In addition to the growing volume, velocity, and variety of continuously generated data, novel technological trends such as edge processing, IoT, 5G, and federated AI bring new requirements for faster processing and deeper, more computationally heavy data analysis.

However, meeting these requirements in modern applications by relying on “old school” data processing mechanisms is nearly impossible. On the one hand, the high latency and I/O overhead of traditional database systems prevent the required computation results from being available in real time. On the other hand, simply dropping the database severely limits the complexity of the supported operations, because processing resources are scarce. To overcome this situation, a new solution is needed.

Stream processing comprises a variety of methods for scalable and efficient data processing that do not rely on traditional databases for storing and processing the data. Instead, the main focus of these methods is on performing highly complex computations on high-rate data streams while only using minimal resources. This makes stream processing a perfect choice for implementing intensive data processing operations in real-time applications and on edge devices.
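To make the idea more concrete, here is a minimal sketch of one of the simplest stream processing operations: a sliding-window average computed on the fly, without storing the stream in a database. It is not taken from the talk. The function name `sliding_mean`, the window size, and the sample readings are assumptions chosen purely for illustration.

```python
from collections import deque
from typing import Iterable, Iterator


def sliding_mean(stream: Iterable[float], window: int) -> Iterator[float]:
    """Yield the mean of the most recent `window` values after each event.

    Hypothetical helper for illustration only. Memory use is bounded by
    `window`, no matter how long the stream runs.
    """
    if window <= 0:
        raise ValueError("window must be positive")
    buf = deque()   # holds at most `window` recent values
    total = 0.0     # running sum of the values currently in `buf`
    for value in stream:
        buf.append(value)
        total += value
        if len(buf) > window:
            # Evict the oldest value so the state never grows.
            total -= buf.popleft()
        yield total / len(buf)


# Made-up sensor readings arriving one at a time.
readings = iter([10.0, 20.0, 30.0, 40.0])
for mean in sliding_mean(readings, window=2):
    print(mean)  # prints 10.0, 15.0, 25.0, 35.0 on separate lines
```

Each event is processed once as it arrives, and only the last few values are kept in memory. Real stream processing engines apply the same principle to far more complex computations.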

During the talk, we identified a number of tools and technologies closely related to Red Hat products that could greatly benefit from integrating stream processing solutions into their core, potentially achieving an orders-of-magnitude performance boost. Examples include Kubernetes, Ceph, Elasticsearch, and Prometheus. We believe that this list could be extended, and we look forward to collaborating on these and many other initiatives.
