Enhancements to the education project highlight how research work on OPE drives advancements for many kinds of multitenant environments.

The Open Education Project (OPE) continues to develop solutions for optimizing GPU resource usage in a multitenant environment. OPE, a project of Red Hat Collaboratory at Boston University, has long been a pioneer in making high-quality, open source education accessible to all. The project was initiated by BU professor Jonathan Appavoo, in partnership with Red Hat Research, to break down resource barriers by making scalable, cost-effective infrastructure available for education.

Continued efforts to improve OPE don’t benefit just students and faculty, however. Advancements in telemetry, observability, and GPU management also have potential applications for non-class use cases, such as academic research and multitenant environments more generally. Among the most exciting developments is the added ability to manage requests for processing resources. The scheduler in multitenant environments is often a black box hidden from most individual users, which means jobs won’t necessarily be scheduled to keep down costs, maintain performance, or ensure that all users have fair access. By contrast, OPE-developed GPU scheduling builds in transparency and puts more control in the hands of users.

Launching GPU-accelerated learning

Over time, OPE has grown from an experimental class platform into a robust service supporting thousands of students. One of its biggest milestones this year has been the migration from a shared teaching and research environment to a dedicated, independent Red Hat OpenShift academic cluster on the New England Research Cloud (NERC), more widely known as the production environment of the Mass Open Cloud (MOC). This move provides the project for a sustainable, large-scale educational model.

This semester, OPE is supporting one of its most advanced courses to date: a graduate-level course on GPU programming, Programming Massively Parallel Multiprocessors and Heterogeneous Systems (Understanding and programming the devices powering AI), taught by Professor Appavoo. The course introduces students to the world of GPU programming. Using NVIDIA’s CUDA programming model, students learn the fundamentals of parallel programming, GPU architecture, and high-performance computing on heterogeneous systems. The course culminates in an exploration of student’s research and career interests through a group project that tackles a challenge they are interested in. With interests that range from cryptographic systems to kernel engineering to RAG, students see how they can use CUDA programming to imagine more effective, performant systems.

To support this course, the team prepared a Jupyter notebook image embedded with CUDA tools. The image was specifically aligned with the NVIDIA GPU driver versions available on the academic cluster. This eliminates one of the most common pain points in computer science and AI courses—setup and installation headaches—and lets students begin experimenting with CUDA kernels, performance tuning, and architectural analysis from day one.

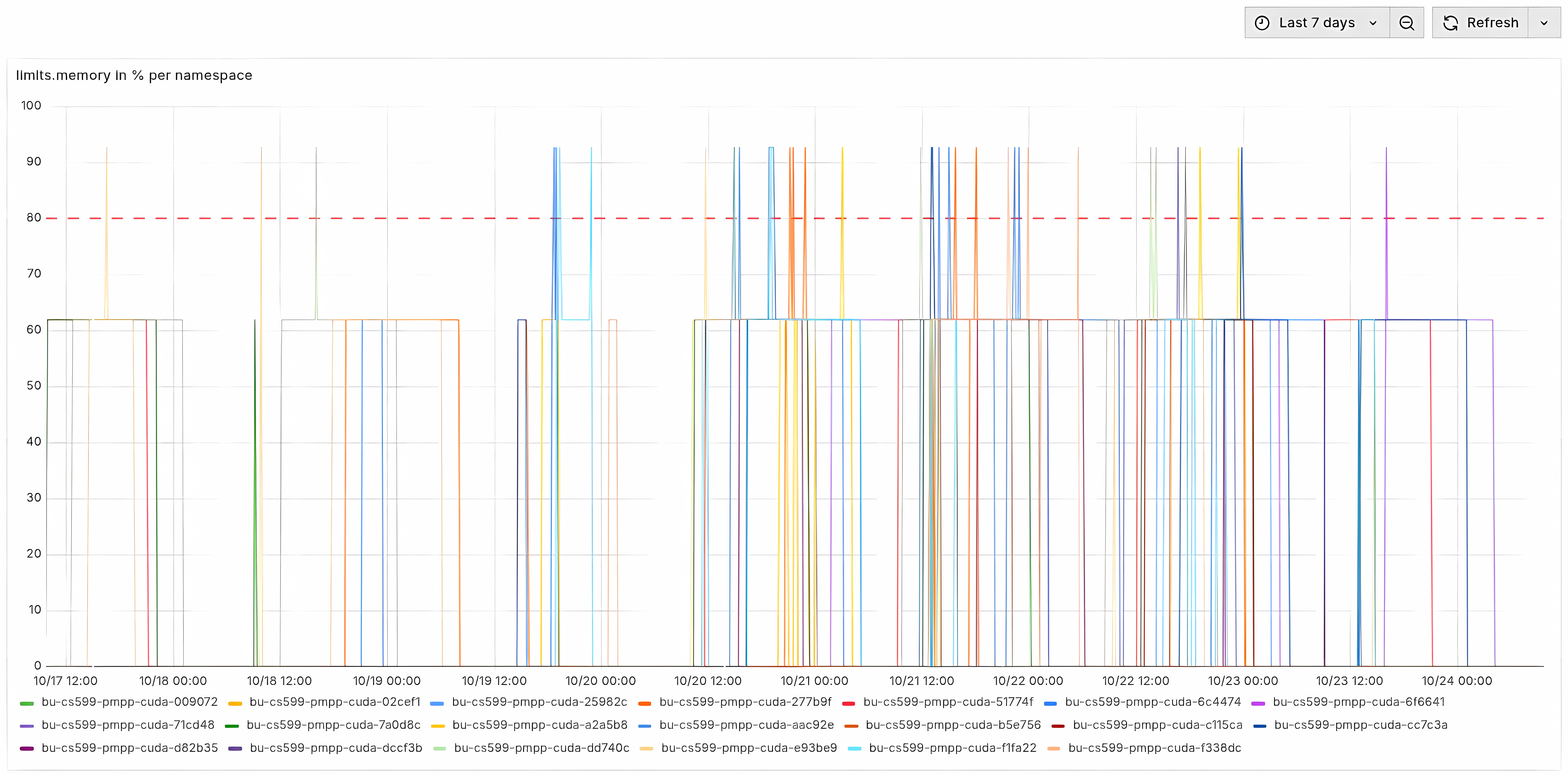

GPU metrics observability

Managing GPUs in an academic setting requires not just access, but also visibility. The academic cluster is connected to the NERC Observability Dashboard developed by Thorsten Schwesig. This dashboard integrates with NVIDIA’s DCGM (Data Center GPU Manager) to expose essential metrics such as GPU utilization, memory usage, temperature, power draw, and per-process statistics. The dashboard also includes OpenShift metrics such as Jupyter workbench and GPU pods running per namespace, requests.storage in % per namespace, and limits.memory in % per namespace. These metrics are critical for admins to track resource consumption, not only for students but also for shared research resources.

For the GPU programming class, however, observability extends beyond operational health and aggregate datacenter resource usage. Because students are expected to evaluate the micro-architectural features of GPUs experimentally, the cluster is configured to provide access to NVIDIA Nsight Systems profiling metrics. These include deeper counters like warp execution efficiency and shared memory transactions, allowing students to connect coding decisions directly to hardware behavior. Understanding hardware-software co-design will be essential for students later working in disciplines from high-performance computing and data science to AI/ML engineering. Having the opportunity to get access to this deeper level of measurement and analysis is something that typically is not available to programmers in a shared GPU environment, much less to students in a production datacenter. It is absolutely critical, however, in order to match the demands of an application to a resource profile for a project that doesn’t over-provision or under-provision compute, storage and network bandwidth as needs vary dynamically over time.

Kueue: fair and efficient GPU scheduling

In the previous OPE teaching model, every student received a dedicated CPU-based Jupyter notebook. This is now a standard tool for data scientists, and the software developers who work with them. But it is also excellent for teaching most classes: CPU resources are plentiful, inexpensive, and can be allocated on a per-user or per-workload basis without significant cost or inefficiency. However, the same approach cannot be applied to GPUs. While simple, this model has significant drawbacks:

- Costly idle time: GPUs are a premium resource, and when a student isn’t actively running a computation, the GPU sits idle on a project, draining resources from the datacenter as a whole, and money from the individual project budget. This is a massive inefficiency for any academic institution or industry collaborator.

- Limited control: This model offers less control for instructors and admins. Without a centralized way to manage and monitor resource usage, it’s hard to ensure fair access for everyone, especially when a project has a limited number of high-end GPUs, such as Nvidia H100s.

To solve this conundrum, the OPE team adopted a queue-based scheduling model using Kueue, a Kubernetes-native job management system. Instead of tying GPUs directly to student notebooks, students now use a lightweight, CPU-based Jupyter notebook for coding and development. When they need to run their GPU code, they simply submit it as a batch job from their notebook’s terminal, using simple commands.

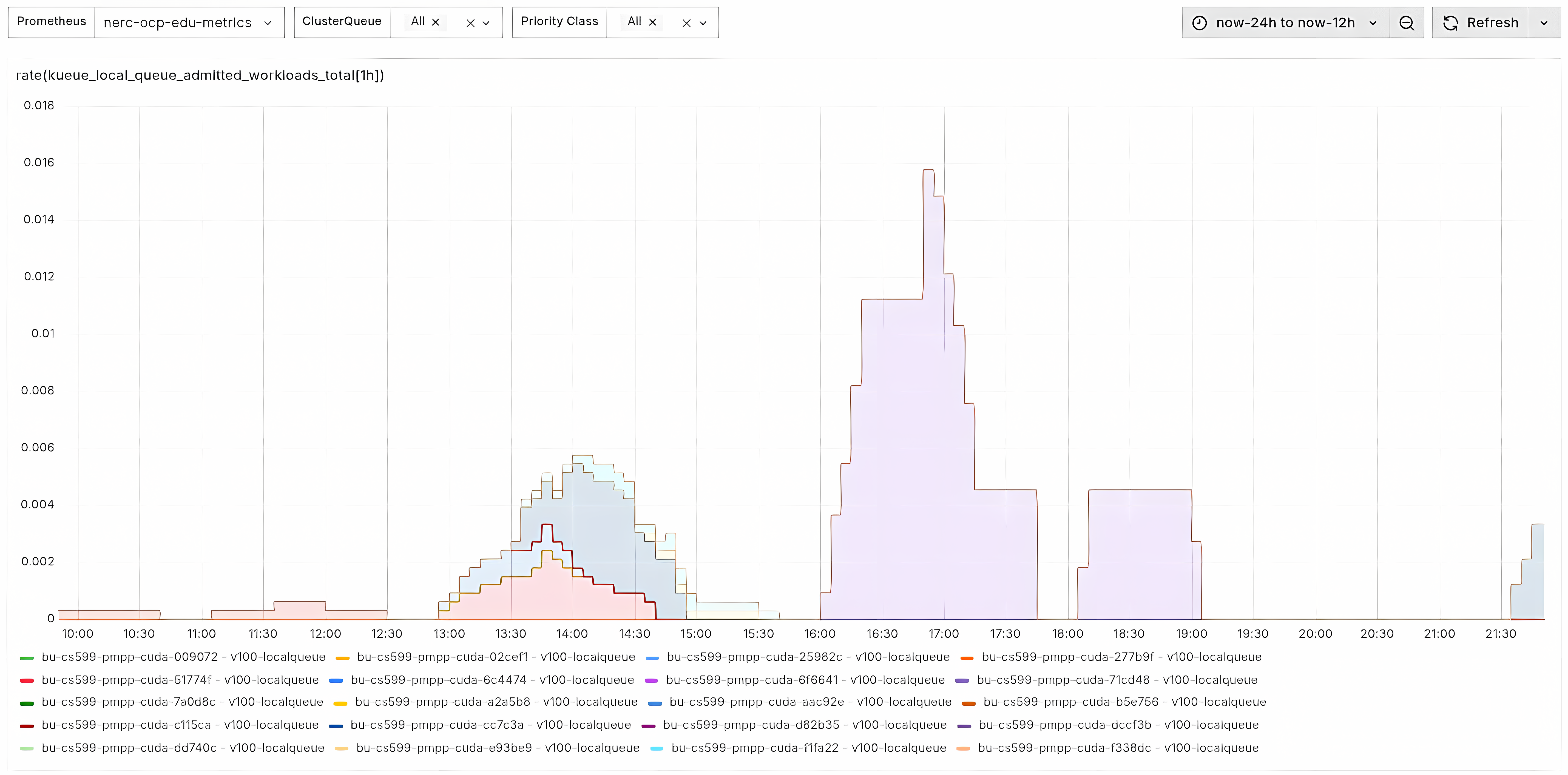

This is where Kueue comes in. Here’s how it works:

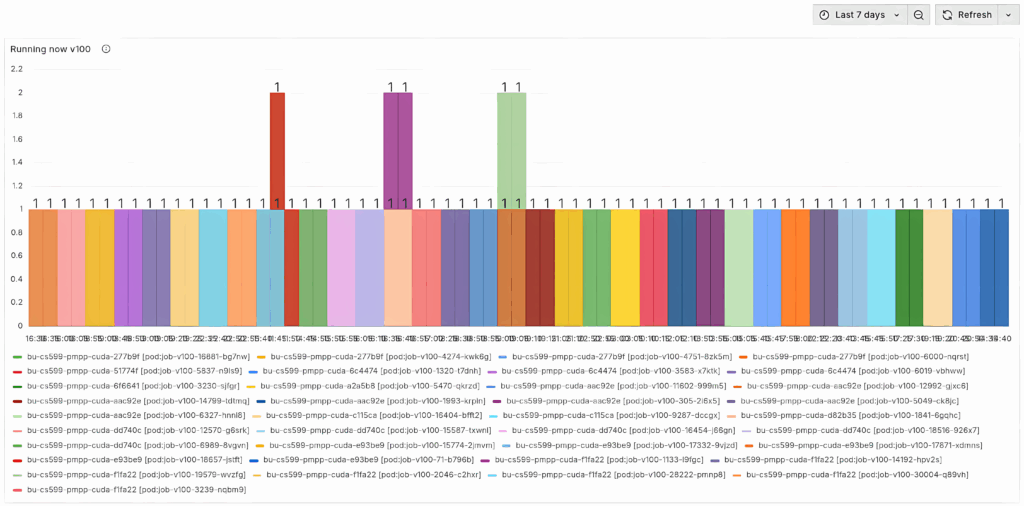

- Job Queues: Each student namespace has a LocalQueue that points to a ClusterQueue, which represents the overall pool of GPUs (Nvidia V100, A100, H100) available in the academic cluster.

- Job Execution: When a GPU becomes free, Kueue assigns it to the next pending job from the LocalQueue, triggering the creation of a pod on the GPU node. The job securely copies the necessary code files and data from the student’s development notebook to the newly created GPU pod. This creates a seamless process for coding and experimentation.

- Termination and Release: Once the job completes, or if it runs for a set maximum time, the pod is automatically terminated. This releases the GPU, making it immediately available for the next student’s job in the queue.

- Visibility: Students can track job status directly from their notebooks (Pending -> Running -> Completed), giving them visibility and independence without requiring dedicated GPU containers.

This model ensures that GPUs are continuously utilized, fairly shared across students, and centrally managed, making resource-intensive classes both scalable and cost-effective.

Expanding use cases beyond the classroom

Beyond its use in the classroom, the Kueue system for GPU batch job scheduling offers a powerful solution for a wide range of developer use cases in multitenant environments:

- Research workloads: Graduate students and faculty running long experiments can share the same GPU pool without blocking each other. A bioinformatics lab could use this to run multiple simulations simultaneously, or a physics department could manage large-scale data analysis for different research groups efficiently. The same applies to different workloads in a consulting company with many individual ML projects to complete for different applications.

- Multitenant usage: Different projects can each have their own LocalQueue while still pulling from the same ClusterQueue. This benefits large organizations with various departments or external clients, such as a cloud provider offering GPU access to multiple customers or a university managing resources for different research centers.

- Policy flexibility: Admins can set different quotas or priorities. For example, a research project might be allocated more A100s, while a class queue is optimized for quick turnaround. This allows for fine-grained control over resource distribution, ensuring critical projects receive the necessary resources while maintaining fair access for all users.

- Fine-grained access control to telemetry and detailed hardware profiling: Because measurement and data analysis are an essential part of the developer’s environment, the dashboards and collected views of telemetry provided in the OPE environment naturally support data analysis for multiple tenants or multiple projects with different access requirements sharing resources in an industry setting as well.

Additional OPE Enhancements

In addition to GPU management, the OPE team has made other key enhancements to the Mass Open Cloud environment:

Preloaded Notebooks: To streamline the learning experience, students’ development notebooks are now pre-generated rather than launched manually through the UI. Meera Malhotra developed this feature to enable each notebook to be provisioned with the required LocalQueue configuration and associated RBAC permissions, ensuring it is immediately ready for assignments and labs. Students simply log in and run their notebook without needing to perform any additional setup. This way, students can focus on experiments instead of troubleshooting environments. The same advantage can also be available for developers in other MOC projects.

Gatekeeper policies: In other courses where students launch notebooks manually through the RHOAI UI without Kueue, a software Gatekeeper steps in to enforce defined resource limits. These policies, developed by Isaiah Stapleton, provide the following specific protections in OPE:

- Restricting students to use only the class container image.

- Limiting container size selection to instructor-defined options, so students can’t overspend resources.

- Blocking student notebooks from directly consuming GPUs through the usual RHOAI interface. Instead, student developers must submit requests for computing cycles through Kueue to claim their GPU.

Notebook labeling via mutating webhook: OPE uses a custom assign-class-label mutating webhook to automatically add labels to each notebook pod, denoting which class a student belongs to (e.g., class=”classname”). This serves several purposes:

- Billing Transparency: Labels allow admins of the cluster to differentiate between students from different classes running in the same namespace, enabling accurate, class-specific billing.

- Policy Enforcement: The same labels can also be used by Gatekeeper for validating policies to enforce rules at the class level, for example, restricting images, container sizes, or GPU count differently for each course. This automated labeling webhook ensures fine-grained governance in a multi-class academic environment, but can also be used for a multi-project shared industry environment.

By moving to a dedicated Academic OpenShift cluster in the Mass Open Cloud to explore new ways of conducting classes in a stable OpenShift and RHOAI environment, while introducing GPU batch scheduling with Kueue, the Open Education Project has built a smarter way to handle expensive high-performance resources. This approach allows for students to learn on hardware otherwise closed off to most classroom settings. Students learn how to write and optimize their CUDA code, how to coalesce memory accesses, and how to handle parallel programming on a scale most students don’t get to experience.

The class culminates with an exploration of how GPU programming can intersect with students’ research interests, equipping a new generation of future engineers and researchers with a deeper understanding of the hardware behind AI. OPE gives student developers the chance to think critically about improving the software that runs in datacenters, both for their classroom exercises and for their future responsibilities as developers in a resource- and energy-limited world.