Updated on February 20, 2024. This article was originally posted January 20, 2024.

The MOC Alliance annual workshop will be held February 28-29, 2024 at the George Sherman Union, 775 Commonwealth Ave., Boston. Featured topics include the newly launched AI Alliance, cloud infrastructure for data-intensive workloads, expanding access to resources via the open cloud, systems research, and more. See the full list of presenters below.

The MOC Alliance provides a structure for a set of interrelated projects that have grown up around the Mass Open Cloud (MOC) and Massachusetts Green High-Performance Computing Center (MGHPCC). These projects include production cloud services (NERC, NESE, OSN) for domain researchers operated and facilitated by university research IT, a national testbed for cloud research (OCT), projects to enable scientific and medical researchers (Biogrids, ChRiS), and projects to engage the open source (OI labs) and system research (Red Hat Collaboratory, i-Scale) communities. Red Hat is a founding member of the MOC Alliance and co-leads the Red Hat Collaboratory at Boston University partnership.

This year the workshop is structured around four tracks: 1) AI and the AI Alliance, 2) Marriage of Data and Compute, 3) Open Cloud, and 4) Operations and Discussions. The AI and the AI Alliance track follows December's announcement of the launch of the AI Alliance, a group of leading organizations across industry, startups, academia, research, and government coming together to support open innovation and open science in AI. Co-launched by IBM and Meta, the AI Alliance is "focused on accelerating and disseminating open innovation across the AI technology landscape to improve foundational capabilities, safety, security and trust in AI, and to responsibly maximize benefits to people and society everywhere" and brings together a critical mass of resources to accelerate open innovation in AI.

The MOC Alliance workshop will bring together a community of research IT personnel, researchers, users, and industry engineers and developers to celebrate what has been accomplished and help define the MOC Alliance strategy moving forward. Red Hatters working on these initiatives or topics are invited to participate in the workshop. There is no cost to attend. Those with questions can email Jennifer Stacy, jstacy@redhat.com.

To see specific session times, detailed abstracts, travel information, and more, visit the MOC Alliance website.

Wednesday, February 28

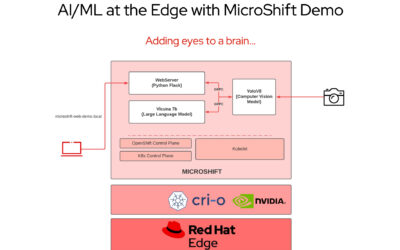

Morning—Session 1: AI and the AI Alliance

AI is expected to be one of the most demanding uses of the MOC in the near term, given the emergence of the AI Alliance, the huge demand from existing users, and the National AI Research Resource (NAIRR) on the horizon. This session is intended to kick off the discussion on AI and help identify the role the MOC Alliance can play in supporting AI users and the AI Alliance, though all four sessions will touch on how the MOC Alliance will focus on AI in the coming year.

Gloria Waters and Orran Krieger—Event Kickoff

Jeremy Eder—AI Alliance

Sanjay Arora—Learned Software Systems: Core systems software is responsible for critical decisions in computing infrastructure. The burgeoning field of machine learning (ML) for systems focuses on applying ML techniques (e.g., Bayesian optimization, reinforcement learning, evolutionary methods) to learn better heuristics and build more robust systems. This talk will describe some of Red Hat's current efforts in this area and discuss potential future projects.

Eshed Ohn-Bar—Federated Learning

Ellen Grant and Rudolph Pienaar—AI for Medicine and Radiology on NERC

Dave Palaitis—Fourth Generation Compute @ Two Sigma: This talk covers how our supercomputing design principles have evolved over the last two decades, from a simple scheduling system managing on-premises computers to a network of purpose-built, workflow-aligned compute clusters that use petabytes of memory and thousands of GPUs to push the limits of training ML models on time-series data. The talk raises several open questions and challenges and highlights areas where collaboration with MOC-A can help manage ML at scale, especially as applied to quantitative finance.

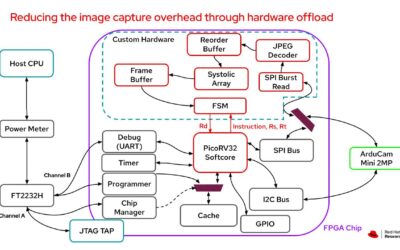

Afternoon—Session 2: Marriage of Data and Compute

There is an enormous opportunity for a cloud focused on research and education to take advantage of the interaction between data/storage and compute in a different way from today's public clouds. This session will review our status on NESE, Dataverse, and related capabilities and discuss how we can better exploit the relationship between data and compute to aid scientists using the cloud and to improve the utilization of the cloud infrastructure. While AI is an important theme here, the discussion applies more generally to all research domains.

Larry Rudolph—Here’s to a Long Marriage of Data and Compute in the Cloud

Scott Yockel—The Building Blocks of Cloud Research Enablement: While commercial cloud providers have built vast ecosystems of tools and infrastructure options, this abstraction has become quite opaque for the lay researcher: the options are overwhelming, costs are difficult to ascertain, and debugging performance issues is a black box. Drawing on our decades of HPC operational knowledge and the growing need for cloud infrastructure, we have built the Northeast Storage Exchange (NESE) and New England Research Cloud (NERC). This has given us the opportunity to support new domains of research on campus and an ecosystem of tools and software that bring computing and data together. In this talk, I'll provide an overview of our challenges and a vision of where this could take us in support of our university research mission.

Stefano Iacus—Bringing the Data Close to the Compute at Harvard Dataverse: Dataverse is open source software for generalist research data repositories, designed to support the FAIR principles (Findability, Accessibility, Interoperability, and Reuse of digital assets) and to be compliant with virtually any metadata format. We decided to exploit the versatility and cost-effectiveness of the MOC infrastructure in terms of HPC (NERC) and storage (NESE). Moreover, because the MOC is an integrated infrastructure, computation can run on large datasets without moving the data over the Internet. In this talk, we present a proof of concept of this approach in which the Dataverse software is integrated with NESE and NERC, realizing the goal of computing directly on large data.

Rory Macneil—The Norwegian Research Commons: A Model for NERC? In November 2023, 13 partner organizations, led by UiT The Arctic University of Norway and including other European universities, Harvard, and developers of research tools, proposed to the Norwegian government a major project to create a generalist national research infrastructure (the Norwegian Research Commons, or REASON). REASON is the first fully fledged Commons based on the Global Open Research Commons (GORC) model recently published by the Research Data Alliance. The talk will describe how REASON incorporates the elements of the GORC model, the roles to be played by RSpace and Dataverse, and how REASON could serve as a reference model for developing the NERC. The talk concludes with an overview of plans to deploy RSpace on NERC.

Adam Belay—Transforming Production Cloud Workloads into Realistic System Benchmarks: Benchmarks are a valuable tool for evaluating systems, but only if they can accurately capture the requirements of production workloads. Several benchmarks for database applications have become industry standards because they were refined over many decades based on the feedback of commercial companies and large organizations. However, this has proven to be much more difficult for cloud applications, as they evolve too rapidly for a traditional slow refinement process. In this talk, I will explore the use of production workloads to directly evaluate cloud research systems (e.g., serverless infrastructure, data processing pipelines). The MOC Alliance is uniquely positioned to unlock this higher standard in benchmarking because it drives research and open-source development with real workloads from a production cloud.

Mark Roth—Memento: Why Good Artifact Naming Matters: Two Sigma originally developed the recently open-sourced Memento as a way for researchers on the AlphaStudio platform to contribute data pipeline code. We quickly found that how a researcher organizes and names things plays a large role in delivering a reproducible research result with clear provenance. It turns out that clear naming and accurate metadata yield an added productivity and efficiency bonus: automatic caching! This talk will focus on how Memento presents an elegant solution to some of the most common data science research challenges, and will cover the current state of Memento and future research directions.

Matt Benjamin—D4N

Thursday, February 29

Morning—Session 3: Open Cloud

The MOC Alliance has been developing a new vision for an open cloud that enables different groups to stand up cloud services on a level playing field, where services can grow or shrink based on demand, systems researchers and developers can discover issues and develop new services, and multiple datacenters can participate in the cloud. These capabilities are increasingly important as we look to expand the MOC to address the needs of the AI Alliance and NAIRR. This session will discuss the status of what we have and the challenges we need to address, to help focus our community on these issues.

Jon Stumpf—Vision and Services with ESI

Kristi Nikolla—Existing Services and What is Missing

Tzu-Mainn Chen, Naved Ansari, and Hakan Saplakoglu—ESI: Details, Status, and Switch Management Tool: In the past year, usage of ESI within the MOC has grown in exciting ways. ESI has been actively deployed within the Mass Open Cloud, overseeing the management of numerous racks of servers and switches. Researchers leverage ESI's leasing mechanism to allocate bare-metal servers for a variety of projects. Managing a fleet of network switches in a research environment is always a challenge because of the amount of collaboration between different parties. This talk will discuss using Ansible to deploy switch manifests stored in Git. This gives the whole network Git's powerful version control features, while also enabling better collaboration through pull requests when the manifests are hosted on GitHub.

Emmanuel Cecchet—CloudLab on ESI: Bare-metal hardware is usually statically assigned to testbeds like OCT and NERC. With some of the OCT resources offered through the CloudLab control framework, other MOC resources managed with ESI, and each system expecting exclusive hardware access, it is challenging to share resources between different platforms (OCT, NERC, etc.). In this talk, we will demonstrate how we can use ESI as the hardware abstraction layer to share resources between testbeds. By using ESI across the board and relying on ESI's lease mechanism, we can share resources between MOC and NERC by implementing an ESI network stack for CloudLab. This allows any hardware to be used dynamically by any platform based on demand.

Peter Desnoyers and Isaac Khor—LSVD: Elastic Storage on the Gateway: Storage systems can be measured in two dimensions: capacity and performance. A single storage device or storage-equipped server typically provides these in a fixed ratio, where improving one either raises capital cost or degrades the other. Yet demands for storage capacity and performance vary widely, both across workloads and over time. Today's disaggregated storage systems decouple storage capacity from CPU resources, allowing client computation and (static) storage requirements to be met without stranding resources of either class. Yet their designs typically couple storage capacity and performance in a ratio that must be chosen at the time of initial deployment. LSVD decouples the performance-related components of the storage system from its capacity tier, allowing performance to be provisioned independently of capacity, and even elastically as workloads change over time. Our evaluation demonstrates SSD-class performance over HDD backends, using a scalable intermediate caching tier.

Chris Simmons—Getting Started with the Open Storage Network: The Open Storage Network (OSN), funded by the NSF, the Schmidt Futures Foundation, and Dalio Philanthropies, developed a storage platform to support scientific and scholarly production using cost-effective, performant systems connected at high speeds via Internet2. The OSN provides an industry-standard cyberinfrastructure service to address specific data storage, transfer, sharing, and access challenges. In this talk, we will summarize the current status of the OSN, review how to get an allocation on the storage network of up to 50 TB, and demonstrate common workflows and usage models using the S3 API.

Afternoon—Session 4: Operations and Discussions

Many components are essential to the MOC Alliance's vision of an open cloud. The topic of operations includes education, support for systems research, user outreach and facilitation, and governance and partnerships. This session will include discussions led by some of our presenters on operations topics, as well as on our other major topics of AI, Marriage of Data and Compute, and Open Cloud, to allow our community to connect and provide feedback in real time.

Jon Stumpf and John Goodhue—Governance and Partnerships: We are interested in discussing opportunities to improve efficiency and responsiveness in how the MOC Alliance engages with current and prospective partners. In our Discussion Group time, we want to enumerate an initial set of areas for improvement, along with the perceived impact and urgency of each.

Chris Simmons and Milson Munakami—Fostering Cloud Adoption Using a Community-Driven Approach: In this talk, we will summarize our current user outreach strategy and propose several community-driven activities to aid with the adoption of MOC and NERC resources. We will examine the current landscape of user engagement and propose strategies for expanding outreach to a wider audience. By emphasizing community-driven solutions, we aim to not only increase short-term adoption of cloud-centric workflows, but also scale to provide a self-sustaining community of trained experts.

Michael Zink and Heidi Dempsey—Back to the Future Systems Research: We will discuss ongoing systems experiments, development, and systems research topics for 2024, touching on testbed federation, connections to large data sources for AI, mobile/edge computing experiments and tools, high-speed network interconnections like FABRIC, and development making it easier to do all these things.

Heidi Dempsey, Danni Shi, and Jonathan Appavoo—Open Education Project

To register and follow agenda updates, please visit the MOC Alliance 2024 Workshop page.

- Who: Researchers, engineers, and developers working in research IT, systems research, AI, data science, and open cloud

- When: February 28-29, 2024

- Where: George Sherman Union (GSU), Metcalf Ballroom (2nd Floor), Boston University, 775 Commonwealth Ave., Boston, MA 02215