OSMOSIS: Open-Source Multi-Organizational Collaborative Training for Societal-Scale AI Systems

Note: See the Co-Ops: Collaborative Open-Source and Privacy-Preserving Training for Learning to Drive project page for details on the continuing research on this topic.

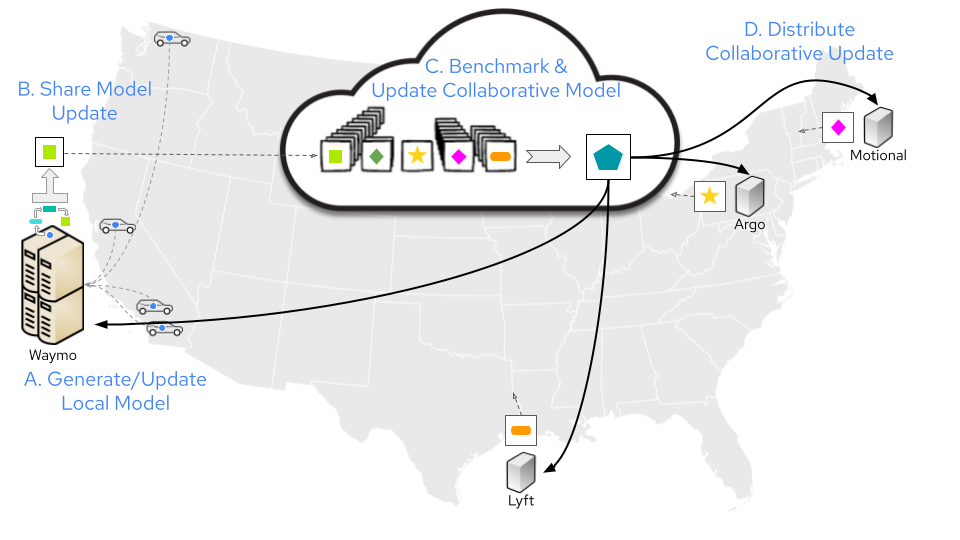

The goal of our project is to develop a novel framework and cloud-based implementation that facilitates collaboration across highly heterogeneous research, development, and educational settings. Currently, AI models for real-world intelligent systems are rarely trained through a collaborative process spanning multiple entities. However, collaboration among different companies and institutions can increase AI model robustness and resource efficiency. To make the development of AI systems at massive scale more efficient, we propose a general framework for AI model sharing, together with incentive structures that support seamless collaboration across diverse models, devices, use cases, and underlying data distributions. By enabling distributed sharing of AI models in a secure, privacy-preserving, and incentivized manner, our proposed framework aims to substantially reduce system development costs while increasing system robustness and scalability.

Additional Project Details

Project Team

Principal Investigator: Eshed Ohn-Bar

PhD Students: Jimuyang Zhang, Ruizhao Zhu, and Hee Jae Kim

Graduate Students: Parker Dunn, Kevin Vogt-Lowell, Peng Huang, and Zanming Huang

Undergraduate Students: Shun Zhang, Fadi Kideess, and Mariia Kharchenko

Presentations

- Open-Source Brains for Large-Scale Autonomous Systems, Greater New England/US Research Interest Group Meeting, April 2022

- Personalized Interaction Policies from Heterogeneous Supervision, AIR, August 2022

- How to Scale Autonomy? West Coast Alumni Leadership Council, July 2022

- Scaling Accessible Systems, Harvard talk series, Invited Talk, May 2022

- Customized Mobility Systems, Science on Screen, Martha’s Vineyard Film Society, Invited Talk, April 2022

- Scaling Accessible Systems, Human-Computer Interaction Lab, University of Maryland, Invited Talk, March 2022

- Accessibility of Autonomous Vehicles, Sight Tech Global, Invited Speaker/Panelist, 2021

- Scaling Accessible Systems, Smith-Kettlewell Eye Research Institute, 2021

- Learning Robust Navigation Policies, MathWorks, 2021

Other Notable Accomplishments

- Student Shun Zhang was awarded a Collaboratory Student Research Award to conduct research on the associated sub-project “D-COLLECTIVE: Democratized Data Collection and Collaborative Training for Extreme-Scale Autonomous Systems”

- We organized a workshop on Accessibility, Vision, and Autonomy at CVPR 2022, and a follow-up workshop has been accepted at CVPR 2023
