Co-Ops: Collaborative Open Source and Privacy-Preserving Training for Learning to Drive

Note: This project is a continuation of OSMOSIS: Open-Source Multi-Organizational Collaborative Training for Societal-Scale AI Systems.

Abstract

Current development of autonomous vehicles, a socially transformative technology, has been slow, costly, and inefficient. Deployment has been limited to restricted operational domains, e.g., a handful of cities and routes, and systems often fail to scale and generalize to new settings such as a new vehicle, city, weather condition, or geographical location. How can we enable urgently needed scalability in the development of autonomous driving AI models?

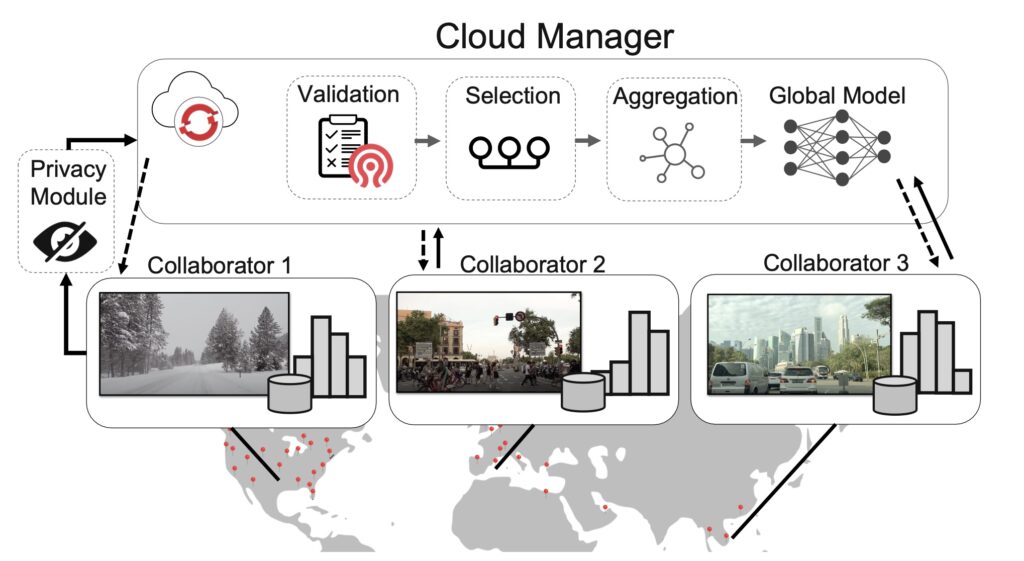

To address this critical need, our project introduces Co-Ops, a novel framework for collaboratively developing and training AI models at scale. Co-Ops will work towards enabling generalized, seamless, and accessible collaboration among individuals, institutions, and companies through two main innovations. First, we will develop a standardized and privacy-preserving platform for flexible and incentivized participation in distributed AI model training. Second, we will design principled AI models and training techniques that can effectively learn from unconstrained and heterogeneous data spanning different geographical locations, vehicles, rules of the road, social norms, and traffic regulations. With these two innovations, Co-Ops will enable high-capacity, open-source models that can easily scale across various locations and conditions, from Boston’s narrow roads to Singapore’s left-hand traffic.
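As a rough illustration of what privacy-preserving distributed training can look like, the sketch below shows one server-side aggregation round in the style of federated averaging: each participant's model update is clipped to a maximum norm, the clipped updates are averaged, and Gaussian noise is added before the shared weights are updated. This is not the Co-Ops implementation. The function names, `max_norm`, and `noise_std` are hypothetical, and the noise level is not calibrated to any formal privacy budget.

```python
import math
import random


def clip_update(update, max_norm):
    """Scale an update vector down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(x * x for x in update))
    if norm <= max_norm or norm == 0.0:
        return list(update)
    scale = max_norm / norm
    return [x * scale for x in update]


def private_federated_average(global_weights, client_updates,
                              max_norm=1.0, noise_std=0.0, rng=None):
    """One aggregation round: clip each update, average, add Gaussian noise.

    All parameter names are illustrative assumptions. noise_std is the
    standard deviation of noise added to the summed updates, so the
    averaged update receives noise with std noise_std / number of clients.
    """
    if not client_updates:
        raise ValueError("at least one client update is required")
    rng = rng or random.Random(0)
    n = len(client_updates)
    clipped = [clip_update(u, max_norm) for u in client_updates]
    averaged = [sum(u[i] for u in clipped) / n
                for i in range(len(global_weights))]
    noisy = [a + rng.gauss(0.0, noise_std / n) for a in averaged]
    # Apply the noisy averaged update to the shared model weights.
    return [w + d for w, d in zip(global_weights, noisy)]


if __name__ == "__main__":
    # Two hypothetical participants contributing updates to a 2-parameter model.
    updates = [[3.0, 4.0], [0.0, 0.5]]
    print(private_federated_average([0.0, 0.0], updates,
                                    max_norm=1.0, noise_std=0.1))
```

Clipping bounds how much any single participant can move the shared model, which is what makes added noise meaningful for privacy. A real deployment would also need secure communication, a calibrated noise level, and validation of the aggregated model.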

Project Resources and Repositories

Related Funding

Accepted Papers (to be added to Publications when the papers are available)

- Lai, Lei, Arora, Sanjay, and Eshed Ohn-Bar. “Uncertainty-Aware Never-Ending Learning,” The IEEE/CVF Conference on Computer Vision and Pattern Recognition, June 17-21, 2024

- Zhang, Jimuyang, Huang, Zanming, Ray, Arijit, and Eshed Ohn-Bar. “Feedback-Guided Autonomous Driving,” The IEEE/CVF Conference on Computer Vision and Pattern Recognition, June 17-21, 2024

Presentations

- Community-Driven Scalability for AI: Scaling Systems with Everyone, Everywhere, All the Time, Eshed Ohn-Bar, MOC Alliance Workshop, 2024 (Community-Driven Scalability for AI video)

- Learning to Drive Anywhere, Ruizhao Zhu, CISE Graduate Student Workshop, 2024

- Accessibility as Scalability, Eshed Ohn-Bar, IBM Tokyo (virtual), 2024

- Machine Learning, Memorization, and Privacy, Adam Smith, Theory and Practice of Differential Privacy Workshop, Boston, 2023

- On the Limits of Imitation, Eshed Ohn-Bar, UIUC Vision Seminar (virtual), University of Illinois Urbana-Champaign, 2023

- Department of Transportation and FHWA, Eshed Ohn-Bar, Inclusive Design Dialogues (virtual), 2023

- Interactive Autonomy at Scale, Eshed Ohn-Bar, Robotics on Tap at BU, 2023

- The Price of Differential Privacy under Continual Observation, Adam Smith, Google Workshop on Federated Learning & Analytics, 2022

- When is Memorization Necessary for Machine Learning? Adam Smith, Rutgers, 2022

Posters

- “Towards Robust and Inclusive Mobile Systems.” Presented in Washington DC at the Yearly NSF FRR Meeting, 2023 (Ohn-Bar, Eshed)

- “Coaching a Teachable Student.” Presented in Vancouver, Canada at the Conference on Computer Vision and Pattern Recognition, 2023 (Zhang, Jimuyang, Huang, Zanming, Ohn-Bar, Eshed)

- “Learning to Drive Anywhere.” Presented in Atlanta, Georgia at the Conference on Robot Learning, 2023 (Zhu, Ruizhao, Huang, Peng, Ohn-Bar, Eshed, and Saligrama, Venkatesh)

- “SelfD: Self-Learning Large-Scale Driving Policies From the Web.” Presented in New Orleans at the Conference on Computer Vision and Pattern Recognition, 2022 (Zhang, Jimuyang, Zhu, Ruizhao, Ohn-Bar, Eshed)

Figure caption: High-level description of a holistic platform and research challenges that will be addressed in the proposed mass collaborative open-source framework. As scalable training over heterogeneous settings is not feasible with current collaborative AI training frameworks, we will introduce and explore novel client and server-based modules for joint privacy and driving model safety optimization (i.e., model selection, validation, capacity).