PHYSICS: oPtimized HYbrid Space-time servIce Continuum in faaS

Join Red Hat Research for the next Research Days event, “PHYSICS EU Project: Advancing FaaS applications in the cloud continuum,” on November 16, 2022, from 3PM to 4:30PM CET (4PM IST, 9AM EST).

PHYSICS is a high-technology European research project with total funding of about €5 million, carried out by a consortium of 14 international partners. The project started in January 2021 and will end in December 2023. The main goal of PHYSICS is to unlock the potential of the Function-as-a-Service (FaaS) paradigm for Cloud Service Providers (CSPs) and for application developers. Specifically, it will enable application developers to design, implement, and deploy advanced FaaS applications using new functional flow programming tools that leverage proven design patterns and existing libraries of cloud/FaaS components.

Furthermore, PHYSICS will offer a novel Global Continuum Layer that will help to efficiently deploy functions while optimizing multiple application objectives such as performance, latency, and cost. PHYSICS will validate the benefits of its Global Continuum Layer and tools in the scope of user-driven application scenarios in three important sectors: healthcare, agriculture, and industry.

PHYSICS Architecture

The following figure shows the main PHYSICS architecture components, which are divided into several groups:

- Application Developer Layer: includes repository templates, visual workflow utilities, and application components.

- Semantics: describes the application requirements, service capabilities, and functional constraints that affect deployment.

- Continuum Deployment Layer: orchestrates the deployment of functions and components across the different service edges.

- Controller Toolkit: a set of controller components for deploying resource and elasticity controllers.

- FaaS Execution Layer: manages the deployment of functions into the infrastructure.

- Infrastructure Layer: supports multi-cluster service setups based on Kubernetes, including a new set of APIs and new or enhanced resource controllers that can optimize the performance of deployed functions.

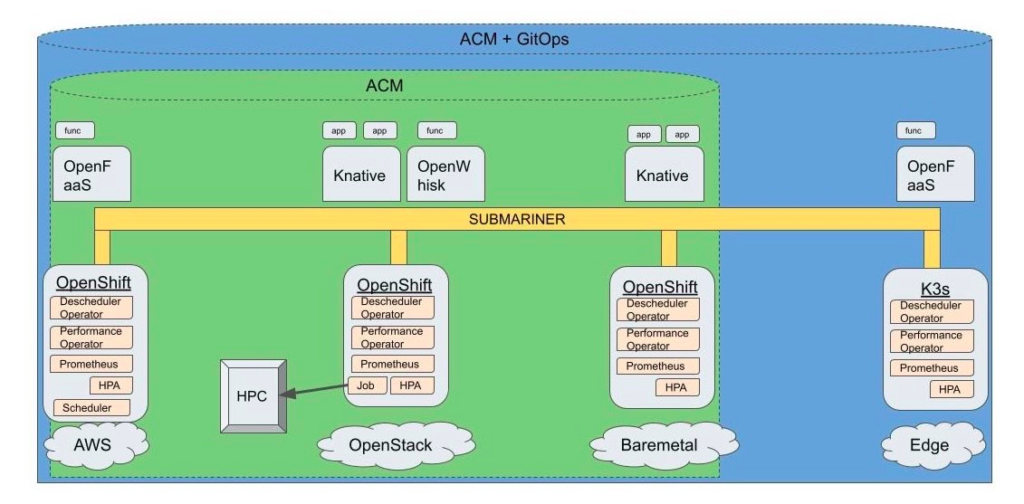

Red Hat is contributing to several tasks related to elasticity controllers, adaptable algorithms for infrastructure services, and open source activities. Red Hat leads one of the work packages: "WP5: Extended Infrastructure Services with Adaptable Algorithms". As part of this WP, and in collaboration with the architecture definition, we have identified a set of Kubernetes components on which Red Hat's contributions will focus, with the aim of providing the APIs and functionality needed by the upper layers. The following figure shows the open source components through which Red Hat plans to support the layers above the infrastructure.

In-cluster components:

- Horizontal Pod Autoscaler (HPA): The HPA scales the number of replicas of a deployment based on the resource metrics API (CPU and memory). Red Hat's contributions will add support for external metrics (i.e., enhancements to the custom metrics API), as well as more advanced mechanisms for deciding how many replicas are needed over time, for example scaling decisions based on time series analysis, ML/AI modeling, or even the existing pod state.

- Cost Minimization: Public clouds add a new element to application scheduling metrics: the cost of ongoing operations. To minimize this cost, we want to maximize the use of cheaper resources (such as preemptible instances), which are usually less reliable, while preserving cluster reliability. Although such resources have traditionally been used for stateless workloads, this work will also place some stateful workloads on them while maintaining cluster reliability when resources are preempted.

- Descheduler Operator: One of the project's targets is the continuous optimization of deployments over time, reacting to changing application needs as well as infrastructure changes. The Descheduler Operator will optimize deployments after initial scheduling, reacting to new application and infrastructure status information. This work will add support for more advanced descheduler algorithms.

- Schedulers and Scheduling Policies: By default, the Kubernetes scheduler applies a fixed set of policies (e.g., filtering and weighting of candidate nodes). Applying the same scheduler type to all applications is not optimal, as different applications may need different techniques. Red Hat's contribution will add support for advanced scheduling algorithms as well as the APIs needed to apply them. This will allow different scheduling techniques, considering different metrics and values, depending on the type of application being scheduled and/or the type of environment being managed.

- Performance Operator: How pods are co-located on the same node also matters. This involves not only deciding on the affinity/anti-affinity needs of different application components, but also, once two or more pods are scheduled on the same node, determining how best to isolate them from one another (if needed). This includes CPU pinning, as well as CPU, memory, and bandwidth controls to ensure appropriate deployment of co-located applications with different performance requirements. Development will include APIs that components in the upper layers can use to configure nodes as required.
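As a reference point for the HPA enhancements described above, the upstream HPA computes its target replica count as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)` and skips the change when that ratio is within a tolerance of 1.0 (0.1 by default). The minimal Python sketch below reproduces only that rule plus min/max clamping. The function and parameter names are hypothetical, and the sketch omits stabilization windows, scaling policies, and missing-metric handling; it is not the PHYSICS controller.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Replica count suggested by the basic HPA scaling rule (sketch).

    desired = ceil(current_replicas * current_metric / target_metric),
    left unchanged when the ratio is within `tolerance` of 1.0,
    then clamped to [min_replicas, max_replicas].
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Inside the tolerance band: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Respect the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 replicas averaging 200 units against a target of 100 yield 8 replicas, while a reading of 105 stays within tolerance and keeps 4. A predictive controller of the kind mentioned above could, as one possible design, pass a forecast metric value instead of the current one; that is an assumption, not a described PHYSICS mechanism.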
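The filter-then-score pattern behind the default Kubernetes scheduler, and the idea of choosing a scoring policy per application type, can be illustrated with a toy Python model. Everything here is hypothetical: the `Node`/`Pod` classes, the policy names, and the CPU-only scores are simplifications. The real kube-scheduler runs filter and score plugins from its scheduling framework and normalizes each plugin's scores before weighting them.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Node:
    name: str
    free_cpu: float  # allocatable cores not yet requested
    labels: Dict[str, str] = field(default_factory=dict)


@dataclass
class Pod:
    name: str
    cpu: float  # requested cores
    node_selector: Dict[str, str] = field(default_factory=dict)


FilterFn = Callable[[Pod, Node], bool]
ScoreFn = Callable[[Pod, Node], float]


def fits_cpu(pod: Pod, node: Node) -> bool:
    # Filter: the node must have enough unrequested CPU for the pod.
    return pod.cpu <= node.free_cpu


def matches_selector(pod: Pod, node: Node) -> bool:
    # Filter: every nodeSelector label must be present with the same value.
    return all(node.labels.get(k) == v for k, v in pod.node_selector.items())


def least_allocated(pod: Pod, node: Node) -> float:
    # Score: prefer nodes with the most CPU left after placement (spreading).
    return node.free_cpu - pod.cpu


def most_allocated(pod: Pod, node: Node) -> float:
    # Score: prefer nodes left fullest after placement (bin packing).
    return -(node.free_cpu - pod.cpu)


# Hypothetical per-application-type policies: (score function, weight) pairs.
POLICIES: Dict[str, List[Tuple[ScoreFn, float]]] = {
    "latency-sensitive": [(least_allocated, 1.0)],
    "batch": [(most_allocated, 1.0)],
}

DEFAULT_FILTERS: List[FilterFn] = [fits_cpu, matches_selector]


def schedule(pod: Pod, nodes: List[Node], policy: str,
             filters: List[FilterFn] = DEFAULT_FILTERS) -> Optional[str]:
    """Return the name of the chosen node, or None if no node is feasible."""
    feasible = [n for n in nodes if all(f(pod, n) for f in filters)]
    if not feasible:
        return None
    scorers = POLICIES[policy]
    # Weighted sum of the policy's scores; highest total wins.
    best = max(feasible, key=lambda n: sum(w * s(pod, n) for s, w in scorers))
    return best.name
```

With nodes offering 2, 8, and 16 free cores, the same 1-core pod lands on the emptiest node under the spreading policy and on the fullest feasible node under the bin-packing policy, which is the kind of per-application choice the scheduling work above aims to expose through APIs.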

Multi-cluster components:

- Submariner: PHYSICS envisions a multi-cluster environment where different types of Kubernetes clusters are deployed at different locations. Enhancements to the Submariner project will provide a way to connect applications on multiple clusters.

- ACM + GitOps: GitOps is currently used to define the "recipe" for deploying applications and policies in a declarative manner. Red Hat Advanced Cluster Management (ACM), integrated with Submariner, will support GitOps and provide a unified networking view across multiple infrastructures.

- Low footprint: Some infrastructures, especially at network edges, require a small-footprint Kubernetes deployment. We will investigate options such as K3s, Kind, or Single Node OpenShift for integration into the overall orchestration architecture.
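The declarative GitOps model mentioned above rests on a reconcile loop: a controller compares the desired state stored in Git with the live state of a cluster and derives the actions needed to converge. The Python sketch below shows only that diffing step. Resources are modeled as plain dictionaries keyed by a hypothetical `Kind/name` string, and the `plan`/`apply_plan` helpers are illustrative; they are not the API of ACM or of any GitOps tool.

```python
from typing import Dict, List, Tuple

# Simplified resource model: "Kind/name" -> spec dictionary.
State = Dict[str, dict]
Action = Tuple[str, str]  # (verb, resource key)


def plan(desired: State, live: State, prune: bool = True) -> List[Action]:
    """Compute the actions that bring `live` in line with `desired`."""
    actions: List[Action] = []
    for key in sorted(desired):
        if key not in live:
            actions.append(("create", key))
        elif desired[key] != live[key]:
            actions.append(("update", key))
    if prune:
        # Resources present in the cluster but no longer declared in Git.
        for key in sorted(live):
            if key not in desired:
                actions.append(("delete", key))
    return actions


def apply_plan(actions: List[Action], desired: State, live: State) -> State:
    """Return a new live state with the planned actions applied."""
    result = dict(live)
    for verb, key in actions:
        if verb == "delete":
            del result[key]
        else:  # create or update: copy the declared spec
            result[key] = dict(desired[key])
    return result
```

Because the loop only acts on differences, running it again after convergence is a no-op: once the live state matches Git, `plan` returns an empty list.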

- Project coordinator: Fabrizio Di Peppo, from GFT

- Technical coordinator: Marta Patiño, from UPM

View the presentation from the DevConf.CZ 2023 workshop "Writing a K8s Operator for Knative functions".

This project has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No 101017047.

Related RHRQ Articles

- Unleashing the potential of Function as a Service in the cloud continuum

- Hybrid cloud, edge, and security research featured at DevConf.CZ 2023

- Publication highlights—November 2024

- Look to the Horizon: Europe’s increased focus on funding open source research is creating new opportunities

- Research project updates—May 2022

- Research Project Updates—August 2021