by Gordon Haff, technology advocate at Red Hat

Where do people come together to make cutting-edge invention and innovation happen?

One possible answer is the corporate research lab. More long-term focused than most company product development efforts, corporate labs have a long history, going back to Thomas Edison’s Menlo Park laboratory in New Jersey. Perhaps most famous of all was Bell Labs for its invention of the transistor—although software folks may associate it more with Unix and the C programming language.

But corporate laboratories have tended to be associated with dominant firms that could afford to let large staffs work on very forward-looking and speculative research projects. After all, Bell Labs was born of the AT&T telephone monopoly. Corporate labs also aren’t known for playing well with their counterparts elsewhere in industry. Even if their focus is long-term, they’re looking to profit from their IP eventually, which also means that their research is often rooted in technologies that are commercially relevant to their business.

A long-term focus is also hard to maintain if a company becomes less dominant or less profitable. It’s a common pattern that, over time, research and development in these labs come to look a lot like an extension of the more near-term focused product development process. Historically, the focus of corporate labs has been to develop working artifacts and to benchmark themselves against others in the industry by the size of their patent portfolio—although we do see more publishing of results than in years past.

The academy

Another engine of innovation is the modern research university. In the US, at least, the university as research institution primarily emerged in the late 19th century, although some such schools had colonial-era roots, and the university research model truly accelerated after World War II.

Academia is both collaborative and siloed: collaborative in that professors often work with colleagues around the globe, siloed in that even colleagues at the same institution may not work together much if they’re not in the same specialty. Although IP may not be protected as vigorously in a university setting as a corporate one, it can still be a consideration. The most prominent research universities make large sums of money from IP licensing.

The primary output of the academy is journal papers. This focus on publication, sometimes favoring quantity over quality, comes with a famous phrase attached: publish or perish. Furthermore, while the content of papers is far more about novel results than commercial potential, that has a flip side: research can end up being quite divorced from real-world concerns and use cases. Among other consequences, this is often not ideal for the students working on that research if they move on to industry after graduation.

Open source software

What of open source software? Certainly, major projects are highly collaborative. Open source software also supports the kind of knowledge diffusion that, throughout history, has enabled the spread of at least incremental advances in everything from viticulture to blast furnace design in 19th-century England. That said, open source software historically had a reputation mostly for being good enough and cheaper than proprietary software. That’s changed significantly, especially in areas like working with large volumes of data and the whole cloud-native ecosystem. This shift probably reflects collaboration winning out over a tendency toward incrementalism in many cases. IP concerns are largely resolved in open source software, occasional patent and license incompatibility issues notwithstanding.

The open source model has been less successful outside the software space. We can point to exceptions. There have been some wins in data, such as open government datasets and projects like OpenStreetMap—although data associated with many commercial machine learning projects, for example, is a closely guarded secret. The open instruction set architecture specification, RISC-V, is another developing success story. Taking a different approach from earlier open hardware projects, RISC-V seems to be succeeding where past projects did not.

Open source software is most focused on shipping code, of course. However, associated artifacts such as documentation and validated patterns for deploying code through GitOps processes are increasingly recognized as important.

This brings us to an important question: How do we take what is good about each of these patterns for creating innovation and combine it? Specifically, how do we apply open source principles and practices where appropriate? That’s what we’ve sought to accomplish with Red Hat Research.

Red Hat Research

Work towards what came to be Red Hat Research began in Red Hat’s Brno office in the Czech Republic in the early 2010s. In 2018, the research program added a major academic collaboration with Boston University: the Red Hat Collaboratory at Boston University. The goal was to advance research in emerging technologies in areas of joint interest, such as operating systems and hybrid cloud. The scope of projects that Red Hat and its academic partners collaborate on has since expanded considerably, although infrastructure remains a major focus.

The Collaboratory sponsors research projects led by collaborative teams of BU faculty and Red Hat engineers. It also supports fellowships and internship programs for students and organizes joint talks and workshops.

In addition to activities like those associated with the BU Collaboratory, Red Hat Research now publishes a quarterly magazine (sign up for your free subscription!), runs Red Hat Research Days events, and has regional Research Interest Groups (RIGs) that all are welcome to participate in. Red Hat engineers also teach classes and work with students and faculty to produce prototypes, demos, and jointly authored research papers as well as code.

What sorts of projects?

Consider the following four examples of the sorts of projects that Red Hat Research has been participating in around North America.

Machine learning for Cloud Ops

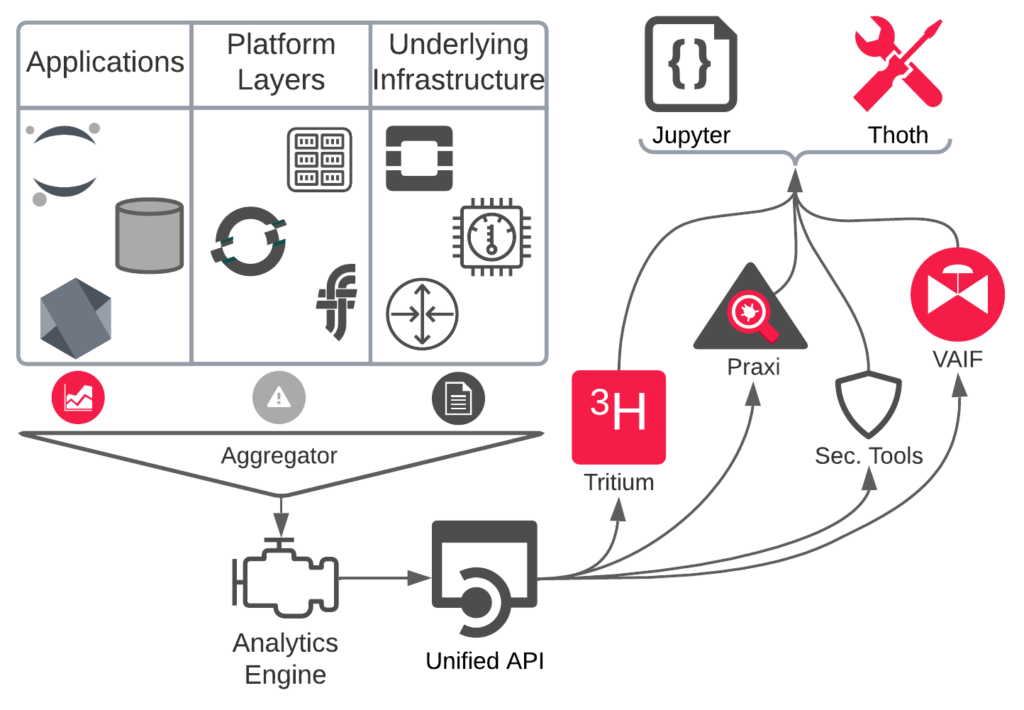

In cloud systems, component diversity and the breakneck pace of development amplify the difficulty of identifying, localizing, or fixing problems related to performance, resilience, and security. Relying on human experts, as we have done historically, has limited applicability to modern automated CI/CD pipelines: handcrafted development processes are fragile, costly, and often not scalable.

BU professor Ayse Coskun’s proposal AI for Cloud Ops was among the first recipients of the Red Hat Collaboratory Research Incubation Awards. Red Hat Research’s $1 million grant to her group is the largest award the Collaboratory has given to a single project. Among other things, she and her team, which includes Red Hatter Marcel Hild, are working on software discovery and data visualization. Two tools have already been released to the open source community over the last few years. One is Praxi, which determines whether a software installation has any vulnerable code or prohibited application in it. The other is ACE, or Approximate Concrete Execution. ACE executes functions (approximately) and creates signatures of them very quickly, then searches across libraries of functions known to be problematic, identifies them, and gives feedback to the user. Ayse was interviewed for the May 2022 issue of Red Hat Research Quarterly.

Rust memory safety

Rust is a relatively new language that aims to be suitable for low-level programming tasks while providing the memory safety that a language like C significantly lacks. Rust does well in this regard, but there’s a problem. Rust has an “unsafe” keyword that suspends some of the compiler’s memory checks within a specified code block. There are often good reasons to use this keyword (the Rust language developer information even describes it as a superpower), but it’s then up to the developer to ensure their code is memory safe. Sometimes that does not work out so well. The number of security advisories filed against Rust packages (in RUSTSEC) is growing.
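To make the trade-off concrete, here is a minimal sketch of what an “unsafe” block looks like in practice. The function name `element_at` and the sample values are made up for illustration and do not come from any project mentioned here. Inside the block, the compiler no longer verifies the raw pointer read, so the bounds check written just above it is the only thing keeping the code memory safe.

```rust
/// Returns the element at `index`, or `None` when `index` is out of range.
/// (Hypothetical helper, written only to illustrate an `unsafe` block.)
fn element_at(data: &[u32], index: usize) -> Option<u32> {
    // This check is the developer's responsibility: the compiler will not
    // insert or verify it for the raw pointer read below.
    if index >= data.len() {
        return None;
    }
    // SAFETY: `index < data.len()` was checked above, so the pointer stays
    // inside the slice and points at an initialized `u32`.
    let value = unsafe { *data.as_ptr().add(index) };
    Some(value)
}

fn main() {
    let values = [10, 20, 30];
    println!("{:?}", element_at(&values, 1)); // prints Some(20)
    println!("{:?}", element_at(&values, 7)); // prints None
}
```

If the bounds check were removed, the program would still compile, but the second call would read memory outside the array. That is the class of mistake that can end up in a security advisory.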

Professor Baishakhi Ray, Columbia University, and graduate student Vikram Nitin, along with Red Hatter The (Anne) Mulhern, are researching the automated detection of vulnerabilities in Rust. The research is focused on building novel program analysis techniques to analyze existing system properties and apply advanced machine learning models to learn from those properties. Such models can then be used to help automate development, testing, and debugging of real-world software.

Linux-based unikernel

A unikernel is a single bootable image consisting of user code linked together with additional components that provide kernel-level functionality, such as opening files. The resulting program can boot and run on its own as a single process, in a single address space, at an elevated privilege level without the need for a conventional operating system. This fusion of application and kernel components is very lightweight and can have performance, security, and other advantages. There are a number of problems with building unikernels from a specialized operating system. This project aims to add unikernel capabilities into the same source code tree as regular Linux. Linux already supports a wide range of architectures and can be built in different ways depending on the target use case.

A considerable team, including BU professor Orran Krieger, has been working on this project. An initial prototype came together fairly quickly, and potential performance advantages—including up to 33% improvement on a Redis database workload—have been demonstrated. The team is currently working to see whether the relatively modest amount of code (1,500 lines) needed to implement the necessary changes to Linux can be incorporated into a mainstream Linux kernel repository.

Image provenance analysis

Readers are doubtless aware that it’s increasingly easy to create composite or otherwise altered images with the intent of spreading malicious, untrue information intended to influence behavior. Yet there are currently no turnkey solutions to detect when this is happening. Detection can be treated as a graph construction problem, where the vertices are components of photographs and the edges are how images connect to other images.
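As a rough illustration of the graph framing, the sketch below stores images as vertices and "shares content with" relations as undirected edges, then walks the graph to collect everything connected to a suspect image. This is an assumption-laden toy, not the researchers' method or the pyIFD API: the `ProvenanceGraph` type, its methods, and the file names are all hypothetical, and a plain breadth-first search stands in for real graph clustering.

```rust
use std::collections::{BTreeMap, BTreeSet, VecDeque};

/// Toy provenance graph: vertices are image names, and an undirected edge
/// means two images are believed to share content. (Hypothetical type.)
struct ProvenanceGraph {
    neighbors: BTreeMap<String, BTreeSet<String>>,
}

impl ProvenanceGraph {
    fn new() -> Self {
        ProvenanceGraph { neighbors: BTreeMap::new() }
    }

    /// Records that images `a` and `b` are related, in both directions.
    fn add_relation(&mut self, a: &str, b: &str) {
        self.neighbors.entry(a.to_string()).or_default().insert(b.to_string());
        self.neighbors.entry(b.to_string()).or_default().insert(a.to_string());
    }

    /// Breadth-first search: every image reachable from `start`, including
    /// `start` itself, in sorted order. Unknown images give an empty list.
    fn related_to(&self, start: &str) -> Vec<String> {
        let mut seen = BTreeSet::new();
        let mut queue = VecDeque::new();
        if self.neighbors.contains_key(start) {
            seen.insert(start.to_string());
            queue.push_back(start.to_string());
        }
        while let Some(current) = queue.pop_front() {
            for next in &self.neighbors[&current] {
                // `insert` returns true only the first time we see `next`.
                if seen.insert(next.clone()) {
                    queue.push_back(next.clone());
                }
            }
        }
        seen.into_iter().collect()
    }
}

fn main() {
    let mut graph = ProvenanceGraph::new();
    graph.add_relation("beach.jpg", "composite.jpg");
    graph.add_relation("crowd.jpg", "composite.jpg");
    graph.add_relation("cat.jpg", "cat_cropped.jpg");
    // prints ["beach.jpg", "composite.jpg", "crowd.jpg"]
    println!("{:?}", graph.related_to("composite.jpg"));
}
```

In a real system the hard part is deciding which edges exist at all, that is, detecting that two images share content. Here that step is simply assumed as input.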

The primary focus of the research by professors Walter Scheirer of the University of Notre Dame and Daniel Moreira of Loyola University, with Red Hatter Jason Schlessman, is finding related images from specific datasets or the Internet. Graph clustering has been the primary technique they’ve used. Among the project’s goals is to see whether there’s also the potential to look at metadata or other sources of information. The upstream pyIFD project has come out of this research.

The above is just a small sample of the many innovative research projects Red Hat Research is involved with. I encourage you to head over to the Red Hat Research projects page to see all the other exciting work going on.

This article was first published on October 27, 2022.