The Internet of Things brings new opportunities and new challenges for mission-critical applications where lives are at stake. Systematic testing can help.

The Internet of Things (IoT) has significantly increased the capabilities of mission-critical systems in many domains. Integrated rescue systems, healthcare, defense, energy, and transportation all benefit from the IoT, which enables faster system reactions and better functionality for users. Instant situational overview, speedier information sharing, automated decisions, and shorter response times are just some of the possible enhancements.

A fundamental vulnerability of IoT systems is their reliance on data networks. IoT typically refers to devices using the public Internet; however, some critical or defense systems use closed, more secure networks instead. Interruptions to network connectivity can happen in either environment for various reasons that can’t be fully eliminated. Instead, IoT systems must be designed to keep working even when network connections are weak or disrupted. Viable testing models for mission-critical IoT systems are essential for making them usable in real-world settings.

This article introduces a technique for limited network connectivity testing and test case generation developed as part of the Quality Assurance for Internet of Things Technology project. This joint project of industry engineers and the Faculty of Electrical Engineering, Czech Technical University in Prague (FEE CTU), is funded by the Technology Agency of the Czech Republic and also led to the development of the open source test-automation framework PatrIoT.

IoT in combat

The initial version of the body sensors in the Digital Triage Assistant (DTA) project. A FlexiGuard solution by the team from the Faculty of Biomedical Engineering, CTU in Prague, is currently used. These sensors will be replaced by a smart-textile-based variant or by sensors integrated directly into the ballistic protection.

A Czech Army rescue vehicle is coming to pick up the wounded soldiers during DTA testing.

Testing of DTA in summer 2021, Czech Republic. Soldiers in the unit are securing the area, and a Combat Lifesaver (CLS) starts examining a wounded soldier (this is simulated during the exercise).

The new opportunities afforded by IoT bring with them an increase in the complexity of mission-critical systems. The system attack surface grows along with this complexity, as does the possibility of defects hidden in the system. For critical systems relying on a data network in particular, such defects may have serious consequences. Network signals can be weak due to energy limitations or terrain; in the case of defense systems, the signal can be jammed by the enemy, or parts of the system may be destroyed. Even in these scenarios, the system must be reliable enough to keep working, and this reliability must be properly tested. It’s a critical system – lives might depend on its correct functionality!

For example, the Digital Triage Assistant (DTA), designed for military combat environments, is a potentially life-saving technology that must function in settings where disruptions are likely to occur. The DTA is a joint project of the System Testing IntelLigent lab at CTU in Prague, the NATO Allied Command Transformation Innovation Hub, the Czech Army, the University of Defense (Brno, CZ), Johns Hopkins University, and other partners. This project creates a sensor network collecting data about soldiers’ vital signs to maximize their survival chances if they are wounded. The data are aggregated to a mobile back-end server, and soldier and unit status is estimated. Then the data are provided to different roles. A Combat Lifesaver wearing augmented reality glasses will see the positions and status of wounded mates through the forest or smoke. Unit commanders can see the positions of their soldiers in a map application. A surgeon waiting for a medevac to come to a dressing station can be better prepared by learning some indicative information about a soldier’s health before they arrive to be examined.

Everything in this system can be mobile or disrupted. Soldiers move and can take cover, limiting the sensor signal. The back-end unit can be mounted to a vehicle and moved, and some units may require stealth mode. These are extreme examples, but in other critical IoT systems, network disruption situations happen on a daily basis. Weakly covered rural areas, no network coverage in tunnels in urban areas, cyberattacks, or even switching off a public mobile network for security reasons are all frequent causes of signal interruption.

Test case creation

How do we test an IoT system to be sure it works well in these situations? As test engineers, we are interested in two principal situations. What happens when network connectivity is interrupted? And what happens when connectivity is restored? We need to test both situations thoroughly.

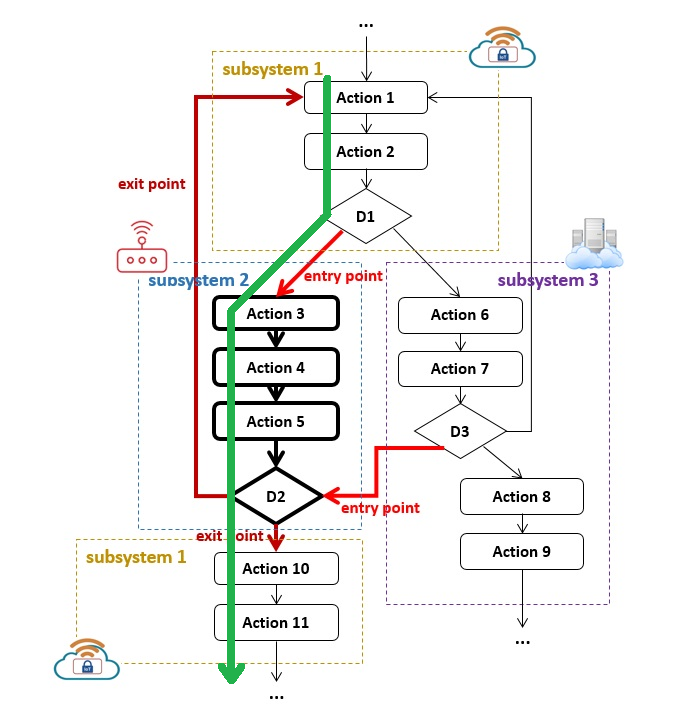

The approach we use is model-based testing. We model one aspect of the tested system (in this case, a process to be tested) using a notation that is very similar to a UML activity diagram. Figure 1 shows a model of a tested system. In this example, subsystems 1 and 3 are connected to a stable network. Subsystem 2 is mobile, and its network connectivity can be interrupted. An entry point marks the part of the process in which connectivity can be disrupted, and an exit point marks where connectivity is restored. In tests, we try multiple possible sequences of entry and exit points in the schema. A green arrow depicts one possible test case.
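To make the idea of entry and exit points concrete, here is a minimal sketch in Python. It is not the project's actual model format: the action names, the `PROCESS` graph, and the `LIMITED` set are hypothetical, chosen only to illustrate how a process model can be stored as a directed graph and how the edges crossing into and out of the limited-connectivity zone can be derived.

```python
from typing import Dict, List, Set, Tuple

Edge = Tuple[str, str]

# Hypothetical process model: each action maps to the actions that can follow it.
PROCESS: Dict[str, List[str]] = {
    "start": ["check_position"],
    "check_position": ["read_vitals", "report_idle"],
    "read_vitals": ["buffer"],
    "buffer": ["send"],
    "send": ["aggregate"],
    "report_idle": ["aggregate"],
    "aggregate": ["end"],
    "end": [],
}

# Hypothetical actions of the mobile subsystem, whose connectivity can be interrupted.
LIMITED: Set[str] = {"read_vitals", "buffer", "send"}


def boundary_edges(
    process: Dict[str, List[str]], limited: Set[str]
) -> Tuple[List[Edge], List[Edge]]:
    """Return (entry points, exit points) of the limited-connectivity zone."""
    entries: List[Edge] = []
    exits: List[Edge] = []
    for source, targets in process.items():
        for target in targets:
            if source not in limited and target in limited:
                entries.append((source, target))  # connectivity may be lost here
            elif source in limited and target not in limited:
                exits.append((source, target))  # connectivity is restored here
    return entries, exits


entries, exits = boundary_edges(PROCESS, LIMITED)
print("entry points:", entries)
print("exit points:", exits)
```

A test case in this representation is then a path from `start` to `end` that crosses at least one entry point and one exit point.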

The challenge we face is that we never know in which part of the system process such a disruption might occur. Exhaustively generating and running test cases for every scenario is cost-prohibitive, even with an automated test case generation tool. We developed a new approach, creating unique algorithms to generate optimal (that is, the most relevant) test cases using the open source Oxygen platform. In the future, we will add more algorithms and generalize the technique to component failover testing, which will apply to more situations in critical systems testing.

To generate the test cases, we created a set of algorithms based on different principles. In this scenario, a test case is a path through the system process. The portfolio comprises algorithms using classical graph traversal as well as AI-based approaches such as ant colony optimization and genetic algorithms. For example, in the ant colony algorithm, we simulate artificial ants mimicking real ant behavior in nature. When ants find a discarded sandwich in the forest and they like it, they make a trail from their nest to the food source. To keep their mates on this trail, they deposit a pheromone path. The algorithm simulates this behavior. Artificial ants walk through the tested system model and together compute a close-to-optimum set of tests.
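The pheromone idea can be sketched in a few lines of Python. This is a deliberately simplified illustration, not the algorithm used in the project: the graph, the `TARGET_EDGES` set, the reward function, and all parameter values are assumptions, and the sketch searches for a single path rather than a whole set of tests. Ants choose the next step with a probability proportional to the pheromone on each edge, pheromone evaporates after every round, and paths that cross more of the edges we want to test deposit more pheromone.

```python
import random
from typing import Dict, List, Set, Tuple

Edge = Tuple[str, str]

# Hypothetical acyclic process model (action -> possible next actions).
GRAPH: Dict[str, List[str]] = {
    "start": ["a", "b"],
    "a": ["c"],
    "b": ["c", "d"],
    "c": ["end"],
    "d": ["end"],
    "end": [],
}
# Hypothetical edges where connectivity is lost or restored; a good test crosses many.
TARGET_EDGES: Set[Edge] = {("start", "b"), ("b", "d"), ("d", "end")}


def score(path: List[str]) -> int:
    """Number of target edges the path traverses."""
    return len(set(zip(path, path[1:])) & TARGET_EDGES)


def walk(graph: Dict[str, List[str]], pheromone: Dict[Edge, float],
         rng: random.Random) -> List[str]:
    """One ant walks from 'start' until it reaches an action with no successor."""
    node, path = "start", ["start"]
    while graph[node]:
        successors = graph[node]
        weights = [pheromone[(node, nxt)] for nxt in successors]
        node = rng.choices(successors, weights=weights)[0]
        path.append(node)
    return path


def ant_colony(graph: Dict[str, List[str]], ants: int = 10, rounds: int = 30,
               evaporation: float = 0.3, seed: int = 1) -> List[str]:
    rng = random.Random(seed)
    pheromone = {(u, v): 1.0 for u, vs in graph.items() for v in vs}
    best: List[str] = []
    for _ in range(rounds):
        paths = [walk(graph, pheromone, rng) for _ in range(ants)]
        # Evaporation: old trails fade, but never below a small floor.
        for edge in pheromone:
            pheromone[edge] = max(pheromone[edge] * (1.0 - evaporation), 0.01)
        # Deposit: paths crossing more target edges leave more pheromone.
        for path in paths:
            reward = score(path)
            for edge in zip(path, path[1:]):
                pheromone[edge] += reward
            if not best or reward > score(best):
                best = path
    return best


best_path = ant_colony(GRAPH)
print(best_path, "covers", score(best_path), "target edges")
```

Like any heuristic of this kind, the sketch can settle on a good but not best path, which is why the result is described as close to optimum rather than optimal.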

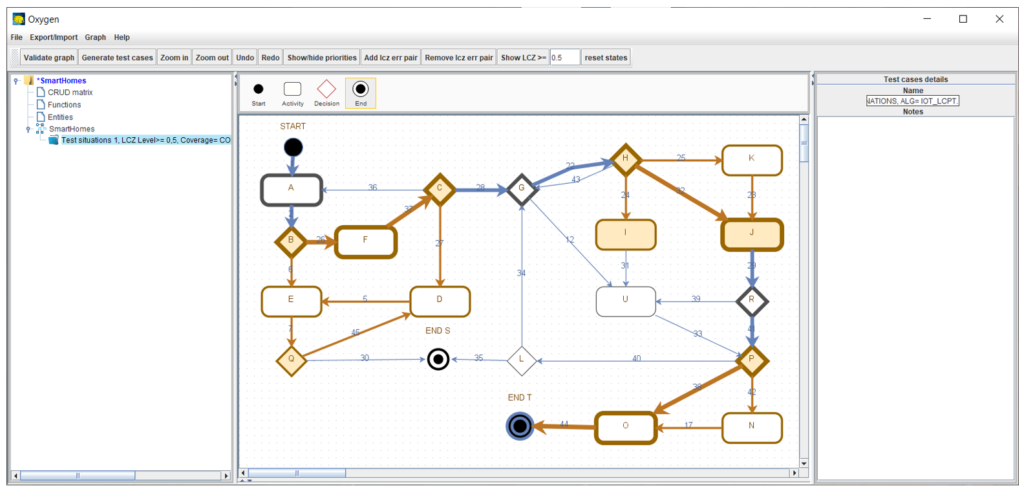

In the model, we estimate the probability that individual system components will be disconnected from the network. Using a threshold, we then model realistic situations in which this happens. To guarantee the strength of generated test cases, we use four levels of so-called test coverage criteria, a set of rules that the test cases must satisfy. Figure 2 is an example of tested system processes in Oxygen. Parts of the process likely to be disconnected from a network during the system run are shown in brown. When the test cases are computed, they can also be shown in the model. In this example, a test case is the bold path through the schema.
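The thresholding step can be illustrated with a short Python sketch. The action names and probability values below are invented for illustration and do not come from the project. Each threshold selects a different set of actions treated as running under limited connectivity, so each threshold corresponds to a different simulated situation.

```python
from typing import Dict, Set

# Hypothetical estimated probabilities that each action runs without connectivity.
OUTAGE_PROBABILITY: Dict[str, float] = {
    "read_vitals": 0.6,
    "buffer": 0.6,
    "send": 0.8,
    "aggregate": 0.1,
    "show_on_map": 0.05,
}


def limited_zone(probabilities: Dict[str, float], threshold: float) -> Set[str]:
    """Actions whose outage probability reaches the threshold."""
    return {action for action, p in probabilities.items() if p >= threshold}


# A lower threshold models a more pessimistic situation with a larger outage zone.
for threshold in (0.5, 0.7):
    print(threshold, sorted(limited_zone(OUTAGE_PROBABILITY, threshold)))
```

The resulting set plays the role of the highlighted parts of the model: test cases are generated so that they pass through it.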

For example, in the DTA project, we tested a situation in which a soldier’s ballistic protection, combined with hilly terrain, weakened the sensor’s radio signal. Data flow became intermittent, and this exposed suboptimal server-side code: it took an unnecessarily long timeout to restore the soldier’s position in the map application once the signal was stronger. Because such disconnections can happen in many parts and variants of the process, it is much more effective to model the process and test these possibilities systematically than to try to simulate these situations randomly.

Implementation

Test engineers do not need to consider these algorithmic details to make use of the technique. From the engineers’ viewpoint, they model a system process in a graphical user interface, add information about the probability of network connectivity outage, simulate a particular situation, and let the machine do all the computations. When the computation finishes, they can visualize the produced test cases in the model, which gives a good overview of the tests. The test cases can be exported in open formats based on XML, CSV, and JSON to be easily loaded into a test management or test automation tool.
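As a rough illustration of such an export, the following Python sketch serializes test cases to JSON and CSV using only the standard library. The field names (`id`, `path`, `step`, `action`) and the test case contents are assumptions made for this example; they do not reflect the actual export schema of the tool.

```python
import csv
import io
import json
from typing import Dict, List

# Hypothetical generated test cases: each one is a path through the process model.
test_cases: List[Dict] = [
    {"id": "TC-1", "path": ["start", "read_vitals", "send", "aggregate", "end"]},
    {"id": "TC-2", "path": ["start", "report_idle", "aggregate", "end"]},
]


def to_json(cases: List[Dict]) -> str:
    """Serialize the test cases as one JSON document."""
    return json.dumps(cases, indent=2)


def to_csv(cases: List[Dict]) -> str:
    """Serialize the test cases as CSV, one row per test step."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["id", "step", "action"])
    for case in cases:
        for step, action in enumerate(case["path"], start=1):
            writer.writerow([case["id"], step, action])
    return buffer.getvalue()


print(to_json(test_cases))
print(to_csv(test_cases))
```

The one-row-per-step CSV layout is a design choice made here because many test management tools import test steps as rows; a different target tool may need a different layout.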

With this systematic approach to testing and generating test cases, we can greatly increase the confidence that a limited network will not cause unexpected problems in the real-world operation of an IoT system.

Acknowledgments

Photo source: NATO Multimedia