Things at Red Hat Research have been racing forward lately as we begin seeing the results of work we started years ago. Already in 2023, we have successfully completed the OpenShift deployment at the New England Research Cloud, the Unikernel Linux paper was accepted at EuroSys 2023 (the premier conference on system software research), and many other exciting research results are approaching publication. So why pause now to reflect? Leaving aside the human temptation to group things in fives, RHRQ editor Shaun Strohmer and I felt it was important not to let much more time go by without reviewing the accumulated work of the last five years to see what we could learn from our successes, and even our failures. So we invite you, our readers, to join us for that review.

Before we get to the retrospective, however, let me review our regular technical section. In this issue we feature three interesting research results and a piece on a new way of composing instructional material for the college classroom. I’ll start with the last of these: I believe this new project, called the Open Education Platform, has the potential to change the way we teach at the university level at least as much as the move from chalk to electronic slides did, and hopefully with better effect. Developed by Boston University professor Jonathan Appavoo and improved on by Red Hat Research programmers, this new method uses a set of extensions to Jupyter Notebooks, an interactive computing tool widely used in data science and machine learning, to create interactive “slides” for teaching.

Much more than a simple slide deck, these presentations have text, graphics, and live terminal windows and editors to allow students to do their work right in the context of the lecture a professor is giving. They can also save that work directly to a git repository so that it can be retrieved and expanded on later. The entire set of course materials resides in a git repository as well, so it can be open sourced and added to or updated following standard open source practices. The potential for real collaboration around course materials is finally here with this work, and I strongly encourage you to check out both the article and the tools themselves.

Getting back to those research results though: we seem to be focusing on optimization in this issue, and we have two exciting developments to report. The first is about using machine learning models for performance tuning at the Linux kernel and device driver level. I’ve been told that a typical modern network interface card (NIC) has something like 5,000 tuneable “knobs,” of which only a few hundred are even exposed for tuning in the Linux kernel, and those are mostly set by general-case heuristics that may be wildly inappropriate for the workload being run. In his thesis work on the new project BayOp, Boston University PhD Han Dong applies Bayesian optimization, a machine learning technique, to the tuning of just two of those parameters, replacing the heuristics that were there before. He has found that dynamically optimized tuning can dramatically reduce a NIC’s power usage without affecting its throughput, simply by smoothing out the incoming packet flow. While this is impressive in itself, the point is not simply that we can make NICs more efficient with this specific tool; rather, the work shows us that significant gains are available across the set of tuneable parameters in an OS when we bring machine-learning-based optimization to bear.

Continuing down the optimization path is a piece that works at a much higher level of abstraction: a model and a set of tools for optimizing an entire application’s use of cloud VMs to provide the required performance at the lowest possible cost. The Cloud Cost Optimizer, detailed in RHR researcher Ilya Kolchinsky’s article of the same name, gathers updates on cloud pricing, including spot prices, and produces recommended deployment configurations for a given cloud app. Researchers, students, and even commercial users looking to keep their cloud spending under control while getting the best possible performance out of their cloud applications should find the Cloud Cost Optimizer useful. It is also yet another interesting use of machine learning to solve an otherwise difficult systems problem.

I promised a retrospective in this special magazine issue, and I don’t think you will be disappointed. Our “Perspectives” section, special for this anniversary issue, is a detailed look back at what we’ve done at Red Hat Research since we launched it in 2018. From security to reconfigurable hardware to our own production cloud, we’ve reviewed all the high points of the last five years and looked toward promising future developments. To be honest, I was astonished when I read through all the surveys and remembered everything we’ve done. It has been a very busy time filled with a lot of really interesting work, and I’m grateful to have been part of it.

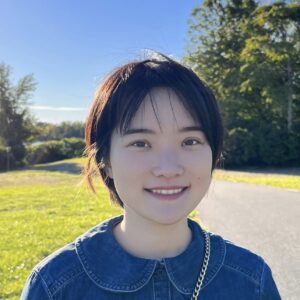

On that note, it seems like a good idea to thank the people who got us to this point. They include the whole Red Hat Research team, who spend their time not only working on code and submitting it upstream, but also writing articles and papers so that people know about it; our editor Shaun Strohmer, who keeps this magazine on track at a very high standard; our research partners, in particular Dr. Orran Krieger of Boston University, who keeps us all inspired; and most importantly Paul Cormier, the Red Hat president, CEO, and now Chairman, who saw an opportunity to bring research ideas into open source and gave me the mandate and the funding to pursue it. Paul and Orran grace the cover of this issue, and deservedly so, because without them none of this would have happened. The whole RHR team and I will remain in their debt.