This year’s conference showcased the many flavors and functions of edge computing.

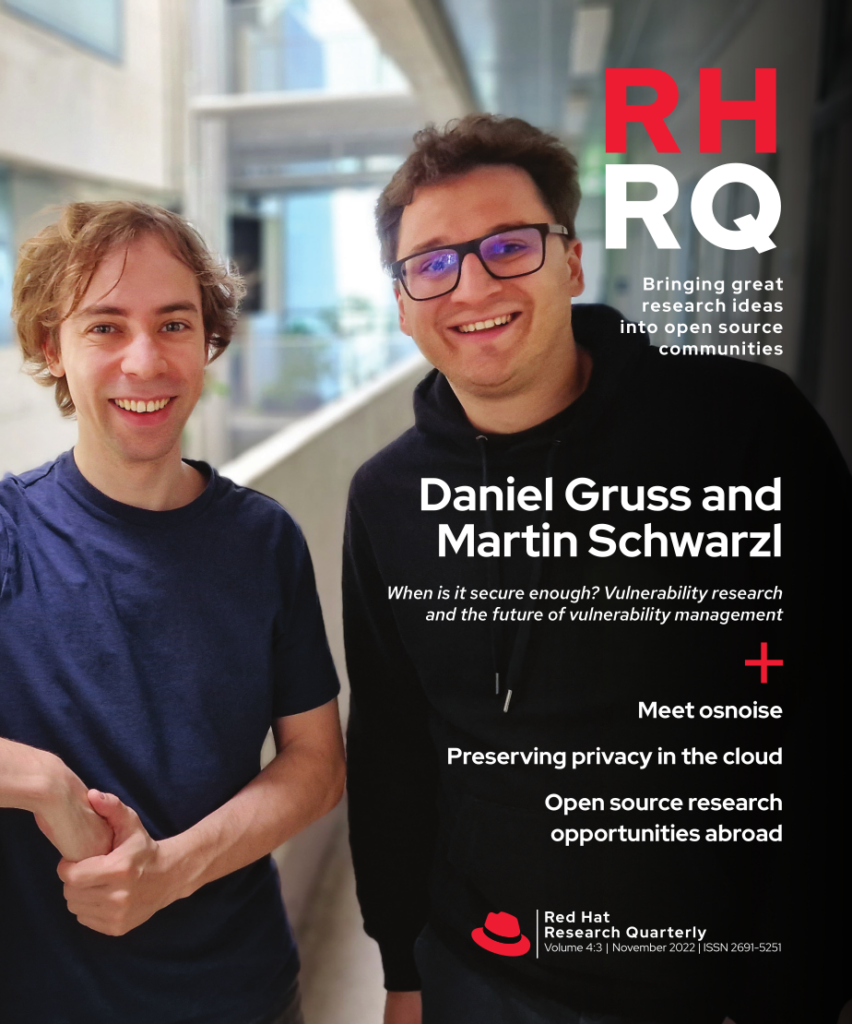

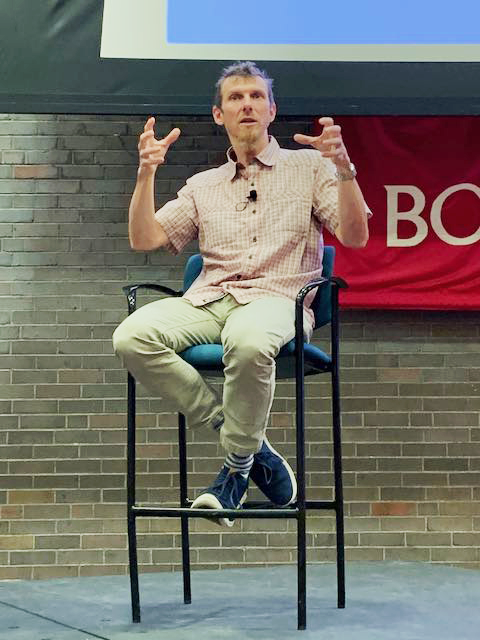

Now in its fifth year, DevConf.US was back in person at Boston University this past August. Aimed at community and professional contributors to free and open source technologies, DevConf.US included talks and plenty of informal discussions about the usual wide range of topics, including containers, serverless, GitOps, open hardware, growing open source communities, supply chain security, and more. However, one of the most discussed topics—especially in Red Hat CTO Chris Wright’s keynote—was edge computing.

As Chris put it, edge is a “big distributed computing problem that’s about bringing compute closer to producers and consumers of data.” It’s driven mainly by the massive amounts of data collected from sensors and the desire to process and act on that data, much of which can’t be sent back to a cloud for cost, bandwidth, or latency reasons. (Regulations also often restrict where data can be stored.)

The range of edge talks made clear that edge isn’t a singular thing. To a retail chain, edge may mean what used to be called remote-office/branch-office (ROBO). To a telco, a platform for software-defined networking. To an automaker, computers in a car. To a manufacturer, industrial control systems.

Like any large distributed system, edge architectures can get complicated. Red Hat’s Ishu Verma and Chronosphere’s Eric Schabell introduced portfolio architectures, which provide a common repeatable process, visual language and toolset, presentations, and architecture diagrams for edge and other complex deployments. They document successful use cases deployed at multiple customer sites using a variety of open source technologies. The architectures are themselves open source, and they document, for example, a 5G Core deployment with a logical view and a schema or physical view—along with a more detailed look at the individual nodes or services.

One common theme in many edge discussions is the need to work with resource-constrained hardware, given that edge installations often have tight cost, power, and space budgets. They may also have to deal with intermittent network connectivity. Red Hat’s Hugo Guerrero discussed how frameworks and runtimes like Quarkus allow existing cloud developers to reuse their Java knowledge to produce native binaries that can run on resource-constrained devices. He also covered how event-driven architecture helps applications continue running locally and then synchronize data when connectivity is available.
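To make the "keep running locally, synchronize later" idea concrete, here is a minimal store-and-forward sketch. It is written in Python for brevity rather than in Quarkus or Java, and it is not taken from the talk. The class name `StoreAndForwardBuffer`, the `send` callback, and the `max_events` limit are all hypothetical choices made for illustration.

```python
from collections import deque
from typing import Callable, Deque, Dict

Event = Dict[str, object]


class StoreAndForwardBuffer:
    """Buffers events locally and forwards them once an uplink is available."""

    def __init__(self, send: Callable[[Event], None], max_events: int = 1000) -> None:
        # `send` is a hypothetical uplink callback that raises ConnectionError
        # while the device is offline.
        self._send = send
        # Bounded queue: on a constrained device, the oldest events are dropped first.
        self._pending: Deque[Event] = deque(maxlen=max_events)

    def record(self, event: Event) -> None:
        """Always succeeds locally, whether or not the network is up."""
        self._pending.append(event)

    def flush(self) -> int:
        """Forward pending events in order; stop at the first failure."""
        sent = 0
        while self._pending:
            event = self._pending[0]
            try:
                self._send(event)
            except ConnectionError:
                break  # still offline; keep the event for the next attempt
            self._pending.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._pending)
```

The application calls `record` as events happen and calls `flush` periodically or when it detects connectivity. Bounding the queue is one possible trade-off for devices with little memory; a real deployment might instead persist events to disk or hand them to a message broker.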

Another example of working with small devices, such as a Raspberry Pi, was covered by Red Hat’s Jordi Gil in his talk about Project Flotta, which provides edge device management for Kubernetes workloads. Containers on the edge was a popular topic and an area of active engineering investigation. For example, there are slimmed-down versions of Kubernetes for specific edge use cases, and further work continues. Multiple variants are needed because different use cases have different assumptions and requirements around factors like available resources, network reliability, and high availability.


But is Kubernetes, which even in slimmed-down form consumes a certain amount of resources, even needed? Both Sally O’Malley and Dan Walsh of Red Hat provided a look at some of the current explorations into managing edge workloads in the most constrained environments using Podman without Kubernetes.

Managing edge devices is not an easy problem. Dan raised multiple questions that have to be answered: How do I update hundreds of thousands of nodes? What happens if the update fails? How do I update all of the applications on these nodes? How do I add applications to these nodes, or remove them, after they have been distributed? How do I make sure that these computers are safe and secure? He also described some approaches his team is working on to find answers to these management challenges without compromising the security of the edge devices.

Sally described one of these approaches in her talk. FetchIt is a tool for remotely managing workloads with Git and Podman but without requiring Kubernetes. Podman provides a socket to deploy, stop, and remove containers. This socket can be enabled for regular users without needing privilege escalation. Combining Git, Podman, and systemd, FetchIt offers a solution for remotely managing machines and automatically updating systems and applications.
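The Git-driven approach described above can be pictured as a reconcile step: compare the state declared in a repository with what is actually running, then derive the deploy and remove actions needed to close the gap. The Python sketch below shows only that comparison. It is not FetchIt's implementation and makes no Podman or Git calls. The function name `plan_actions` and the name-to-image mapping are assumptions made for illustration.

```python
from typing import Dict, List, Tuple

# Maps a container name to the image it should run (or is running).
State = Dict[str, str]
Action = Tuple[str, str]  # (verb, container name)


def plan_actions(desired: State, running: State) -> List[Action]:
    """Return the steps that move `running` toward `desired`."""
    actions: List[Action] = []
    for name in sorted(running):
        if name not in desired:
            # No longer declared in the repository: remove it.
            actions.append(("remove", name))
        elif running[name] != desired[name]:
            # Image changed in the repository: replace the container.
            actions.append(("remove", name))
            actions.append(("deploy", name))
    for name in sorted(desired):
        if name not in running:
            # Newly declared in the repository: deploy it.
            actions.append(("deploy", name))
    return actions
```

In a real tool, `desired` would be parsed from files in a Git checkout and `running` would come from the container engine, with each action executed over the engine's socket. Keeping the planning step a pure function makes it easy to test without a device.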

Edge technology reflects the IT industry’s long-term pendulum swing from centralization to decentralization and back again. And, as Chris Wright put it, “Today, we’re swinging towards more decentralization.”