Honeywell and Red Hat have been collaborating with both the Faculty of Informatics from Masaryk University and the Faculty of Information Technology from Brno University of Technology on verification research for many years. These universities made Honeywell and Red Hat aware that they share the same business need: an automated detector of software defects. We joined our forces and started a three-year Automated Formal Verification project (AUFOVER) in 2019, co-funded by the Epsilon program from the Technology Agency of Czech Republic. Here, we share our results so far.

Both Red Hat and Honeywell focus on detecting safety defects in C and C++ source codes, like arithmetic errors, illegal memory accesses, or control flow errors. While Red Hat is focused on huge code coverage and verification speed, Honeywell needs to make sure that its safety-critical software does not have even very rare bugs that are impossible to detect by commonly used unsound static analyzers.

All four AUFOVER partners are developing the following verification tools: Verification Server and Client Application, Scmock Plugins, DIVINE, and Symbiotic and Testos frameworks. While university tools DIVINE, Symbiotic, and Testos are based on formal mathematical methods, industrial tools encapsulate these into unifying distributed platforms that automate executions of the underlying tools. Honeywell tools are integrated based on Open Services for Lifecycle Collaboration specifications, which provides interoperability by defining how to integrate tools using linked data and REST API.

While formal verification tools are able to detect various defects, they are very difficult to apply for most developers—if they even know about their existence. Therefore, our approach is to offer automated formal verification as a service and let the formal verification tools compete as to which can detect the most defects fastest and with highest confidence. Then the service aggregates all results and interprets the defects for the engineers. This approach is superior to running the complementary tools individually, since even the formal methods experts cannot predict which set of tools will be the most efficient and which tool parameters are the most optimal.

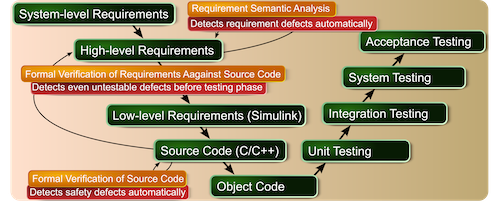

We focus on providing the following fully automated verification services:

Formal verification of source code: detection of defects that are failures in general, irrespective of any requirement, such as divisions by zero, buffer overflows, dereferences of null/dangling pointers, data races, or deadlocks. The problem with state of the art unsound static analyzers is that they report large numbers of false positives without guaranteeing that defects will be detected. Analyzing these potential defects is very time consuming. This is non-value-added activity for the developers—and they commonly introduce new errors during their trial to remove the false defect. This often creates distrust for these tools, so that developers may stop using them entirely.

Sound formal tools, on the other hand, are more difficult to automate and do not scale well. This is one of the reasons why running all the verification tools at once in parallel is a huge benefit. Formal tools will also automatically analyze potential defects from the unsound static analyzers and will remove false positives using witness checkers or witness validators. One possible approach is to run the potential defects through the Symbiotic tool, which slices the source code to relevant parts only and removes some false positives. This, in turn, increases the trustworthiness of the resulting verification report, while keeping the overall scalability of the formal verification.

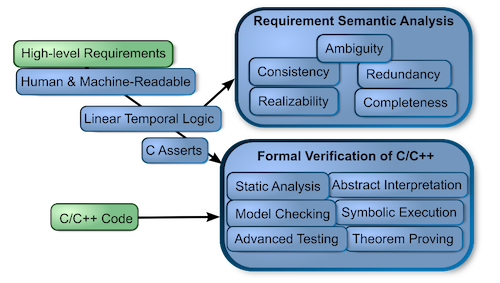

Requirement semantic analysis: detection of defects in high-level behavioral requirements. Formal specification is very difficult for the engineers to create and very expensive to maintain on top of human-readable requirements, which are mandatory for safety-critical domains like aerospace. We have learned that requirement patterns and especially special requirement standards that allow engineers to naturally author requirements that are both human and machine readable are key enablers.

Ending up with formal specification and using the specialized formal methods tools is not enough. Our system also needs to interpret the defects to the user and explain why it is a problem, using specific examples. For example, when a set of requirements could be realized by a system that ignores some requirement conditions, an engineer may not understand why that is a problem from seeing an artificially generated transition system with thousands of states.

We can detect the following defects:

Ambiguity: For example: “TrustVector1 or TrustVector2 has been less than 100 for 2 seconds” is an ambiguous part of a requirement. It is unclear if either condition should hold at any given time continuously for 2 seconds or if at least one condition should hold independently.

Inconsistency: A set of requirements is logically consistent when no subset is contradictory for any evaluation of variables in time.

Redundancy: A set of requirements implies another set, or some condition of a single requirement implies another.

Realizability: Requirements are realizable by a non-trivial system and relatively complete when a system can be created that satisfies all requirements, does not restrict any input on top of the restrictions already introduced by the requirements, and no output could remain constant forever from the very beginning.

Missing requirements: some behavior of a system is not constrained at all.

Formal verification of requirements against source code: verifies whether the source code satisfies the requirements optimally for any possible combination of input values in time. We automatically translate all the constrained formal requirements to Linear Temporal Logic, and we translate most requirements to transition logic with C Asserts that can be verified by most of the formal verification tools. Therefore, when any formal verification tool finds a combination of input values in time that result in assert failure, we can show this counterexample to an unsatisfied requirement or a part of a requirement to the user. Since we focus mostly on reactive systems, it makes sense to report to the engineer proof that the system satisfies given requirements for any possible execution of the system for at least a constrained time interval, since most of the complex systems properties cannot be completely proven.

We are benefiting from the already standardized API for the tools that compete in the Software Verification Competition (https://sv-comp.sosy-lab.org/) and similar competitions. In Software Verification Competition, Symbiotic and Predator (a part of Testos framework) tools consistently received the gold and silver medals for the last three years in the memory safety category. However, we also integrated several other tools, since some may outperform overall winners for specific systems. While advanced testing from Testos does not provide proofs and guarantees, it scales well and can find rare defects, for example using white noise insertion, other methods cannot, especially in parallel systems.

Benefiting from collaboration

In the first half year of this project, we evaluated these tools’ performance on various types of industrial systems. Honeywell appreciates that universities are improving and customizing their tools to enable formal verification of highly complex industrial systems. Universities benefit from getting feedback on which of their methods to focus on and which problems are most significant in industrial systems.

We often found that we did not need to report a bug or a need for improvement in the formal tools since Red Hat had already done it a few days earlier. However, this is not the main benefit of our cooperation. We value Red Hat experience with both common static analyzers that scale very well and normalization of verification reports.

There is a huge potential for growth of our automated distributed verification system. We plan to extend it with improved scheduling of verification tasks, automated test case generators, security auditing software, and verification augmented with artificial intelligence. There is also a possibility to offer our verification service for other companies and users. Our engineers would like it if the verification also generated how to fix the defects and even automatically generated the system from the requirements. While this is possible for some simple systems, it could be computationally infeasible for even a few requirements.

At the end of the project, we will demonstrate the benefits of the integration of formal verification into our software development lifecycle. The benefits from discovering defects as early as possible during requirement authoring are appreciated by the engineers the most. However, it is difficult to compute the cost savings from this effect. The main benefits are expected on projects where the verification service can be deployed seamlessly using continuous integration; that is, whenever the requirements or source code changes in the repository, the verification report is automatically created.