Red Hat Research Quarterly

Machine learning for operations: Can AI push analytics to the speed of software deployment?

RHRQ asked Professor Ayse Coskun of the Electrical and Computer Engineering Department at Boston University to sit down for an interview with Red Hatter Marcel Hild. Professor Coskun is one of the Principal Investigators on the project AI for Cloud Ops, which recently won a $1 million Red Hat Collaboratory Research Incubation Award. Their conversation delves into the need for operations-focused research on real-world systems and the capacity of more mature AI technology to solve problems on a large scale.

Marcel Hild: Let’s start with learning a bit about you and how you got where you are now. You grew up in Turkey and then moved over to the United States, yes?

Ayse Coskun: Yes. I went to college in Turkey as well, to Sabanci University in Istanbul, and I completed a degree in microelectronics engineering. Originally I wanted to pursue a PhD in circuit design, because that was familiar, but one thing led to another and I landed in the University of California San Diego’s Computer Science and Engineering department. I started working at the intersection of systems, electronic design automation (EDA), and computer architecture, which is where most of my work still lies.

My PhD focused on finding better ways to manage the temperature and energy efficiency of processors, particularly multicore processors. For three years of my PhD I was also working part-time at Sun Microsystems (later acquired by Oracle). It was a really sweet spot: I could do my research but still learn about industry, get involved in patents, and get involved in product-related things. Sun was a unique company that designed hardware, operating systems, and applications, so it gave me a lot of visibility into different layers of the system. After graduation, I became a professor at BU, and I’ve been here since 2009. Some of my research is still looking at energy efficiency, but at different levels of computer systems.

Over the last decade, I started to work on cloud and large-scale systems broadly. I created this new research thread in my group where we started looking into datacenters and energy issues, but eventually my research evolved into “analytics” on large-scale computing systems, like those serving the cloud. Improving energy efficiency is a significant goal, but there are other interesting problems too, like performance issues or vulnerabilities.

Marcel Hild: I like this transition from studying integrated circuits to focusing on energy efficiency, eventually ending up in artificial intelligence and algorithms to improve something. In the end, it all boils down to making things more efficient.

Red Hat’s Collaboratory, which is supporting the AI for Cloud Ops project, is a partnership between Red Hat and BU. Red Hat benefits by having a positive impact on the projects we’re involved in and providing some exposure to the research community. Is there something you hope to gain by working with Red Hat or with industry in general?

Ayse Coskun: Definitely. The funding component is an enabler, so we can have a team dedicated to working on these cool problems. But in the end, what we want is innovative research and impact. Having an industry partner really gives us an edge, because we get feedback on the problems and constraints that are most relevant. Getting this kind of feedback helps us solve actual problems for society, and we can see immediate results on actual products.

Normally it takes a long cycle for a research idea to get on to a system where it meets real people. This is by design: as researchers, we work on problems that are not immediately the next step in a product cycle. But the cloud is a very specific area. Things change so fast—people design and deploy new tools, applications, and software stacks all the time. Having industry support enables you to see how these research outcomes will work on real systems. Otherwise, we might find a cool technique, but by the time somebody in industry looks at it, it could be obsolete.

That’s an exciting factor, and it excites my team and my students too. PhD students want to solve problems—they want to make a dent in this world. When they see the industry interested in what they’re doing, when they see their method working on an actual system solving a problem, they get super enthusiastic about doing more.

Marcel Hild: That’s also what excites me when working out of the Office of the CTO and with academia: we have the freedom to research without product constraints, but the work always comes back to something that can be applied. We don’t just reinvent an algorithm for the tenth time; we actually solve problems. That’s how I came to the definition of AIOps that I now hold: AIOps is not just a product, but a cultural change where we apply AI and machine learning tools to the realm of operations.

Some of the recurring themes in your proposal sound similar to AIOps. Can you talk about why we need AI these days in the domain of operations and in the domain of clouds? Was there something that changed fundamentally?

Ayse Coskun: That’s a great question. We can run a cloud without AI. But several things are now calling for AI and better, more automated approaches. And there are some enablers: we are at a point where AI, as well as the tools and the techniques that come with AI, have reached a certain level of maturity. There’s more expertise all around, and it’s possible to do more complex applied things more easily.

The other side is the cloud, and the multiple layers of software running on top of each cloud instance. Things change so fast. We have these continuous integration, continuous deployment (CI/CD) cycles, where people are constantly changing something related to their code. With a traditional approach, you might have an expert who understands the system well, and they write really good code to manage some resource or fix a problem.

But things become so fragile in an environment where software is updated multiple times a day. You can write some script to look for a file that exists, but then the version of the software that kicked in today doesn’t have that file anymore, or the file size changed. Now your script will need to be updated too. Relying on human-involved practices can be very costly. If you have an expert, that means they have valuable information. You are paying those people a lot of money to maintain systems and debug problems.

It’s inefficient. This kind of expertise doesn’t scale. I can’t suddenly train up a hundred people to manage a new system. That’s why it’s the right time to bring more automation into the operations of the cloud.

One clarification: when people talk about machine learning and the cloud, they are often talking about running machine learning applications on the cloud. Here, I’m talking about applying machine learning to cloud operations: how resources and software are managed, how vulnerabilities are determined, how compliance checks are processed, and things like that. Other fields have seen a lot more applied machine learning already. We are recognizing images with AI; there’s AI in robotics and in our shopping assistants. But when we look at how computers themselves are managed, it’s still heavily reliant on human expertise.

Marcel Hild: Some of the recurring themes in your proposal were performance, resilience, and security. You also broke it down into two main areas: software discovery and runtime analysis. Should we start with software discovery?

Ayse Coskun: Yes, but first let me take a step back. What we want to achieve is bringing AI to cloud operations. That’s a broad problem. There are many things one can do, and we want to do this because we want to find performance problems quickly. We want to find vulnerabilities quickly. We want to make systems more resilient: if something is crashing, we want to determine what’s causing it and eliminate it quickly. Software discovery looks into what’s happening in the system in terms of what specific software is running.

Here’s an example. We talked about CI/CD, where people keep changing their code, deploying it on the cloud, and running it. Sometimes this happens through Jupyter Notebooks: I’m doing this data science application, I change a little bit in my code, and I send it and run it again. It used to take five minutes, but for some reason now it takes an hour. Or, I used to have everything working, now it’s crashing. Or, apparently I included a vulnerable application that has a known problem, and eventually it’s going to make me open to privacy leaks.

These are the kinds of things we want to determine right away, even before running the software when possible. Some of the work we’ve done looks into which files were created and modified during software installation. We have efficient techniques to create fingerprints of what’s going on in the system and use machine learning to determine, say, that a certain version of this application shouldn’t run on a certain cloud. Some techniques are also good at looking into the code. Maybe you just used a library or implemented something new in a function. We can get some signatures of that code and see if it is known to cause trouble.
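As an editorial illustration of the fingerprinting idea described above, here is a minimal Python sketch. It is not the team's implementation: the helper names (`snapshot`, `changeset`, `fingerprint`) and the choice of path components as features are assumptions made for this example. It records which files were created or modified between two snapshots of a directory and turns those paths into a bag of tokens that a classifier could, in principle, consume.

```python
import os
import tempfile
from collections import Counter


def snapshot(root):
    """Map each file under root (relative path) to its (size, mtime_ns)."""
    state = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            st = os.stat(path)
            state[os.path.relpath(path, root)] = (st.st_size, st.st_mtime_ns)
    return state


def changeset(before, after):
    """Return the sorted paths that were created or modified between snapshots."""
    return sorted(p for p, meta in after.items() if before.get(p) != meta)


def fingerprint(paths):
    """Hypothetical feature extraction: count the path components of changed files."""
    tokens = Counter()
    for p in paths:
        tokens.update(part for part in p.replace("\\", "/").split("/") if part)
    return tokens


if __name__ == "__main__":
    # Simulate an "installation" inside a throwaway directory.
    with tempfile.TemporaryDirectory() as root:
        before = snapshot(root)
        os.makedirs(os.path.join(root, "lib", "demo"))
        with open(os.path.join(root, "lib", "demo", "core.py"), "w") as f:
            f.write("x = 1\n")
        after = snapshot(root)
        print(fingerprint(changeset(before, after)))
```

In a real pipeline the token counts would be fed to a trained model; here they are only printed, since the training data and labels are outside the scope of the interview.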

Runtime analytics looks into what’s going on while your application is running on the cloud. Can we collect the right amount of information to understand why something slowed down? Can we get these cross-layer analytics—meaning the information collected from different layers of the cloud? And then can we mesh this data, structure it, and use it to understand if there’s a security breach, or malicious activity, or some problem in the network?

Then we can tie this information back to a specific problem. For instance, I made a change in my software, and now it’s slow. Runtime analytics can tell me what’s driving the latency problem. Then I can tell the developer, “Since you installed this library, or since you included this function in your code, it’s been causing this particular problem.”

This would have tremendous value and save a lot of cycles in debugging and system management effort. These examples can be expanded for performance problems, security problems, and resilience problems.

Marcel Hild: That sounds very complex. You’re not doing it just on your own; you have a team. How many people are on your team for this?

Ayse Coskun: We have three principal investigators (PIs). I’m leading the team. Gianluca Stringhini is an assistant professor here at BU and a system security expert. He brings a lot of value to the project because he has already been doing work that brings machine learning and analytics into security problems and privacy issues.

Alan Liu is also an assistant professor at BU. He’s a networks and systems researcher, so he’s going to bring his expertise in making efficient telemetry work for understanding problems on the network layer. Typically there’s no shortage of data: you can collect data from the application and from the system, you can also get data from the firmware/hardware. The problem is doing this efficiently. If you collect everything, who’s going to look at it? And what kind of overhead does that create? Alan’s work on network telemetry and “sketches” is a game changer, because he captures the relevant information while being very efficient about it.
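For readers unfamiliar with the term, a "sketch" in network telemetry is a compact probabilistic summary of a data stream. The count-min sketch below is one well-known example, shown here only as an editorial illustration; the interview does not say which sketch structures the project uses, and the `width` and `depth` values are arbitrary assumptions. It estimates how often each key (for instance, a source address) appears while using a fixed amount of memory, and it may overestimate a count but never underestimates it.

```python
import hashlib


class CountMinSketch:
    """Fixed-size frequency summary: estimates may be too high, never too low."""

    def __init__(self, width=256, depth=4):
        self.width = width
        self.depth = depth
        self.rows = [[0] * width for _ in range(depth)]

    def _index(self, row, key):
        # One independent hash per row, derived by salting BLAKE2b with the row number.
        digest = hashlib.blake2b(
            key.encode("utf-8"),
            digest_size=8,
            salt=row.to_bytes(8, "little"),
        ).digest()
        return int.from_bytes(digest, "little") % self.width

    def add(self, key, count=1):
        for row in range(self.depth):
            self.rows[row][self._index(row, key)] += count

    def estimate(self, key):
        # Taking the minimum across rows limits the damage done by hash collisions.
        return min(self.rows[row][self._index(row, key)] for row in range(self.depth))


if __name__ == "__main__":
    sketch = CountMinSketch()
    for _ in range(5):
        sketch.add("10.0.0.1")
    sketch.add("10.0.0.2")
    print(sketch.estimate("10.0.0.1"))
```

Memory use is `width * depth` counters regardless of how many distinct keys are seen, which is the efficiency trade-off the paragraph above alludes to.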

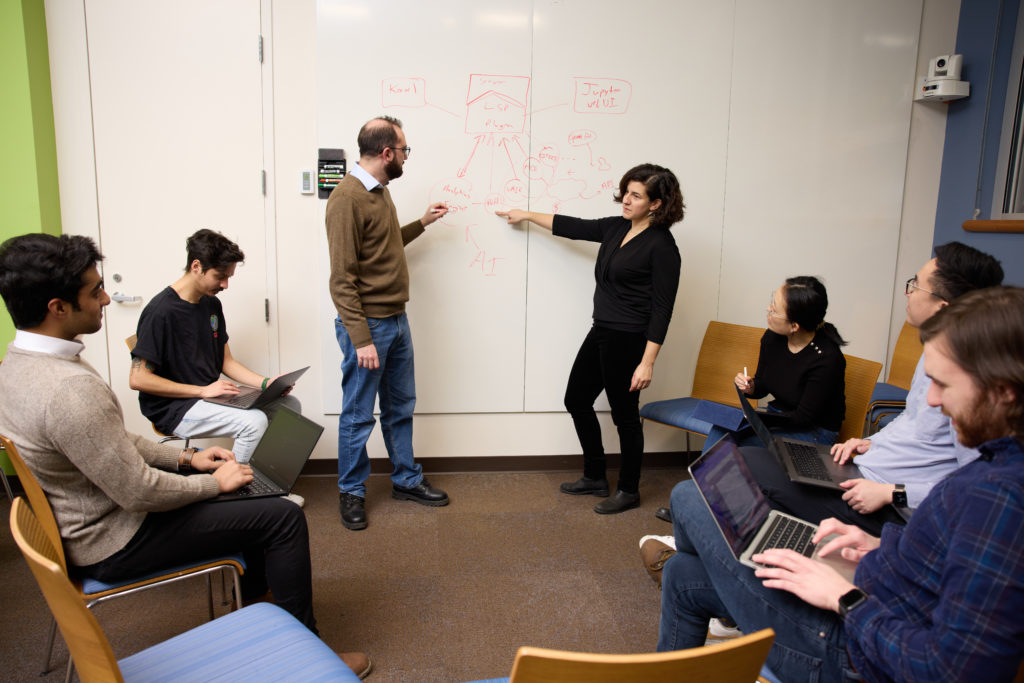

We’ll also have four PhD students on the project: Anthony Byrne, Mert Toslali, Saad Ullah, and Lesley Zhou. Anthony is already interning at Red Hat at the moment. Mert has done some really nice work with IBM Research on automating software experimentation on the cloud. We’ll have some undergraduates working as well. Brian Jung, an undergraduate student, is already working with us on building the first version of a software discovery analytics agent for Jupyter Notebooks.

Marcel Hild: Until now, you’ve been working on different puzzle pieces. Now you’re applying them to the complete stack and a living environment. What is one of the first outcomes you expect there? Will there be a unified user interface, or a unified proof of concept where everything comes together?

Ayse Coskun: True, and great questions. We played around quite a bit on these topics prior to this project. We learned some things, we had some ideas that didn’t work, and we saw some success stories. For instance, some of our initial work was focused mainly on virtual machines. Then it focused on containers, then microservices. Now we’ll look into the Operate First environment. Each of these changes brings some specific challenge. We have a lot of know-how, but we’ll need to make it work for these Operate First clouds.

One of the first things we want to demonstrate is a system visualization tool utilizing different kinds of data—cross-layer, telemetry, tracing, runtime—for the developer or system manager. We want to create an interface where this data is captured and then presented back through relevant APIs, so it’s easy for the developer, user, or administrator to query this data.

Another goal is related to software discovery. We have two tools that have already been released to the open source community over the last few years. One is Praxi, which determines whether a software installation has any vulnerable code or prohibited application in it. The other is ACE, or Approximate Concrete Execution. ACE executes functions (approximately) and creates signatures of them very quickly, then searches across libraries of functions known to be problematic, identifies them, and gives feedback to the user. Now, we want to implement and apply similar techniques for efficient searches of software. The efficiency comes from machine learning. Then we want to apply similar techniques for the bring-your-own-notebook concept. People come in with a Jupyter Notebook; they are writing some code. Through these APIs, we want to tell them, “Hey, you just installed something vulnerable, so remove it,” or “You are about to run something that’s known to be buggy.”
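The general pattern of "compute a signature, then look it up in a library of known-problematic functions" can be illustrated with a toy Python example. This is an editorial sketch and not how ACE works internally: here the signature is simply a hash of a function's outputs on a small, hypothetical set of probe inputs, and the "known problematic" library contains a single made-up entry. All names (`PROBE_INPUTS`, `behaviour_signature`, `KNOWN_PROBLEMATIC`, `check`) are assumptions introduced for this example.

```python
import hashlib

# Hypothetical probe inputs; a real system would choose these far more carefully.
PROBE_INPUTS = [0, 1, 2, 7, 100]


def behaviour_signature(func, probes=PROBE_INPUTS):
    """Hash the observed outputs of func on the probe inputs.

    Note: this actually calls func, so it is only safe for trusted demo code.
    """
    outputs = []
    for value in probes:
        try:
            outputs.append(repr(func(value)))
        except Exception as exc:
            outputs.append("raises:" + type(exc).__name__)
    return hashlib.sha256("|".join(outputs).encode("utf-8")).hexdigest()


def known_bad_double(x):
    """Stand-in for a function that is 'known to be problematic'."""
    return x + x


# Made-up library mapping signatures to advisory text.
KNOWN_PROBLEMATIC = {
    behaviour_signature(known_bad_double): "demo-advisory: known_bad_double",
}


def check(func):
    """Return the advisory for func if its signature is in the library, else None."""
    return KNOWN_PROBLEMATIC.get(behaviour_signature(func))


if __name__ == "__main__":
    def renamed_copy(y):
        return 2 * y

    print(check(renamed_copy))
```

Because the signature depends on behaviour rather than on names or source text, the renamed copy still matches the library entry, which is the property that makes signature lookups useful for spotting known-bad code.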

Our second year will focus more on getting everything to work together, including the analytics for security, fault diagnosis, and efficient cross-layer telemetry. Eventually we’d like to have a full deployment of our unified API and analytics engines on the cloud systems.

Marcel Hild: I like that you focus on the people that give you feedback. If you provide a visual tool for humans, you get immediate feedback on whether you are solving problems for them or just creating your personal echo chamber where the model is predicting something mathematically but doesn’t bring any value to the user. And you are bringing it to this Operate First community, where you have people using your tools and providing some traffic and usage to prototype and develop what you’re doing. Otherwise you would be working with just synthetic data on a hypothetical setup.

How can people reach out to you about this? How do you envision working with the community and engineers?

Ayse Coskun: Another great question. I mentioned earlier that there are now enablers for this research to happen at this scale. Similarly, one thing that enables this line of research is the community embrace of a strong open source culture over the last few years. If everything were proprietary, it would be difficult to make an impact in this space. We need real systems and real users to demonstrate things in a rapidly changing environment.

And how will we make that happen? Obviously we’ll make our artifacts available in places like GitHub, but maybe that is also a question for you! We’d like to work with Red Hat to identify the right communities to push some of these things upstream. Open sourcing is not the end of the story. I can make our work open, but if nobody looks at it, it’s hard to call that success. But if the right tools reach the right communities, that’s where we can make an impact.

Marcel Hild: If this project is a success, it can act as a role model for how other large projects work. You need the whole platform stack to create something that works; therefore, you need a community where the whole stack is practiced and where it’s available. The Operate First community is growing and can provide that.

Ayse Coskun: One thing I want to add is that Red Hat has already been doing some work in the AI for cloud operations area. One of our strategies is to avoid reinventing the wheel. It’s better to deploy our methods in existing infrastructures, tools, and projects. For instance, we identified Project Thoth, which is managed as part of Red Hat’s AI Center of Excellence, as a place where we can deploy some of our methods.

It’s already there. It has a community around it. So perhaps we can leverage Thoth and similar projects. This is a good way to reach an existing community, but offer them more, as opposed to putting standalone independent code repositories out there that people may or may not use. Instead, the plugin becomes available through something users are already engaged in.

Marcel Hild: Yes, absolutely. It’s AI for operations, and how can you engage with people in operations without operating something?

I also want to discuss a different side of your work. You’re the founder and organizer of the Advancing Diversity in Electronic Design Automation (DivEDA) forum. Tell us about that.

Ayse Coskun: One of the topics I’ve been passionate about in conjunction with my technical work is diversity.

There are far fewer women and underrepresented minority researchers in computer science and engineering in general. I’d like to change this so women and underrepresented minorities have a stronger role in shaping our future in computing.

There are many efforts towards the same goal at different layers: educational efforts, efforts at different companies, and so on. What we did was establish a forum as part of two conferences (one in the US and the other in Europe). The forum included panelists who talked about their experiences and how they overcame difficulties in their careers. There were panelists from different stages of their career, people from industry and academia, people who are more senior or more junior. It was a really lively, interactive environment. We also had some speed mentoring sessions where more senior, more accomplished people met with PhD students or people in the early stage of their careers. The idea was, okay, you meet a couple of people during the session, and maybe next time you see them, you can have more of a conversation and build a mentoring relationship.

Studies have shown that having a mentor helps a lot in shaping your career: making sure that you get nominated for the right things, that you attempt to get promoted and go for certain accomplishments. We wanted to create an enabler to facilitate that. We ran the forum a few times, and then the pandemic happened. This year DivEDA is finally back, but it’s going to be virtual.

Marcel Hild: That’s awesome. I would be super excited if you also bring some of these ideas to the AI for Cloud Ops project, or to the Operate First community. The more people we involve, the better. I don’t know if we get there in our lifetime, but I like the idea of making the lack of diversity an obsolete problem.

Ayse Coskun: Yes, I love that idea too. I would love it to become obsolete, but I think it’s going to be with us for a while. Unlike the continuous integration and deployment environment, societal change happens more slowly. [laughs] We can’t deploy it like new software a few times a day, but change does happen. And sometimes in our lifetime!

Marcel Hild: I’m looking forward to working with you in this Operate First context, and in this AIOps context. We’ve finally come to a point where we can go beyond just a proof of concept or a paper. Now we can bring all the stakeholders together and really do something.

Ayse Coskun: I agree. We are very excited and hoping for some cool demonstrations soon.
