Cost optimization is a core challenge for users of cloud computing platforms. An open source tool is now available to help address it.

Cloud computing has opened up vast possibilities by providing access to computing power, storage, and software over the internet. Its growth has helped drive the shift towards remote work and collaboration and has enabled small businesses to compete with larger ones. Cloud computing also brought greater scalability, flexibility, and cost-effectiveness to IT operations, facilitating innovation in data analysis, artificial intelligence, and internet-of-things applications.

However, migrating a business to the public cloud and ensuring that cloud resources are used as cheaply and efficiently as possible has proven to be highly challenging. To deploy a complex workload in the cloud, enterprises must weigh many technical, financial, and organizational factors, including technical complexity, security and compliance, business continuity, and the availability of the necessary skills and expertise. In this article, I will demonstrate a solution for one particularly acute aspect of this process: cost and resource optimization.

Optimizing the cost of running workloads on a public cloud involves many challenges. One of the main difficulties is understanding the cloud providers’ pricing models, which differ significantly in the services they cover and the costs associated with them. In addition, workloads that fluctuate over time make it hard to predict usage patterns and optimize resource allocation. Cost optimization also requires continuous monitoring and analysis of usage data to identify and eliminate waste, which is often time-consuming. Managing contracts and negotiations with cloud providers can also contribute significantly to the overall cost of running workloads. Together, these factors make cost optimization a complex, ongoing task that requires dedicated effort and expertise.

An explosion of options

Let’s start with the most fundamental problem. Suppose you have an application ready for deployment to the cloud. Such an application could be arbitrarily complex, contain multiple components with nontrivial dependencies, and involve diverse resource requirements. Assume for this example that resource consumption requirements (such as CPU and memory) are known in advance for all application components. (I will touch on resource requirement estimation later in this article.)

With all these assumptions in mind, how do you select the most fitting cloud provider for this application at the best price? How do you choose the number of virtual machines to acquire, their instance types, regions, and other crucial parameters? How do you compare the various offers from different providers and decide on the most economically reasonable one?

There is no simple answer to these questions, for several reasons. The leading cloud providers offer a wide variety of instance types and configurations, each with distinct specifications. According to our estimates, AWS alone offers over 9,000 different virtual machine options once all combinations of instance type, region, and operating system are taken into account. For real-life applications requiring a large number of VMs, the number of possible ways to select instances and colocate workload components on them grows exponentially. Examining every alternative by brute force and simply picking the cheapest one is highly impractical, and often outright impossible.
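To put these numbers in perspective, the short Python calculation below counts only the ways of choosing which VMs to buy, ignoring how components are placed on them. It assumes, for illustration, that a deployment is an unordered selection of k VMs from roughly 9,000 offerings, where the same offering may be picked more than once; that count is the multiset coefficient C(9000 + k - 1, k).

```python
from math import comb

# Approximate number of AWS VM offerings cited above
# (instance type x region x operating system).
OFFERINGS = 9_000


def vm_selections(offerings: int, k: int) -> int:
    """Ways to buy k VMs from `offerings` types, order ignored, repeats allowed.

    This is the multiset coefficient C(offerings + k - 1, k).
    """
    return comb(offerings + k - 1, k)


if __name__ == "__main__":
    for k in (1, 2, 5, 10, 20):
        # Scientific notation keeps the orders of magnitude readable.
        print(f"{k:>2} VMs: {vm_selections(OFFERINGS, k):.3e} selections")
```

Even two VMs already allow over 40 million selections, and the count keeps multiplying with every VM added, before a single placement decision has been made.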

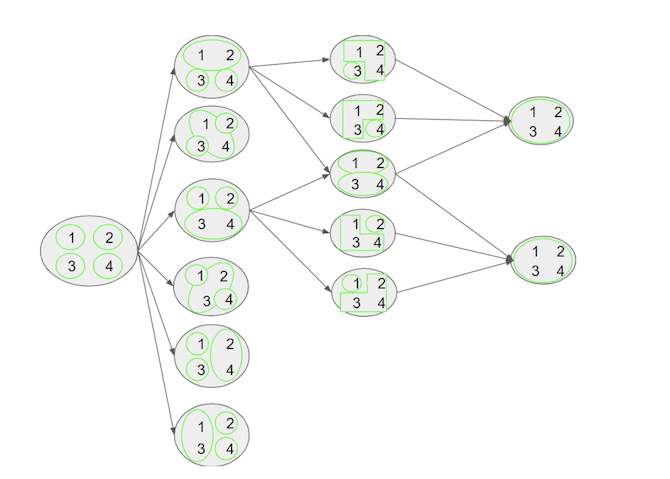

Figure 1 illustrates the problem of finding the cheapest deployment configuration using a toy example: an application with four components. The numbers denote the components, the green circles represent the VMs, and the black circles represent the different ways of allocating components to VMs. The allocation possibilities range from running the entire application on a single VM to placing each component on a dedicated instance, and their number grows at least exponentially with the number of components. In other words, even for a moderate number of components, the time required to consider every possibility quickly becomes prohibitive.
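The growth in Figure 1 can be quantified. Ignoring instance types, each way of grouping components onto VMs is a set partition of the components, and the number of set partitions of n items is the Bell number B(n). The Python sketch below enumerates the groupings for four components (there are 15) and computes Bell numbers with the Bell triangle; treating each allocation in the figure as a set partition is an assumption of this sketch.

```python
from typing import Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def partitions(items: Sequence[T]) -> Iterator[List[List[T]]]:
    """Yield every way to split `items` into non-empty groups (one group per VM)."""
    if not items:
        yield []
        return
    first, rest = items[0], items[1:]
    for smaller in partitions(rest):
        # Put `first` on a new, dedicated VM...
        yield [[first]] + smaller
        # ...or colocate it with each existing group in turn.
        for i in range(len(smaller)):
            yield smaller[:i] + [[first] + smaller[i]] + smaller[i + 1:]


def bell(n: int) -> int:
    """Count the partitions of n items using the Bell triangle."""
    row = [1]
    for _ in range(n):
        # Each new row starts with the last entry of the previous row;
        # every further entry adds the entry above-left.
        new_row = [row[-1]]
        for value in row:
            new_row.append(new_row[-1] + value)
        row = new_row
    return row[0]


if __name__ == "__main__":
    groupings = list(partitions([1, 2, 3, 4]))
    print(len(groupings), "ways to group 4 components onto VMs")
    for n in (4, 8, 12, 16):
        print(f"{n:>2} components: {bell(n):,} groupings")
```

B(10) is already 115,975, and Bell numbers grow even faster than a simple exponential. Since every group must additionally be matched to one of thousands of instance offerings, exhaustive search becomes impractical well before an application reaches realistic size.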

In many cases, decision makers simply reuse the same instance types and regions for all workloads. This strategy can lead to highly inefficient use of resources and significant financial losses, especially given the increasingly dynamic nature of the cloud market. Prices for specific instance types and regions can fluctuate rapidly and unexpectedly, and new, cheaper instance types may be introduced; with a fixed choice, these opportunities to save costs are missed.

Efficient selection

This article proposes a different approach. Instead of either limiting ourselves to a single configuration or traversing the entire enormous space of possibilities, we identify and extract a small set of the most promising candidates. We do this using advanced combinatorial optimization methods developed in academia over the past decades. This method does not guarantee that the set will include the absolute cheapest solution satisfying the application’s needs, but in the majority of cases the best candidate it finds will be sufficiently close.
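CCO’s own optimizer is metaheuristic-based, and the sketch below is not that algorithm. It is a generic Python illustration of extracting a small set of promising candidates instead of enumerating everything: randomized first-fit packing with many restarts (a simple stand-in heuristic), where each resulting VM is priced with the cheapest type from a hypothetical catalog that can hold it, and only the k cheapest distinct configurations are kept. The catalog, prices, component sizes, and all function names are invented for the example.

```python
import random
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Optional, Tuple

# Hypothetical types: a placement pairs each group of colocated components
# with the instance type chosen for the VM that hosts them.
Placement = Tuple[Tuple[FrozenSet[str], str], ...]
Requirements = Dict[str, Tuple[float, float]]  # component -> (vCPUs, GiB)


@dataclass(frozen=True)
class InstanceType:
    name: str
    cpu: float
    mem: float
    price: float  # hypothetical $/hour


def cheapest_fit(cpu: float, mem: float,
                 catalog: List[InstanceType]) -> Optional[InstanceType]:
    """Cheapest instance type with at least the given CPU and memory, if any."""
    fitting = [t for t in catalog if t.cpu >= cpu and t.mem >= mem]
    return min(fitting, key=lambda t: t.price) if fitting else None


def load(group: List[str], reqs: Requirements) -> Tuple[float, float]:
    """Total CPU and memory demanded by a group of colocated components."""
    return sum(reqs[c][0] for c in group), sum(reqs[c][1] for c in group)


def pack(order: List[str], reqs: Requirements, catalog: List[InstanceType],
         rng: random.Random, split_prob: float) -> Tuple[float, Placement]:
    """Randomized first-fit: colocate each component with the first group that
    still fits on some instance type, but occasionally force a new VM."""
    groups: List[List[str]] = []
    for comp in order:
        placed = False
        if groups and rng.random() >= split_prob:
            for group in groups:
                if cheapest_fit(*load(group + [comp], reqs), catalog) is not None:
                    group.append(comp)
                    placed = True
                    break
        if not placed:
            groups.append([comp])  # open a new VM for this component

    # Price every VM with the cheapest instance type that can hold its group.
    total = 0.0
    placement: List[Tuple[FrozenSet[str], str]] = []
    for group in groups:
        itype = cheapest_fit(*load(group, reqs), catalog)
        if itype is None:
            raise ValueError(f"no instance type can host {sorted(group)}")
        total += itype.price
        placement.append((frozenset(group), itype.name))
    # Canonical order so identical configurations compare equal.
    placement.sort(key=lambda p: sorted(p[0]))
    return total, tuple(placement)


def top_k_candidates(reqs: Requirements, catalog: List[InstanceType],
                     k: int = 3, iterations: int = 200,
                     split_prob: float = 0.3,
                     seed: int = 0) -> List[Tuple[float, Placement]]:
    """Run many randomized packings and keep the k cheapest distinct results."""
    rng = random.Random(seed)
    seen: Dict[Placement, float] = {}
    for _ in range(iterations):
        order = list(reqs)
        rng.shuffle(order)
        cost, placement = pack(order, reqs, catalog, rng, split_prob)
        seen[placement] = cost
    ranked = sorted(seen.items(), key=lambda item: item[1])
    return [(cost, placement) for placement, cost in ranked[:k]]


if __name__ == "__main__":
    catalog = [
        InstanceType("small", 2, 4, 0.10),
        InstanceType("medium", 4, 8, 0.19),
        InstanceType("large", 8, 16, 0.36),
    ]
    reqs = {"web": (1, 2), "api": (2, 3), "db": (2, 6), "cache": (1, 4)}
    for cost, placement in top_k_candidates(reqs, catalog):
        print(f"${cost:.2f}/h", [(sorted(g), t) for g, t in placement])
```

The work grows roughly linearly with the number of restarts rather than with the number of possible partitions; the price is that nothing guarantees the true optimum appears among the k results. For the toy inputs above, a hand check confirms that a single large instance ($0.36/h) beats every split.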

Cloud Cost Optimizer (CCO) is a project and an open source tool implementing the above paradigm for optimizing the cloud deployment costs of arbitrarily complex workloads. The result of a long-term collaboration on cloud computing between Red Hat Research and the Technion, Israel Institute of Technology, CCO brings academic knowledge together with Red Hat expertise to provide a solution suitable for a wide range of clients and applications. CCO makes it possible to quickly and efficiently calculate a cost-efficient deployment scheme for your application and to compare the offerings of different cloud providers, including the option of splitting your workload across multiple platforms.

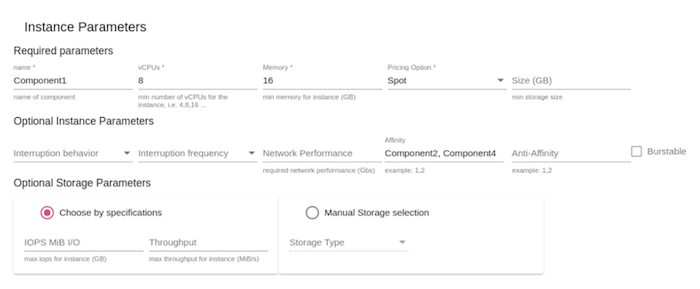

Figure 2 illustrates the input CCO receives for each component. First, the user provides the resource requirements of the workload in terms of CPU and memory; other metrics, such as storage and network capacity, could be introduced in a future version. Additional, largely optional input parameters include the relations between application components (for example, affinity and anti-affinity), the maximum tolerated interruption frequency, client-specific pricing deals with different cloud providers, and more. In particular, CCO can be instructed to consider spot instances, allowing customers to save up to 90% of the instance cost while giving up only a small degree of stability and reliability.
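To make this list of inputs concrete, here is one possible way to model and validate them in Python. The field names, units (interruption frequency and discounts as fractions), and the `violated_rules` helper are hypothetical and do not reflect CCO’s actual input format; the sketch only shows how per-component CPU and memory, affinity and anti-affinity relations, spot usage, a tolerated interruption frequency, and client-specific discounts could be captured and checked.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class Component:
    """One application component and its (hypothetical) placement rules."""
    name: str
    cpu: float                                            # required vCPUs
    memory_gib: float                                     # required memory, GiB
    affinity: Set[str] = field(default_factory=set)       # must share a VM
    anti_affinity: Set[str] = field(default_factory=set)  # must not share a VM


@dataclass
class OptimizationRequest:
    """Hypothetical request shape; not CCO's actual input format."""
    components: List[Component]
    allow_spot: bool = False
    # Highest tolerated spot interruption frequency, as a fraction (0.05 = 5%).
    max_interruption_frequency: Optional[float] = None
    # Client-specific discounts per provider, as fractions (0.15 = 15% off).
    discounts: Dict[str, float] = field(default_factory=dict)

    def validate(self) -> None:
        names = [c.name for c in self.components]
        if len(set(names)) != len(names):
            raise ValueError("component names must be unique")
        for c in self.components:
            if c.cpu <= 0 or c.memory_gib <= 0:
                raise ValueError(f"{c.name}: CPU and memory must be positive")
            related = c.affinity | c.anti_affinity
            if not related <= set(names) or c.name in related:
                raise ValueError(f"{c.name}: bad affinity reference")
            if c.affinity & c.anti_affinity:
                raise ValueError(f"{c.name}: conflicting affinity rules")
        freq = self.max_interruption_frequency
        if freq is not None and not 0 <= freq <= 1:
            raise ValueError("interruption frequency must be a fraction")
        if any(not 0 <= d < 1 for d in self.discounts.values()):
            raise ValueError("discounts must be fractions in [0, 1)")


def violated_rules(request: OptimizationRequest,
                   allocation: Dict[str, str]) -> List[str]:
    """List the affinity rules broken by a component -> VM allocation."""
    problems = []
    for c in request.components:
        for other in sorted(c.affinity):
            if allocation[c.name] != allocation[other]:
                problems.append(f"{c.name} must share a VM with {other}")
        for other in sorted(c.anti_affinity):
            if allocation[c.name] == allocation[other]:
                problems.append(f"{c.name} must not share a VM with {other}")
    return problems
```

An optimizer could call `validate` once per query and use a check like `violated_rules` to discard candidate allocations that break the user’s constraints.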

After these details are specified, the optimizer analyzes the provided data and calculates the mapping of workload components to VM instances that minimizes the expected monetary cost of deploying the application. The user can limit the search to a single cloud provider or choose a hybrid option that considers deploying the workload across multiple providers for a better price. (As of May 2023, CCO supports AWS and Azure. An intuitive and well-documented plugin interface makes it easy to add support for additional public clouds.)
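The plugin idea can be illustrated with an abstract base class: each provider plugin only has to report its offerings, and the search can then be restricted to one provider or left open for a hybrid plan. The `PricingPlugin` interface, the `Offer` fields, the provider labels, and every price below are hypothetical and do not describe CCO’s real plugin API; to keep the sketch short, each component simply gets its own cheapest VM, with no colocation.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional, Set, Tuple


@dataclass(frozen=True)
class Offer:
    provider: str
    instance_type: str
    region: str
    cpu: float
    memory_gib: float
    hourly_price: float  # hypothetical $/hour


class PricingPlugin(ABC):
    """Hypothetical contract a provider plugin could fulfil (not CCO's API)."""

    def __init__(self, name: str) -> None:
        self.name = name

    @abstractmethod
    def offers(self) -> Iterable[Offer]:
        """Return the VM offerings this provider currently sells."""


class StaticPlugin(PricingPlugin):
    """Toy plugin serving a fixed price list instead of calling a real API."""

    def __init__(self, name: str, offers: List[Offer]) -> None:
        super().__init__(name)
        self._offers = list(offers)

    def offers(self) -> Iterable[Offer]:
        return iter(self._offers)


def cheapest_offer(cpu: float, mem: float, plugins: List[PricingPlugin],
                   providers: Optional[Set[str]] = None) -> Optional[Offer]:
    """Cheapest fitting offer across the allowed providers (None = all)."""
    candidates = [
        offer
        for plugin in plugins
        if providers is None or plugin.name in providers
        for offer in plugin.offers()
        if offer.cpu >= cpu and offer.memory_gib >= mem
    ]
    return min(candidates, key=lambda o: o.hourly_price, default=None)


def plan(requirements: Dict[str, Tuple[float, float]],
         plugins: List[PricingPlugin],
         providers: Optional[Set[str]] = None) -> Dict[str, Offer]:
    """Give every component its own cheapest VM (no colocation, for brevity)."""
    result = {}
    for component, (cpu, mem) in requirements.items():
        offer = cheapest_offer(cpu, mem, plugins, providers)
        if offer is None:
            raise ValueError(f"no allowed offer fits {component}")
        result[component] = offer
    return result


def hourly_cost(assignment: Dict[str, Offer]) -> float:
    return sum(o.hourly_price for o in assignment.values())


if __name__ == "__main__":
    aws = StaticPlugin("aws", [Offer("aws", "small", "region-1", 2, 4, 0.10),
                               Offer("aws", "large", "region-1", 8, 32, 0.40)])
    azure = StaticPlugin("azure", [Offer("azure", "small", "region-1", 2, 4, 0.12),
                                   Offer("azure", "large", "region-1", 8, 32, 0.35)])
    reqs = {"web": (1, 2), "db": (6, 24)}
    for label, allowed in (("AWS only", {"aws"}), ("Azure only", {"azure"}),
                           ("hybrid", None)):
        print(f"{label}: ${hourly_cost(plan(reqs, [aws, azure], allowed)):.2f}/h")
```

With these toy prices, the hybrid plan ($0.45/h) undercuts both single-provider plans ($0.50/h and $0.47/h), because each component lands on whichever provider sells its size more cheaply.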

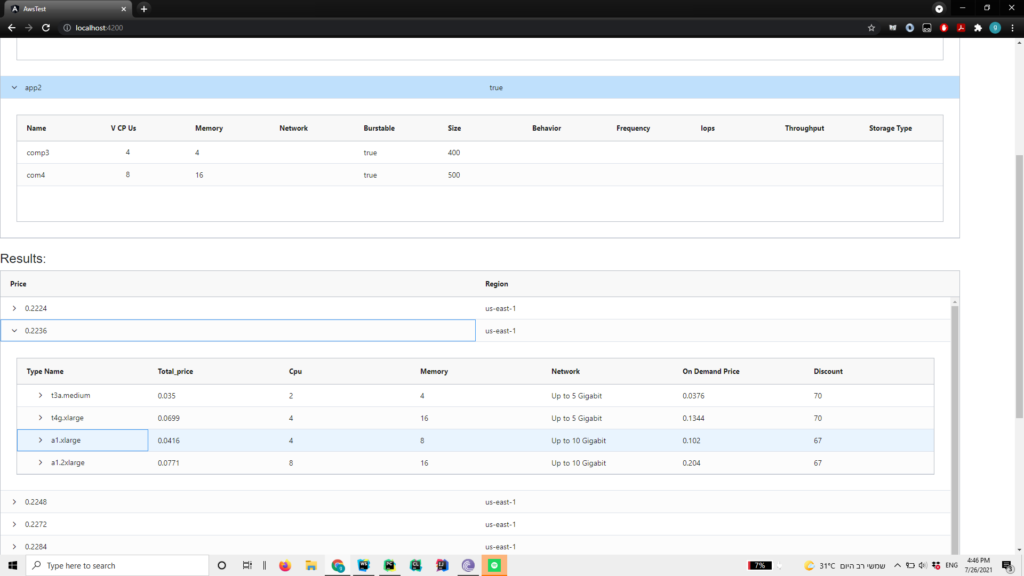

Figure 3 shows a sample result of running CCO on a simple application. Given a user query, CCO produces a list of deployment configurations sorted by ascending cost. Each configuration lists the instances to be used, together with a full component-to-instance allocation map.
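The shape of such a result can be sketched as a small data model: each configuration holds the instances to launch plus a component-to-instance map, and a list of configurations is validated and sorted by ascending cost. The class names, the hourly-price field, and the sample values are hypothetical and are not CCO’s output format.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass(frozen=True)
class Instance:
    instance_type: str
    hourly_price: float  # hypothetical $/hour


@dataclass
class Configuration:
    instances: Dict[str, Instance]  # instance id -> instance to launch
    allocation: Dict[str, str]      # component name -> instance id

    @property
    def hourly_cost(self) -> float:
        return sum(inst.hourly_price for inst in self.instances.values())

    def validate(self, components: Sequence[str]) -> None:
        """Every component placed once on a listed instance; no idle instances."""
        if set(self.allocation) != set(components):
            raise ValueError("allocation must cover exactly the requested components")
        used = set(self.allocation.values())
        if used - set(self.instances):
            raise ValueError("allocation refers to an unknown instance")
        if set(self.instances) - used:
            raise ValueError("configuration contains an idle instance")


def rank(configs: List[Configuration],
         components: Sequence[str]) -> List[Configuration]:
    """Validate each configuration and sort them by ascending hourly cost."""
    for config in configs:
        config.validate(components)
    return sorted(configs, key=lambda c: c.hourly_cost)


def describe(config: Configuration) -> str:
    """Render one configuration as a short human-readable summary."""
    lines = [f"${config.hourly_cost:.2f}/hour"]
    for iid, inst in sorted(config.instances.items()):
        hosted = sorted(c for c, target in config.allocation.items() if target == iid)
        lines.append(f"  {iid} ({inst.instance_type}): {', '.join(hosted)}")
    return "\n".join(lines)
```

Keeping the allocation map as a dictionary keyed by component guarantees that each component is placed exactly once; `validate` then only has to check that the keys and targets line up with the requested components and listed instances.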

In addition to the graphical user interface, CCO exposes an API and can be executed as a background task or incorporated into a CI/CD pipeline. This is especially useful for incremental deployment recalculations. As discussed above, pricing and availability of instance types change over time, so the only way to ensure maximum cost savings is to rerun the cost optimization routine periodically and adjust the deployment as needed.
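When the optimizer runs on a schedule, the pipeline still has to decide whether a newly computed plan is worth acting on. One simple rule, offered here as an assumption rather than anything CCO prescribes, is to redeploy only if the projected savings over a planning horizon H exceed the one-time migration cost M: net = (c_current - c_new) × H - M. The sketch below encodes that rule; the function name, parameters, and the migration-cost input are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RedeployDecision:
    redeploy: bool
    net_savings: float  # projected $ saved over the horizon, after migration cost


def evaluate_redeploy(current_hourly: float, candidate_hourly: float,
                      migration_cost: float, horizon_hours: float,
                      min_net_savings: float = 0.0) -> RedeployDecision:
    """Decide whether a freshly optimized plan is worth switching to.

    net = (current_hourly - candidate_hourly) * horizon_hours - migration_cost
    """
    if horizon_hours <= 0:
        raise ValueError("horizon must be positive")
    if migration_cost < 0:
        raise ValueError("migration cost cannot be negative")
    net = (current_hourly - candidate_hourly) * horizon_hours - migration_cost
    return RedeployDecision(redeploy=net > min_net_savings, net_savings=net)


if __name__ == "__main__":
    # Hypothetical numbers: the current plan vs. the plan from the latest run.
    print(evaluate_redeploy(1.20, 0.95, migration_cost=50, horizon_hours=720))
```

For example, moving from $1.20/h to $0.95/h saves $180 over a 720-hour month; with a $50 migration cost, the net gain of $130 favors redeploying, whereas a drop from $1.00/h to $0.98/h would not pay for the move.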

The goal of the CCO project was merely to create a prototype of an innovative cloud cost optimization solution. However, even this prototype can help individual users and enterprises save money in several ways. By calculating and returning the cheapest combination of instances it finds that satisfies the client’s specifications, CCO helps users avoid unnecessary costs resulting from selecting the wrong instance type or from unintentional overprovisioning. Further, by comparing deployment options across cloud providers, the tool helps enterprises choose the provider that best fits their workload needs and budget. This could lead to significant savings, especially for organizations running many workloads on multiple cloud providers. Finally, automating the selection of the best instances and regions for a given workload reduces the need for manual monitoring and management.

Future enhancements

While a fully functional version of CCO is available for use, there is no shortage of possible extensions and further improvements. Future versions of the cloud cost optimizer could take into account additional considerations such as availability, reliability, compliance, and regulations. In addition, the metaheuristic-based optimization algorithm employed by the current version could be augmented with a machine learning approach such as deep reinforcement learning. State-of-the-art AI/ML tools have the potential to learn from previous usage patterns and make recommendations for future resource allocation, predict future prices of instances based on the market situation, estimate future interruption rates of spot instances, and so on. Incorporating these capabilities into CCO is an exciting and promising avenue for our future work.

One particularly interesting and relevant problem in this context is accurately estimating the resource requirements of cloud workloads. As mentioned above, CCO requires per-component CPU and memory requirements as the input for its optimization algorithm. However, manually estimating resource consumption patterns is notoriously difficult for most real-life applications. To address this shortcoming, we are working on another tool, codenamed AppLearner. AppLearner uses advanced ML techniques to learn the application’s behavior from past runs and predict its future CPU and memory consumption over time. The forecasting horizon can range from a few hours to several months, depending on data availability and the target application’s complexity. Ultimately, we intend AppLearner and CCO to work in tandem, with the former’s output serving as the latter’s input. Unlike CCO, AppLearner is still a work in progress, and we expect the prototype to become available later this year.
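AppLearner relies on ML models; the sketch below instead substitutes a classical statistical method, Holt’s linear-trend exponential smoothing, purely to illustrate the input/output contract: a history of CPU or memory usage goes in, and a per-component requirement that an optimizer such as CCO could consume comes out. The function names, the smoothing parameters, the 20% headroom, and the sample usage history are arbitrary assumptions.

```python
from typing import List, Sequence


def holt_forecast(series: Sequence[float], horizon: int,
                  alpha: float = 0.5, beta: float = 0.3) -> List[float]:
    """Holt's linear-trend exponential smoothing.

    level_t = alpha * y_t + (1 - alpha) * (level_{t-1} + trend_{t-1})
    trend_t = beta * (level_t - level_{t-1}) + (1 - beta) * trend_{t-1}
    forecast_{t+h} = level_t + h * trend_t
    """
    if len(series) < 2:
        raise ValueError("need at least two observations")
    if horizon < 1:
        raise ValueError("horizon must be at least one step")
    if not (0 < alpha <= 1 and 0 < beta <= 1):
        raise ValueError("alpha and beta must lie in (0, 1]")
    # Initialize from the first observation and the first difference.
    level, trend = series[0], series[1] - series[0]
    for y in series[1:]:
        prev_level = level
        level = alpha * y + (1 - alpha) * (level + trend)
        trend = beta * (level - prev_level) + (1 - beta) * trend
    return [level + h * trend for h in range(1, horizon + 1)]


def estimate_requirement(series: Sequence[float], horizon: int,
                         headroom: float = 1.2) -> float:
    """Size a component for the peak of its forecast plus a safety margin."""
    peak = max(max(holt_forecast(series, horizon)), 0.0)  # usage cannot go negative
    return headroom * peak


if __name__ == "__main__":
    # Hypothetical hourly CPU usage (in vCPUs) of one component.
    cpu_history = [1.1, 1.3, 1.2, 1.5, 1.6, 1.8, 1.7, 2.0]
    estimate = estimate_requirement(cpu_history, horizon=24)
    print(f"CPU to request for the next 24 h: {estimate:.2f} vCPUs")
```

Sizing for the forecast peak rather than the average keeps the estimate on the safe side for a cost optimizer, which would otherwise happily pick an instance that is too small for the coming load.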

Those interested in finding out more about CCO, AppLearner, and the rest of our cloud computing projects, or those looking for collaboration and contribution opportunities, are kindly invited to contact Dr. Ilya Kolchinsky at ikolchin@redhat.com. Details about all Red Hat Research projects can be found in the Research Directory on the Red Hat Research website.